AI governance you can truly control.

AI stopped being a toy for the innovation lab long ago. Every department now fires prompts into the cloud. And that is precisely where it hurts: how do you keep track of risks, data flows and new regulations as the AI Act risk and control framework keeps expanding? Spartner combines governance, risk assurance and everyday operations in one clear platform so IT and the business can breathe again.

What is at stake?

Without structure, data, accountability and compliance descend into chaos.

Automating

When every employee writes their own prompts, unscreened data flows into GPT-like models. The result: data leaks, reputational damage and angry regulators.

Integrating

A proliferation of chatbot tools creates silent silos, when what you really need is uniform quality standards and audit trails.

Innovating

When governance is watertight you can roll out new AI features faster because you no longer have to renegotiate the legal details every time.

Safeguarding

From risk classification to log archiving, a control library makes compliance with the AI Act risk and control framework demonstrable.

Why a central approach works

A sushi chef cuts fish with one knife, not ten, because consistency preserves the flavour. The same applies to AI governance: one platform as the single source of truth prevents a sour aftertaste with auditors.

We often see organisations discover very late just how many departments run their own AI scripts.

Once all requests run through a single gateway, patterns, errors and opportunities become visible immediately.

Real-time dashboards let security teams intervene before a regulator even asks a question.

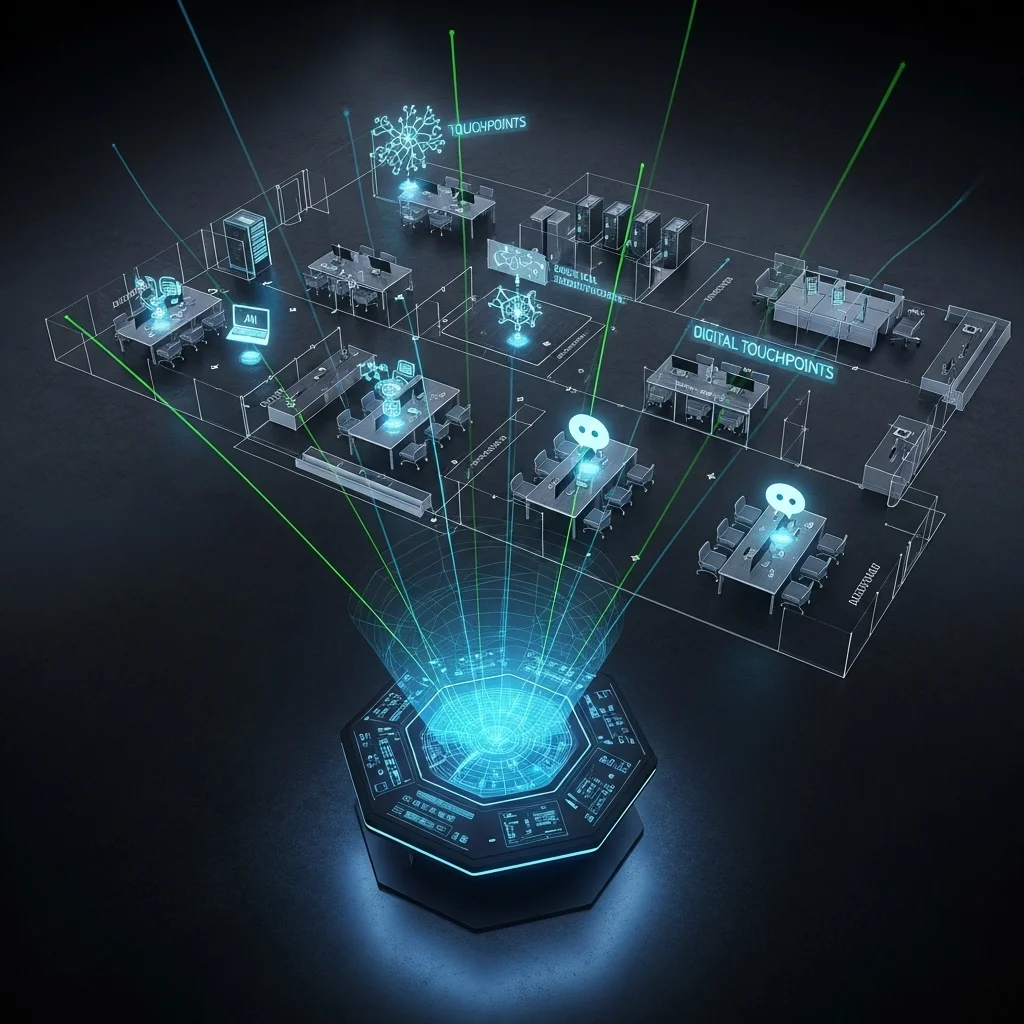

Full insight into prompts and data flows

Automatic classification according to AI Act risk levels

Reports auditors understand without a technical deep dive

Integrations with existing service desk and chatbot workflows

Step by step towards robust AI governance.

Follow these steps to get AI governance in order quickly and demonstrably.

Step 1: Inventory.

We start with a scan of all current AI touch points. We regularly find that companies have forgotten about half of them, especially the no-code tools that marketing switched on 'just quickly'.

Step 2: Classify.

Every use case is placed in a risk assurance matrix (low, medium, high or prohibited), which makes it immediately clear which controls are mandatory.
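To make the idea of a risk matrix concrete, here is a minimal sketch of how a use case could be mapped to a level and then to its mandatory controls. It is a hypothetical illustration, not Spartner Mind's actual logic and not a legal classification under the AI Act. The names `UseCase`, `classify`, `CONTROLS` and the control labels are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    PROHIBITED = 4


@dataclass
class UseCase:
    name: str
    prohibited_practice: bool  # e.g. social scoring
    affects_rights: bool       # e.g. HR selection, credit decisions
    personal_data: bool        # prompts may contain personal data
    external_facing: bool      # customers see the output


# Hypothetical control catalogue: higher levels repeat the controls of lower ones.
CONTROLS = {
    RiskLevel.LOW: ["logging"],
    RiskLevel.MEDIUM: ["logging", "pseudonymisation", "monthly_review"],
    RiskLevel.HIGH: ["logging", "pseudonymisation", "monthly_review",
                     "human_review_in_advance", "explainability_trace"],
    RiskLevel.PROHIBITED: [],  # prohibited use cases are not deployed at all
}


def classify(uc: UseCase) -> RiskLevel:
    """Place a use case in the matrix; the first matching rule wins."""
    if uc.prohibited_practice:
        return RiskLevel.PROHIBITED
    if uc.affects_rights:
        return RiskLevel.HIGH
    if uc.personal_data or uc.external_facing:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def mandatory_controls(uc: UseCase) -> list:
    """Look up which controls the use case's risk level requires."""
    return CONTROLS[classify(uc)]


if __name__ == "__main__":
    cv_screening = UseCase("CV screening", False, True, True, False)
    print(classify(cv_screening).name, mandatory_controls(cv_screening))
```

Ordering the rules from most to least severe ensures that a use case matching several criteria always lands in its highest applicable level.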

Step 3: Embed.

Next we connect Spartner Mind to your identity platform. A solid governance framework stands or falls with proper authentication and role management.

Step 4: Monitor & alert.

Dashboards show who requested what and when, and which data flows to external LLMs. If something spikes, an automatic alert delivers a digital rap on the knuckles.
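A spike alert can be as simple as counting each user's requests in a sliding time window. The sketch below assumes that is the trigger. `SpikeDetector`, `window_seconds` and `max_requests` are hypothetical names and thresholds, not settings of the platform.

```python
from collections import defaultdict, deque


class SpikeDetector:
    """Flags a user whose request count within a sliding time window exceeds a limit.

    window_seconds and max_requests are hypothetical tuning parameters.
    """

    def __init__(self, window_seconds: float = 60.0, max_requests: int = 20):
        self.window = window_seconds
        self.max_requests = max_requests
        self._events = defaultdict(deque)  # user -> timestamps inside the window

    def record(self, user: str, timestamp: float) -> bool:
        """Register one request; return True if it pushes the user over the limit."""
        events = self._events[user]
        events.append(timestamp)
        # Drop requests that have fallen out of the window.
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        return len(events) > self.max_requests


if __name__ == "__main__":
    detector = SpikeDetector(window_seconds=60, max_requests=3)
    for t in [0, 10, 20, 30]:
        if detector.record("alice", t):
            print(f"ALERT: alice exceeded 3 requests/60s at t={t}")
```

A deque keeps each check cheap, because old timestamps are only ever removed from the front. The same pattern works for other signals, such as the volume of data sent to an external LLM.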

Step 5: Report.

Monthly reports align seamlessly with the AI Act risk and control framework so compliance teams save time and auditors leave with a smile.

Step 6: Continuous improvement.

Regulations change, employees find new tricks; we adjust policies and models without disrupting your daily work.

The philosophical layer of AI governance

Freedom versus responsibility

AI can unleash creativity, but without boundaries that freedom turns into arbitrariness. Governance is the compass that sets the course.

Who is steering the machine?

People build models, but models influence people in return. That is why we see governance not as a brake, but as a guardrail on a winding mountain road.

Transparency is not a luxury

People often say "black box" and shrug. At Spartner we demand logic traces so decisions remain explainable, even when the underlying model is complex.

Getting hands-on with controls

Practical tips you can apply tomorrow

Set up a single proxy endpoint for all AI API calls.

Log full prompts and responses, but pseudonymise sensitive fields.

Link risk labels to user roles so high-risk requests require review in advance.

Apply retention periods; not every log needs to live forever.

Have legal review every model update to your chatbot.
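Several of these tips fit together in one small gateway: a single entry point that checks the risk label against the user's role, logs the prompt and the response in pseudonymised form, and purges logs after a retention period. The sketch below is hypothetical throughout. `gateway`, `AuditLog`, `ALLOWED_WITHOUT_REVIEW`, the 90-day period and the choice to pseudonymise only e-mail addresses are assumptions for illustration, and `call_model` stands in for whichever LLM client you actually use.

```python
import hashlib
import hmac
import re
import time
from dataclasses import dataclass, field

# Hypothetical secret for deriving stable pseudonyms; in practice it belongs in a vault.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
RETENTION_SECONDS = 90 * 24 * 3600  # hypothetical 90-day retention period

# Hypothetical mapping: which roles may send each risk label without prior review.
ALLOWED_WITHOUT_REVIEW = {
    "low": {"employee", "reviewer"},
    "medium": {"employee", "reviewer"},
    "high": {"reviewer"},
}


def pseudonymise(text: str) -> str:
    """Replace e-mail addresses with a keyed hash so logs stay linkable but unreadable."""
    def _token(match) -> str:
        digest = hmac.new(PSEUDONYM_KEY, match.group(0).encode(), hashlib.sha256).hexdigest()
        return f"<email:{digest[:12]}>"
    return EMAIL_RE.sub(_token, text)


@dataclass
class AuditLog:
    records: list = field(default_factory=list)

    def append(self, user: str, risk: str, prompt: str, response: str, now: float) -> None:
        # Store both sides of the exchange: the answer can carry the real risk.
        self.records.append({
            "ts": now, "user": user, "risk": risk,
            "prompt": pseudonymise(prompt), "response": pseudonymise(response),
        })

    def purge_expired(self, now: float) -> int:
        """Delete records older than the retention period; return how many were removed."""
        keep = [r for r in self.records if now - r["ts"] < RETENTION_SECONDS]
        removed = len(self.records) - len(keep)
        self.records = keep
        return removed


def gateway(user, role, risk, prompt, call_model, log: AuditLog, now=None) -> str:
    """Single entry point for all AI calls: check the role, call the model, log the exchange."""
    now = time.time() if now is None else now
    # Unknown labels (e.g. "prohibited") map to an empty set, so they are always blocked.
    if role not in ALLOWED_WITHOUT_REVIEW.get(risk, set()):
        raise PermissionError(f"{risk}-risk request by role '{role}' needs review first")
    response = call_model(prompt)
    log.append(user, risk, prompt, response, now)
    return response
```

A keyed hash (HMAC) rather than a plain hash makes it harder to reverse pseudonyms by hashing guessed e-mail addresses, while the same address still maps to the same token across log entries.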


In practice, we see many organisations store only the user input and not the model's answer, even though the answer may carry the actual risk (for example, incorrect advice).

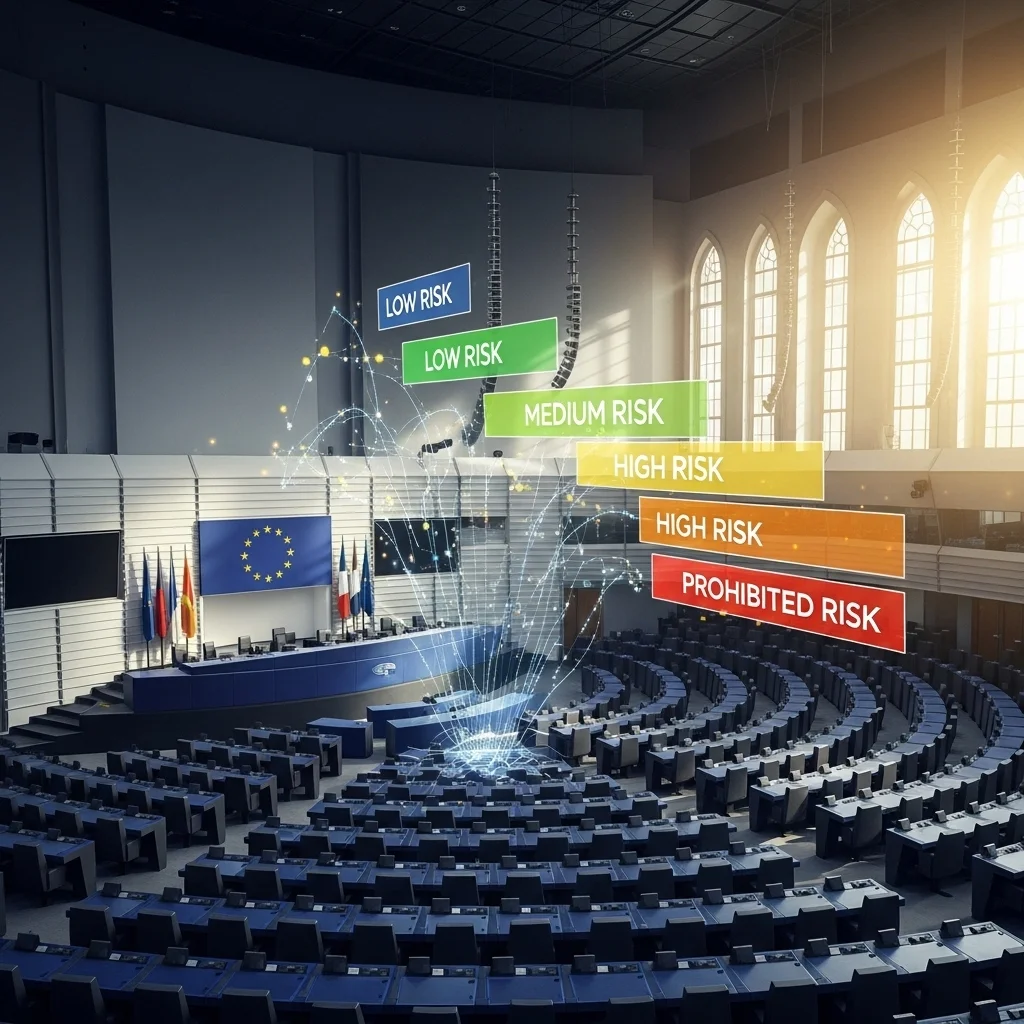

A critical look at the AI Act

The rules are in motion, and so is your organisation

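The AI Act risk and control framework distinguishes four risk classes: unacceptable (prohibited), high, limited and minimal risk. High-risk systems, such as those used for HR selection, demand rigorous governance. Generative chatbots usually rank lower, but they can still escalate, for example once their output starts feeding decisions about people.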

Uncertainties remain

Not all secondary legislation has been finalised, so build your governance modularly. Today's label X may become X+ tomorrow.

Never too late to start

Waiting for the final texts sounds sensible, but it will leave you behind. By setting up risk assurance processes now you will save months later.

Ready to get AI under control?

Book a no-obligation session with our governance specialists and discover how quickly you can align risks, controls and innovation. We listen, ask critical questions and show live how Spartner Mind tames your AI landscape.

Frequently asked questions

Practical answers to the questions we hear most often.

What exactly is AI governance? 🤔

AI governance is the set of processes, rules and tools that allow you to develop and use AI responsibly. Think risk assurance, compliance checks and continuous monitoring.

Do I need a separate team for governance? 🧐

Not necessarily. We see organisations succeed with a cross-functional squad where legal, IT and the business decide together. Spartner Mind supports that collaboration.

Can I keep using my existing chatbots? 💬

Yes. Through standard APIs we integrate popular platforms so your current investments remain intact while governance layers are added on top.

How does this relate to GDPR? 📜

The AI Act complements GDPR. Our tooling covers both: privacy filters for GDPR, and AI-specific controls such as explainability logs for the AI Act.

Is everything automatically high risk under the AI Act? 😅

Certainly not. Most internal productivity bots fall into a lower risk class. But as soon as decisions affect people's lives or rights, the risk level rises.

What will an audit cost me if I do nothing? 💸

A lot. Under the AI Act, fines for the most serious violations can reach €35 million or 7% of worldwide annual turnover, whichever is higher. Better to avoid that by implementing governance in time.

How quickly can Spartner Mind go live? ⏱️

We won't commit to an exact number of weeks, but thanks to standard integrations a proof of value can be running surprisingly fast.

Will my IT team get extra work? 🤖

At first there is some coordination work. After that there are far fewer fires to put out, because automation prevents ad-hoc tickets.

Does the platform support multiple LLM vendors? 🌐

Absolutely. We connect to OpenAI, Azure OpenAI, Google Gemini and even on-premise Llama clusters.

Am I not confusing governance with innovation blockers? 🚀

On the contrary. With clear ground rules you dare to experiment faster because everyone knows where the boundaries are.
