OpenAI and Foxconn team up to build AI datacentre hardware in the US

OpenAI and hardware giant Foxconn have entered into a strategic partnership to design and manufacture custom AI datacentre hardware in the United States. The deal forms part of a broader global race for compute power, in which hyperscalers, chip manufacturers and cloud providers are investing billions in infrastructure for large-scale AI models.

OpenAI wants more control over its hardware stack

Until now, OpenAI has relied heavily on existing cloud partners and standard datacentre architectures to roll out GPT-5 and its successors. The collaboration with Foxconn marks a step towards greater vertical integration of its own infrastructure.

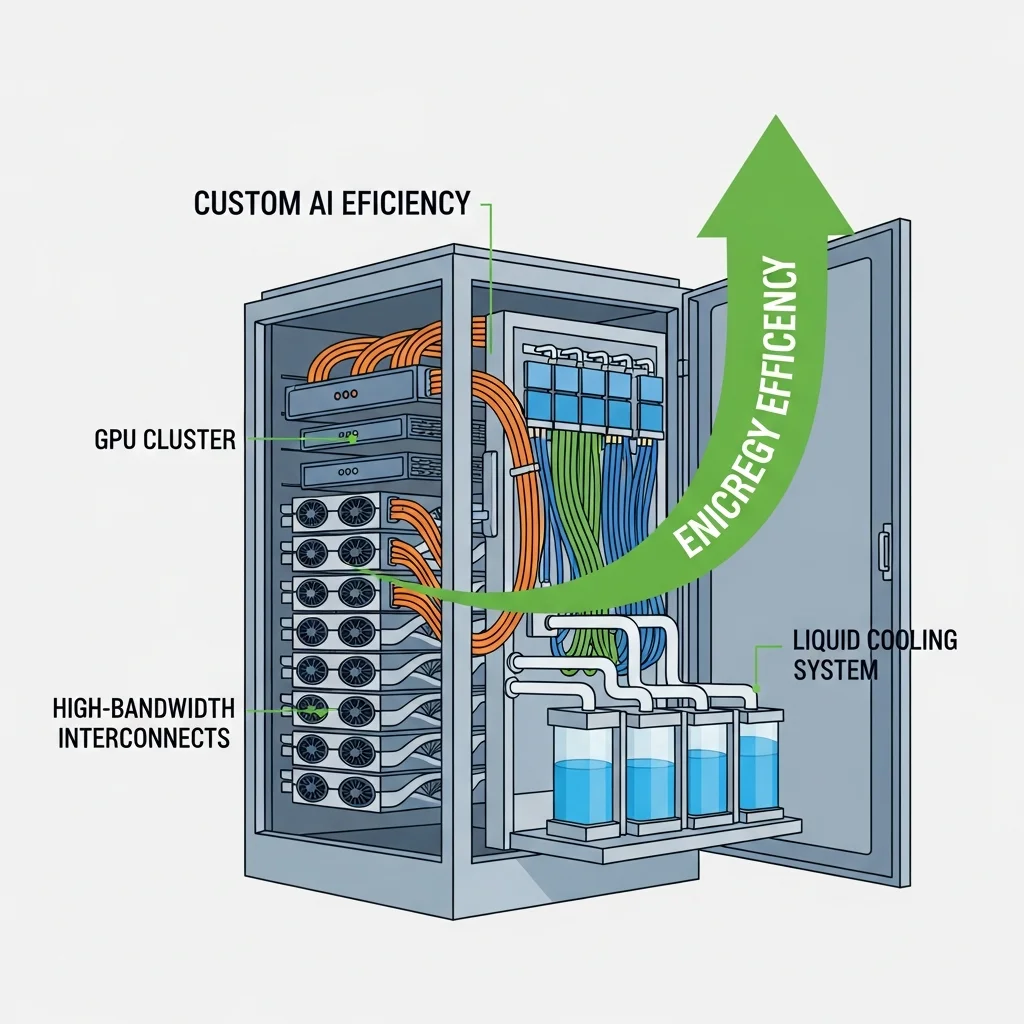

Instead of remaining fully dependent on generic servers and off-the-shelf GPU clusters, the companies are working on bespoke racks, cooling and power delivery optimised for large-scale training and inference workloads. This includes high-density GPU configurations, improved cooling that allows more power per rack, and granular monitoring of power usage and temperature.

For the AI market as a whole, this reinforces how central hardware optimisation has become. It is no longer just about the model; the efficiency with which every watt and every GPU minute is used also counts.

Foxconn strengthens its position in AI infrastructure

Foxconn is best known for producing iPhones and other consumer electronics, but in recent years it has been steadily building a bigger role in datacentre and server hardware. By partnering with a leading AI player, Foxconn gains direct insight into the specific requirements of large-scale AI workloads.

This moves the company further towards the heart of the AI economy. Instead of merely assembling generic servers, Foxconn can now design specialised AI racks and complete datacentre solutions, including customised cooling, power and mechanics for heavy GPU and accelerator cards.

For the United States, the partnership also strengthens domestic production of AI infrastructure. Part of the production and integration of critical hardware will physically take place on US soil, which is important in the context of geopolitical tensions and export restrictions around high-end chips.

What it means for the global compute power race

Worldwide demand for AI compute is exploding. Large language models are getting bigger, multimodal models process text, images, audio and video simultaneously, and more and more companies want to train or host their own specialised models. Who comes out ahead in this race depends largely on three factors:

Availability of advanced chips such as Nvidia H200 and its successors.

The efficiency and scalability of the datacentre architecture.

The extent to which companies can tailor their infrastructure to their specific AI workloads.

The partnership between OpenAI and Foxconn mainly addresses the second and third points. By developing custom hardware, OpenAI can:

Improve the energy efficiency per model run.

Reduce latency for inference workloads.

Increase datacentre capacity without consuming proportionally more space or power.

For developers and businesses that use OpenAI's AI services, this could eventually lead to more stable capacity, faster response times and possibly new types of services that are only viable with highly optimised infrastructure.

Impact on European and Dutch AI strategies

Although this collaboration initially targets the United States, it also indirectly affects Europe and the Netherlands. The concentration of AI compute with a handful of major players continues to grow, while the underlying hardware stack becomes increasingly specific and proprietary.

This raises two strategic questions for European policymakers and companies:

How do you avoid becoming overly dependent on a small number of foreign infrastructure suppliers?

What role does Europe want to play in its own AI datacentres, chip production and open hardware standards?

In the Netherlands, an additional question is how digital infrastructure, energy policy and AI innovation can be aligned. AI datacentres demand large amounts of electricity and cooling, while governments are simultaneously steering towards sustainability and careful spatial planning of new datacentre sites.

The move by OpenAI and Foxconn makes it clear that it is no longer just about software and algorithms. Whoever controls the hardware enjoys a powerful position in the AI value chain.

Expected next steps and market movements

The partnership could trigger a domino effect in the AI infrastructure market. Other major AI players may respond with their own hardware initiatives or deepen existing partnerships with chip and server manufacturers.

Possible next steps include:

New generations of AI servers that are specifically optimised for multimodal models and agentic AI.

More finely tuned combinations of GPUs, networking components and storage aimed at minimising training and inference bottlenecks.

Further integration of energy management, for instance by directly using renewable sources or recovering heat from datacentres.

For now, the news mainly underlines that AI is increasingly becoming a game of infrastructure. The race for the best models is tightly intertwined with the race for the most efficient and scalable hardware.