Microsoft, NVIDIA and Anthropic strike mega deal, Claude now live on Azure

A new strategic partnership between Microsoft, NVIDIA and Anthropic sets the tone for the next phase of generative AI. The three companies are combining cloud infrastructure, GPU capacity and frontier models to make Claude widely available on Azure, backed by multi-billion-dollar investments in compute power and long-term purchase commitments.

---

Summary

Microsoft, NVIDIA and Anthropic have announced a large-scale collaboration that further strengthens Azure's position as an AI cloud. Anthropic is scaling its Claude models on Azure with NVIDIA hardware, while Microsoft commits to substantial long-term investments in GPU capacity and AI infrastructure. The deal highlights the shift towards specialised AI cloud stacks and gives organisations more choice when working with multiple frontier models.

---

Strategic alliance between three AI heavyweights

The core of the announced collaboration can be summed up quite simply: Anthropic supplies the frontier models, NVIDIA the specialised AI hardware and Microsoft the hyperscale cloud environment that brings the two together. Reports speak of billions in investments and compute commitments that should enable Anthropic to scale Claude aggressively.

For Microsoft, this means a further broadening of the AI offering within Azure. In addition to its own models and the existing partnership with OpenAI, Azure customers now gain access to Anthropic Claude through the familiar cloud environment and tooling. This turns Azure into a platform where several leading LLM families can run side by side.

For NVIDIA, this is yet another confirmation of its dominant role in the AI hardware landscape. The deal shows that large-scale AI providers are trying to secure their future capacity needs early, signing long-term agreements for GPU deliveries and data-centre integration.

---

Claude as a fully-fledged alternative in the enterprise cloud

With Claude now available on Azure, competition between frontier models will be felt more keenly within the enterprise market. Anthropic has long positioned Claude as a model with strong reasoning abilities, rigorous safety guardrails and robust support for complex tasks. Typical enterprise use cases include:

advanced code assistance and software development

complex search and analytics tasks on enterprise data

multi-step agents and workflows

document analysis, summarisation and knowledge retrieval

This step fits into a broader trend in which major cloud platforms are betting on model diversity. Instead of revolving around a single AI ecosystem, the market is becoming increasingly multi-vendor, with freedom of choice and the ability to combine models taking centre stage.

---

Implications for the global AI infrastructure

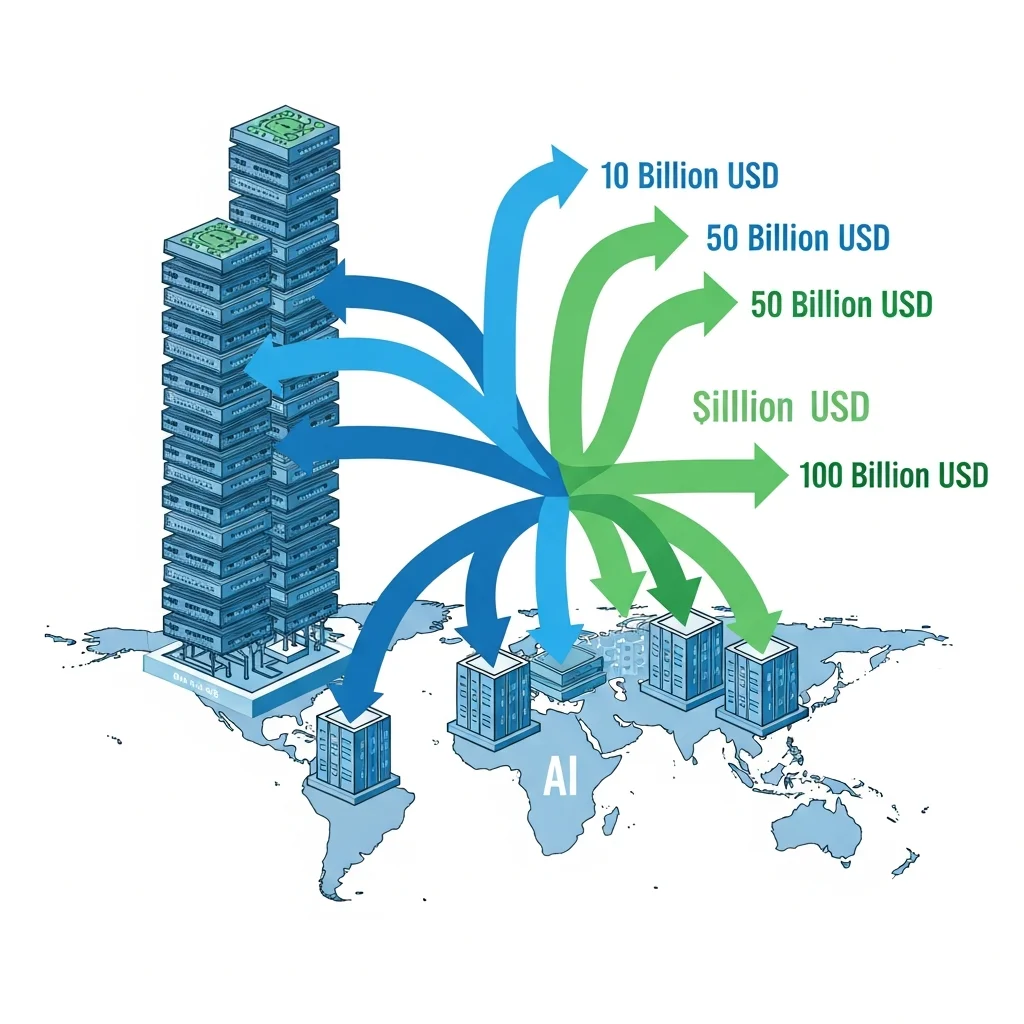

The scale of the announced investments makes it clear that AI infrastructure is evolving into its own category alongside traditional cloud capacity. Multi-billion-dollar investments in GPU clusters, specialised network architectures and cooling are required to train and run the next generation of models.

The collaboration between Microsoft, NVIDIA and Anthropic increases the pressure on competitors to sign similar long-term deals. The big hyperscalers are trying to secure their position by:

pre-booking compute via multi-year contracts

securing exclusive or early access to frontier models

enriching their platforms with specialised AI services and tooling

For end users and businesses, this cuts both ways: more capacity and faster access to new models, but also greater dependency on a small number of very well-capitalised players in the infrastructure chain.

---

Compliance, safety and AI governance under the spotlight

In addition to technology and infrastructure, governance also plays a prominent role in the collaboration. Anthropic explicitly positions itself as a company focused on safety, interpretability and the controlled roll-out of powerful models. In an enterprise environment such as Azure this carries extra weight, because organisations must comply with regulations around privacy, data security and responsible AI use.

The combination of a safety-oriented model supplier with a cloud platform that offers extensive compliance tooling makes it possible to:

link use of Claude to existing identity and access management solutions

control data residency and storage locations within Azure regions

set up audit trails, logging and monitoring around LLM usage

implement sector-specific policies, for example in finance, healthcare or government
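As a rough illustration of the access-control and audit-trail points above, the sketch below wraps a model call with a role check and an audit record. It is a minimal sketch under stated assumptions, not an Azure or Anthropic API: `AuditedModel`, `ALLOWED_ROLES` and `fake_model` are hypothetical names, the role list is hard-coded rather than fetched from an identity provider, and the `region` field is only a label that does not enforce data residency.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical role allowlist; a real deployment would query its identity provider.
ALLOWED_ROLES = {"analyst", "engineer"}


@dataclass
class AuditedModel:
    """Wraps any text-in/text-out model call with an access check and an audit log."""

    call_model: Callable[[str], str]  # stand-in for a real model client
    region: str                       # label only; does not enforce residency
    log: list = field(default_factory=list)

    def ask(self, user: str, role: str, prompt: str) -> str:
        allowed = role in ALLOWED_ROLES
        # Store a hash instead of the prompt so the log holds no raw business data.
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "region": self.region,
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "allowed": allowed,
        }
        # Denied attempts are logged too, before the error is raised.
        self.log.append(json.dumps(entry))
        if not allowed:
            raise PermissionError(f"role {role!r} may not call the model")
        return self.call_model(prompt)


def fake_model(prompt: str) -> str:
    """Placeholder model that reports the prompt length; replace with a real client."""
    return f"received {len(prompt)} characters"


if __name__ == "__main__":
    model = AuditedModel(call_model=fake_model, region="westeurope")
    print(model.ask("alice", "analyst", "Summarise the Q3 report"))
    try:
        model.ask("bob", "intern", "Show me payroll data")
    except PermissionError as err:
        print("denied:", err)
    print(len(model.log), "audit entries written")
```

In practice the in-memory list would be replaced by whatever logging and monitoring service the organisation already uses; the point of the sketch is only that every request, allowed or denied, leaves a record.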

At the same time, regulators are stepping up pressure for transparency about training data, model behaviour and risk-mitigation measures. High-profile deals like this will therefore be closely watched by policy-makers in the EU, US and Asia.

---

What this means for organisations looking to scale with AI

For organisations currently adopting generative AI, this news underlines two concrete trends in the market. First, the major cloud platforms will continue to invest heavily in multiple model partners simultaneously. Second, the expectation is growing that serious AI solutions will be built on specialised, high-performance compute infrastructure.

At the same time, there is still room for individual choices around architecture, governance and model strategy. Organisations would be well advised to design their AI landscape so that they:

are not locked into a single model but can switch between different providers

have clear frameworks for data governance and access control

can weigh performance, cost and quality for each use case

can integrate future innovations, such as new generations of Claude or other models, relatively easily
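One way to picture the first and third points above is a thin routing layer that hides each provider behind the same call signature and picks a model per use case. The sketch below is an illustration under stated assumptions only: `ModelOption`, `ModelRouter`, the provider names and the cost and quality figures are all invented for the example, and the model calls are stubs rather than real client libraries.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ModelOption:
    name: str                  # illustrative label, not a real model ID
    cost_per_1k_tokens: float  # made-up figure for the example
    quality: float             # made-up score between 0 and 1
    call: Callable[[str], str]


class ModelRouter:
    """Keeps providers interchangeable behind one interface."""

    def __init__(self) -> None:
        self._options: Dict[str, ModelOption] = {}

    def register(self, option: ModelOption) -> None:
        self._options[option.name] = option

    def pick(self, min_quality: float, max_cost: float) -> ModelOption:
        # Keep only models that meet the quality bar and fit the budget.
        candidates = [
            o for o in self._options.values()
            if o.quality >= min_quality and o.cost_per_1k_tokens <= max_cost
        ]
        if not candidates:
            raise LookupError("no registered model satisfies the constraints")
        # Among acceptable models, choose the cheapest one.
        return min(candidates, key=lambda o: o.cost_per_1k_tokens)

    def ask(self, prompt: str, min_quality: float, max_cost: float) -> str:
        return self.pick(min_quality, max_cost).call(prompt)


if __name__ == "__main__":
    router = ModelRouter()
    router.register(ModelOption("provider-a-large", 3.0, 0.9, lambda p: f"[A] {p}"))
    router.register(ModelOption("provider-b-small", 0.5, 0.6, lambda p: f"[B] {p}"))

    # A demanding use case accepts higher cost for higher quality.
    print(router.ask("Review this contract", min_quality=0.8, max_cost=5.0))
    # A simple use case falls through to the cheaper model.
    print(router.ask("Tag this ticket", min_quality=0.5, max_cost=5.0))
```

Because callers only depend on `ask`, adding a new model generation or swapping a provider becomes a matter of registering another `ModelOption` rather than rewriting application code.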

This new collaboration between Microsoft, NVIDIA and Anthropic shows that the market is rapidly evolving towards a mature ecosystem with multiple strong models, powerful infrastructure and a growing set of enterprise features around governance and compliance.