Generative AI is a form of artificial intelligence that can create new data, such as text, images, audio or code, based on patterns it has learned from existing information.

Generative AI overview

Generative AI is a branch of artificial intelligence that focuses on automatically creating new content. Instead of merely making predictions or classifications, generative AI produces fresh text, images, video, audio or source code based on patterns learned from large datasets.

Key topics in this article include the definition of generative AI, the underlying models and techniques, the main areas of application, and the ethical and legal considerations. We also briefly discuss developments in regulation and safety.

By the end of this article you will have a structured understanding of:

What generative AI is and how it relates to other forms of AI

Which model types are widely used, such as large language models and generative image models

Important business and technical applications of generative AI

Risks surrounding privacy, bias and output reliability

The role of regulation and guidelines when using generative AI

Understanding and defining generative AI

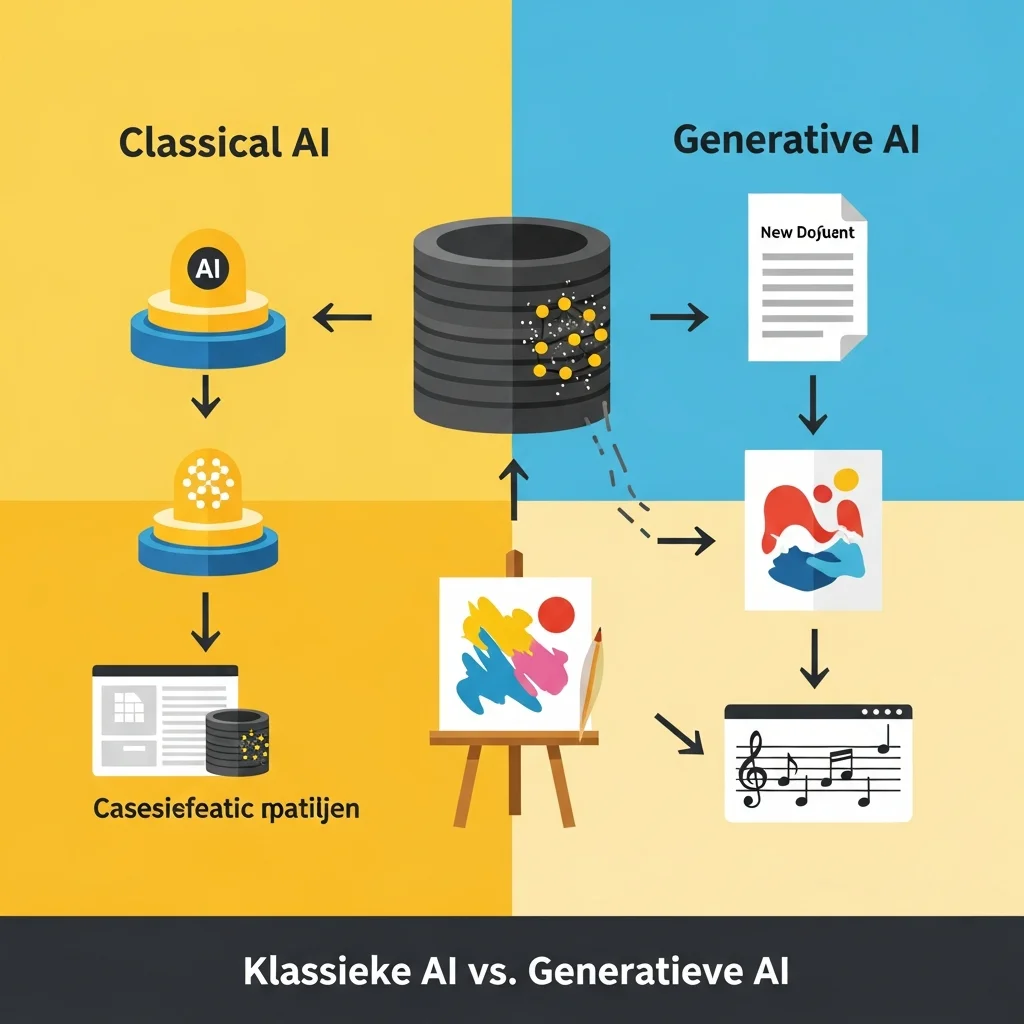

Generative AI is an umbrella term for algorithms capable of generating new data that resemble the data on which they were trained. Whereas traditional AI models often focus on classification or prediction, such as detecting spam or estimating a price, generative AI is creative in the sense that it produces new examples.

In practice, this means a generative model can, for instance, write a new piece of natural-language text, create an image based on a description, compose a short music clip or generate source code that solves a particular problem. The output is generally not copied verbatim from the training data; it is newly sampled from the patterns the model has learned, although models can occasionally reproduce passages they have memorised.

Generative AI typically relies on deep neural networks that can model complex relationships in data. Well-known examples include large language models that generate text, image models that create photorealistic pictures from text prompts, and multimodal models that combine text, images, audio and video.

Models and architectures within generative AI

Generative AI uses various model architectures, each with its own strengths. A key concept is probabilistic modelling, where the model learns which patterns and combinations of features are likely to occur in the training data.

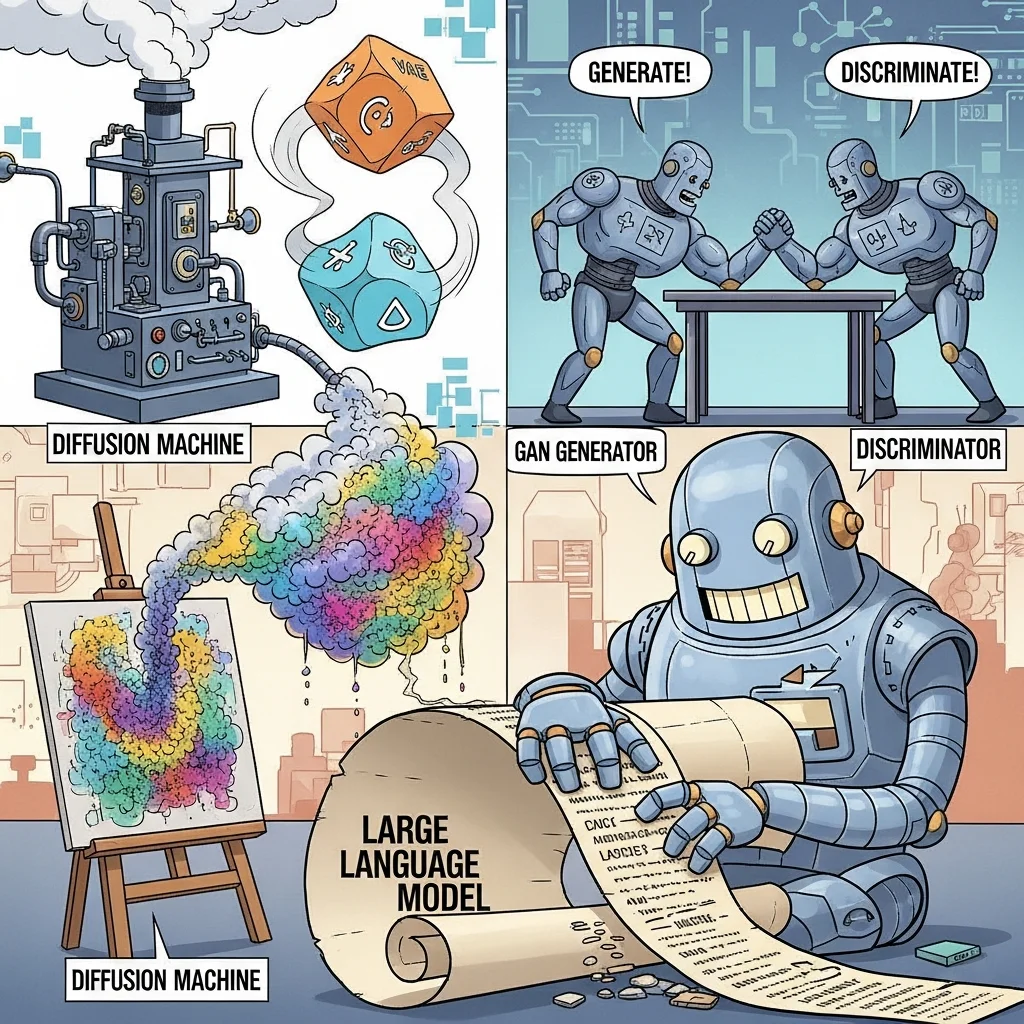

A first major category is autoregressive models for text. These models predict the next word or token in a sequence step by step. Large language models belong to this category. Trained on vast amounts of text, they learn patterns in grammar, semantics, style and knowledge. By iteratively predicting the next token, they generate complete sentences and documents.
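
The step-by-step prediction described above can be illustrated with a deliberately tiny sketch. It is not how a large language model is implemented: it swaps the neural network for simple bigram counts (how often one word follows another) and uses a made-up one-sentence corpus. The function names `train_bigram` and `generate` are illustrative assumptions, not part of any library.

```python
import random
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for each token, how often each other token follows it."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, max_tokens, rng):
    """Build a sequence by repeatedly sampling the next token."""
    sequence = [start]
    for _ in range(max_tokens - 1):
        followers = counts.get(sequence[-1])
        if not followers:  # no known continuation, so stop early
            break
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Sample in proportion to how often each follower was observed.
        sequence.append(rng.choices(candidates, weights=weights, k=1)[0])
    return sequence

# Toy corpus, invented for illustration only.
corpus = "the cat sat on the mat and the dog sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

A real language model conditions on far more than the previous token and predicts over a vocabulary of sub-word tokens, but the generation loop has the same shape: predict a distribution, sample one token, append it, repeat.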

Another category comprises generative models for images and video, with diffusion models being particularly important. During training, noise is progressively added to images and the model learns to reverse that corruption. During generation, the model starts from random noise and refines it over multiple steps into a coherent picture that matches the supplied description. For video, a similar process is applied across consecutive frames.
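
The refinement loop can be sketched on a single number instead of an image. The example below is a toy under strong assumptions: the "data set" contains only one value (`target`), so the noise predictor that a real diffusion model would have to learn can be written down exactly (`oracle_noise_predictor`). The linear noise schedule, the deterministic DDIM-style update and all function names are choices made here for illustration, not details taken from this article.

```python
import math
import random

def alpha_bar_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, product = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean value with Gaussian noise."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * eps

def oracle_noise_predictor(x_t, alpha_bar, target):
    """Stand-in for a trained network; exact only because all data equals target."""
    return (x_t - math.sqrt(alpha_bar) * target) / math.sqrt(1.0 - alpha_bar)

def denoise(x_t, alpha_bars, target):
    """Reverse process: refine a noisy value step by step (deterministic update)."""
    for t in range(len(alpha_bars) - 1, -1, -1):
        eps_hat = oracle_noise_predictor(x_t, alpha_bars[t], target)
        # Estimate the clean value implied by the predicted noise.
        x0_hat = (x_t - math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(alpha_bars[t])
        # Move to the noise level of the previous step (no noise left at the end).
        prev = alpha_bars[t - 1] if t > 0 else 1.0
        x_t = math.sqrt(prev) * x0_hat + math.sqrt(1.0 - prev) * eps_hat
    return x_t

rng = random.Random(0)
alpha_bars = alpha_bar_schedule(steps=1000)
target = 3.0                                     # the single "image" in this toy
noisy = add_noise(target, alpha_bars[-1], rng)   # almost pure noise at the last step
restored = denoise(rng.gauss(0.0, 1.0), alpha_bars, target)
print(noisy, restored)
```

In a real system the oracle is replaced by a neural network trained to predict the added noise, usually conditioned on the text prompt, and the values being refined are image pixels or latent representations rather than one number.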

There are also variational autoencoders and generative adversarial networks. A variational autoencoder compresses input data into a latent representation and learns to reconstruct it. By sampling or varying points within that latent space, new examples can be generated. A generative adversarial network consists of a generator and a discriminator that are trained against each other: the generator tries to produce realistic data, while the discriminator tries to tell real from fake.
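
For readers who prefer notation, both ideas are commonly written as training objectives. The symbols are assumptions introduced here for illustration: $x$ is a training example, $z$ a latent or noise vector, $q_\phi(z \mid x)$ the encoder, $p_\theta(x \mid z)$ the decoder, $p(z)$ a prior over the latent space, $p_z$ the noise distribution fed to the generator, $G$ the generator and $D$ the discriminator. A variational autoencoder is usually trained to maximise the evidence lower bound (first line), and the original GAN formulation is usually stated as a minimax game (second line):

```latex
\mathcal{L}_{\text{VAE}}(x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big)

\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The first term of the VAE objective rewards accurate reconstruction, while the KL term keeps the latent space close to the prior so that sampled points decode into plausible examples. In the GAN objective, the discriminator pushes the value up by separating real from generated data, and the generator pushes it down by producing data the discriminator accepts as real.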

More recently, multimodal foundation models have gained prominence. These large models are trained on multiple data types at once, for example text, images and audio. They can answer textual questions about images, generate descriptions for video, or transcribe speech into written text and analyse it straight away.

Applications and use cases for generative AI

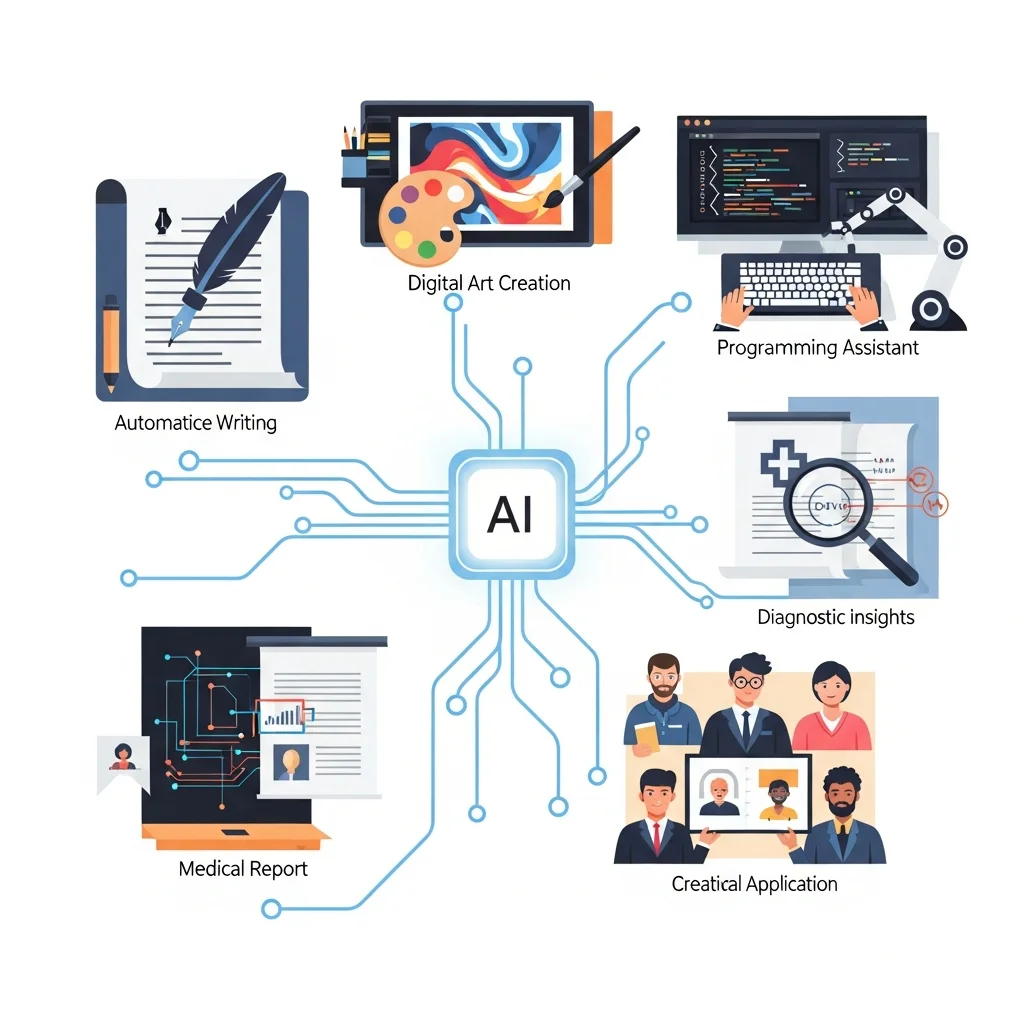

Generative AI is used across a wide range of domains, from consumer products to business-critical applications. In text processing, generative AI helps draft emails, reports and summaries. Models can analyse lengthy documents and produce concise overviews, or assist in structuring complex information.

In the creative sector, generative AI supports the creation of images, illustrations and video for marketing, entertainment and design. Text-to-image models translate descriptions into visuals that can then be further edited or combined. For video, generative models are used to create short clips, generate animations or automatically adapt audiovisual content to different audiences.

Generative AI has also found a clear role in software development. Code-generation models assist developers in writing source code, refactoring existing code and explaining complex algorithms. Rather than producing raw code without context, modern tools are often integrated into development environments where they provide suggestions based on the codebase and documentation.

Sectors such as healthcare, law and finance are increasingly exploring generative AI for document analysis, scenario simulation and knowledge retrieval. Here, requirements around privacy, reliability and auditability are paramount. Regulators and supervisors stress that generative AI should only be used as a supportive tool, with human professionals remaining responsible for final decisions.

Risks, limitations and regulation of generative AI

Generative AI has significant limitations and risks that must be carefully managed. A core issue is that the output is statistically plausible but not guaranteed to be true or correct. When a model presents fabricated content as fact, this is known as hallucination. For example, a language model may produce convincing yet incorrect facts or invent fictitious references. In critical contexts, generative output should therefore be reviewed by a human or checked against reliable data sources.
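
One simple form of such a check is to compare what a model cites against a list of sources that have already been verified, and to route anything unknown to a human reviewer. The sketch below assumes the cited references have already been extracted from the output as plain strings; the function name `find_unverified_references` and the example titles are hypothetical.

```python
def find_unverified_references(cited, trusted_sources):
    """Return the cited references that are not in the trusted set.

    Matching is exact after trimming whitespace and lower-casing, so
    paraphrased or abbreviated titles will be flagged for review too.
    """
    trusted = {source.strip().lower() for source in trusted_sources}
    return [ref for ref in cited if ref.strip().lower() not in trusted]


# Hypothetical data for illustration only.
trusted_sources = ["Annual Report 2023", "Data Protection Policy v2"]
cited_by_model = ["annual report 2023", "Market Outlook 2031"]

needs_review = find_unverified_references(cited_by_model, trusted_sources)
print(needs_review)  # ['Market Outlook 2031']
```

A check like this only catches references that do not exist in the trusted list. It cannot confirm that a real source actually supports the claim attributed to it, which is why human review remains necessary.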

Generative models can also inherit or amplify existing biases in their training data. If certain groups or perspectives are under-represented, the generated text or images may contain bias. This raises concerns about fairness and non-discrimination. Curating training data, adding safety layers and conducting explicit evaluations all help mitigate these risks, although achieving complete neutrality is challenging in practice.
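
An explicit evaluation can start very simply, for example by measuring how often each group appears in a sample of generated outputs. The sketch below assumes each output has already been labelled with a group, by human annotators or a separate classifier. The function names and labels are hypothetical, and a real evaluation would need more careful metrics than a single gap.

```python
from collections import Counter

def representation_shares(labels, groups):
    """Share of outputs per expected group, including groups never seen."""
    counts = Counter(labels)
    total = len(labels)
    return {g: (counts[g] / total if total else 0.0) for g in groups}

def max_share_gap(shares):
    """Difference between the most and least represented group."""
    return max(shares.values()) - min(shares.values()) if shares else 0.0

# Hypothetical labels assigned to four generated outputs.
labels = ["group_a", "group_a", "group_a", "group_b"]
shares = representation_shares(labels, ["group_a", "group_b", "group_c"])
print(shares)
print(max_share_gap(shares))
```

Passing the expected groups explicitly matters: a group that never appears in the output would otherwise be invisible in the counts, even though its absence is exactly what the evaluation should reveal.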

Privacy and intellectual property present further concerns. Training data may contain personal information or copyrighted content, raising questions about the legality of collecting and using such data, as well as the status of generated output. Various jurisdictions are working on frameworks governing how generative AI may be developed and used, including requirements for transparency and attribution.

Regulation and guidelines are playing an ever-greater role. Policymakers emphasise the need for explainability, risk assessment and appropriate human oversight, especially in high-risk applications such as medical decisions or the assessment of individuals. Organisations are also encouraged to establish internal governance for the use of generative AI, for instance through data-protection policies, ethical reviews and incident reporting.

What is generative AI in simple terms?

Generative AI is a form of artificial intelligence that can create new content (such as text, images or music) that resembles the examples in its training data. The model learns patterns from large amounts of information and uses that knowledge to generate new variants that are not direct copies.

How does generative AI differ from classical AI systems?

Classical AI systems often focus on classification or prediction, such as recognising objects in an image or forecasting a number. Generative AI, by contrast, produces new examples—for instance a wholly new image or a passage of text. The underlying techniques are related, but the goal is different: creation rather than mere recognition.

What types of generative AI models exist?

Common generative models include large language models for text, diffusion models for images and video, variational autoencoders and generative adversarial networks. There are also multimodal foundation models that work with multiple data types such as text, images and audio. These models share the characteristic of learning probabilistic patterns from data and using them to generate new output.

Is the output of generative AI always reliable and factually correct?

No. Generative AI produces statistically plausible content, but it is not automatically true or complete. Models can fabricate information or mix up details, a behaviour known as hallucination. Human oversight is therefore essential, especially in legal, financial or medical settings. In practice, generative systems are often combined with trustworthy data sources to better verify facts.

What risks are associated with using generative AI?

Key risks include inaccurate or misleading output, the inheritance or amplification of bias from training data, potential breaches of privacy or copyright, and misuse scenarios such as deepfakes. Organisations may also become dependent on systems that are not fully explainable. These risks require technical mitigation, clear usage guidelines and appropriate governance.

Can generative AI be used for sensitive information, such as patient records or legal files?

Using generative AI with sensitive information demands strict safeguards regarding privacy, security and the lawful processing of data. Additional rules often apply to special categories of personal data and proprietary information. Regulators in many sectors recommend deploying generative AI only as a supportive tool, always in combination with human review. In practice, a careful, step-by-step introduction is generally considered the most responsible approach.

How does generative AI relate to future regulation?

AI regulation is evolving rapidly and often contains specific provisions for generative systems. Expected obligations include transparency about AI use, clear labelling that content has been AI-generated, risk assessments and measures to prevent misuse. Organisations deploying generative AI are encouraged to establish internal policies and documentation now, so they can demonstrate responsible use of the technology.

Which skills are needed to work effectively with generative AI?

Users need a basic understanding of how generative models work, knowledge of their limitations and risks, and the ability to craft clear prompts—often called prompt engineering. Domain expertise and critical thinking are also important, as people must evaluate and refine the generated output. In technical roles, additional skills such as machine learning, data engineering and security are required to develop and integrate systems responsibly.