Large Language Models (LLMs)

Overview

Large Language Models (LLMs) are a category of generative AI models trained to understand and produce natural language. They rely on statistical patterns in vast text corpora and use neural network architectures, usually transformers, to predict and generate text based on a given input. LLMs are used for a wide range of tasks, such as chat interfaces, translation, summarisation, code generation, search functionality and content creation.

Since around 2020, LLMs have become a core technology within artificial intelligence. Thanks to scaling in model parameters, training data and compute power, combined with optimisation techniques and instruction tuning, LLMs have reached a level where they perform on par with—or better than—classical rule-based or narrow machine-learning systems for many text-based tasks. At the same time, they introduce new challenges in reliability, interpretability, safety and ethics.

Definition and key concepts

A large language model is a probabilistic model that, given a sequence of tokens (words, sub-words or characters), predicts the probability distribution of the next token in the sequence. By repeatedly predicting the next token and appending it to the context, the model can generate seemingly coherent text of arbitrary length. The term "large" generally refers to the scale of the model—hundreds of millions to hundreds of billions of parameters—and the extremely large training datasets.

Important LLM concepts include:

Token: the elementary unit of text on which the model is trained, often a sub-word segment or character.

Parameters: the weights in the neural network learned during training.

Context window: the maximum number of tokens the model can process at once. Modern models have context windows reaching into the hundreds of thousands of tokens.

Prompt: the textual input provided by the user, including instructions, examples and context.

Logits and

softmax: internal representations the model uses to assign probabilities to possible next tokens.

Architecture and operation

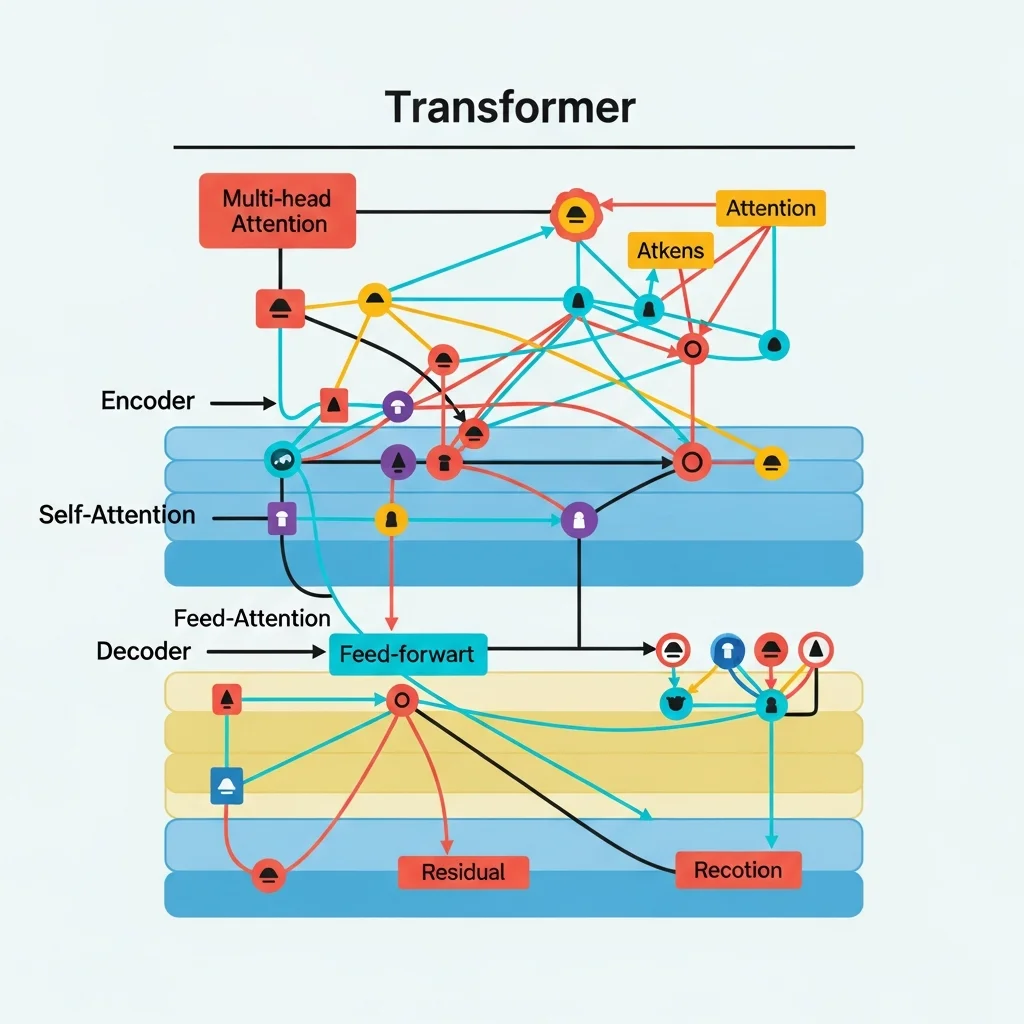

Transformer architecture

Most modern LLMs are based on the transformer architecture. This architecture uses self-attention to model relationships between tokens in a sequence. Unlike earlier recurrent networks that process tokens sequentially, a transformer can process all tokens in the input in parallel and model their inter-dependencies explicitly.

Self-attention works by computing three representations for each token—known as queries, keys and values. By computing similarities between queries and keys, the model can determine which other tokens are important when processing a specific token. The values are weighted based on these similarities and then combined to form a new representation. This mechanism is stacked across multiple layers, often combined with feed-forward network layers and normalisation techniques.

Training process

The training process of an LLM typically consists of two main phases. In the first phase, pre-training, the model is taught to predict the next token in a very large text corpus. This is done via self-supervision, without explicit labels, because the text itself provides the training targets. The standard training objective is a language-model objective, where the model learns to maximise the probability of the correct subsequent tokens and minimise the training loss (e.g. cross-entropy).

In the second phase—often called fine-tuning or instruction tuning—the model is adapted for specific tasks or use cases. The model may, for example, be trained on question-answer pairs, dialogue data or domain-specific documents. Modern LLMs are frequently refined further using techniques that combine human feedback with reinforcement learning or similar methods. These techniques aim to align the output more closely with human preferences, safety requirements and task relevance.

Inference and sampling

During use—also called inference—the model receives a prompt as input and generates output tokens one by one. For each step the model computes a probability distribution over all possible tokens. The actual choice can be deterministic, for instance by always selecting the most probable token, or stochastic via sampling.

When sampling, techniques such as temperature, top-k sampling and top-p sampling are used to balance predictability and creativity. Lower temperature values and restrictive sampling settings yield predictable but potentially less creative text, whereas higher values produce more variation and sometimes unexpected results.

Applications

LLMs are used across a wide spectrum of domains in which language or code is central. Common applications include:

Conversation and chat interfaces, for example customer service, internal helpdesks or personal assistants.

Text generation, such as writing articles, reports, product descriptions or marketing copy.

Summarising documents, emails, legal texts or research papers.

Translation between natural languages.

Code assistance, such as generating, explaining or refactoring programme code.

Information retrieval and search functionality, often combined with retrieval techniques that consult external data sources.

Analysis of sentiment, intent and entities in text.

In many modern systems, LLMs are combined with additional components. Examples include retrieval-augmented generation, where the model retrieves documents in real time and includes them in the context, and tool use, where the model calls external functions, such as databases, calculators or internal APIs. This increases the timeliness and accuracy of answers because the model does not have to rely solely on the knowledge stored in its parameters during training.

Model types and examples

Large language models come in different variants depending on architecture, purpose and licensing model. Broadly speaking, there are closed, commercial models and open-source models.

Closed models are offered as cloud services by large technology companies and are generally not freely downloadable. They are continuously updated and often provide high performance, broad multimodal capabilities and integrated safety layers. Open-source models are made publicly available so organisations can run and adapt them on their own infrastructure. The performance of open-source models has improved rapidly in recent years, making them equivalent or sufficient for many business applications.

Besides text-based LLMs, there are multimodal variants that can process images, audio or video in addition to text. Such models can, for instance, analyse and describe an image, or turn a text prompt into an image or video sequence. The underlying principles largely remain the same, but the inputs and internal representations are extended to multiple modalities.

Advantages

LLMs offer several advantages over traditional approaches to natural-language processing. A key benefit is generalisation. By training on highly diverse data, LLMs can perform tasks that are not explicitly programmed but implicitly learned from the data. This enables so-called zero-shot and few-shot learning, where the model can produce meaningful output with little or no task-specific examples.

LLMs can also unify multiple tasks within a single model. Whereas previously each task required a separate model—such as for sentiment analysis, translation or question answering—an LLM can handle all these tasks based on prompt instructions. This simplifies architectures and shortens development time.

Another advantage is flexibility in interaction. LLMs support natural language as an interface, allowing users to operate technical systems via ordinary language commands. This lowers the barrier for non-technical users and makes complex workflows more accessible.

Limitations

Despite their power, LLMs have clear limitations. A fundamental limitation is that they possess no explicit understanding of the world but operate as probabilistic pattern recognisers. This can lead to hallucinations—situations where the model produces convincing yet incorrect or fabricated information. Hallucinations are problematic in domains where accuracy is essential, such as medicine, law or finance.

Another limitation is the lack of up-to-date knowledge. LLMs are trained on data up to a certain point in time. Without integration with external data sources or periodic re-training, they do not know developments after that point, except through additional fine-tuning. Consequently, LLMs are often integrated with search mechanisms or knowledge bases to deliver current information.

LLMs can also exhibit bias because they learn from text that contains human prejudices or skewed representations. This can manifest as stereotypical or discriminatory output. Researchers and providers attempt to mitigate this with dataset curation, alignment procedures and filters, but complete neutrality or absence of bias is difficult to guarantee in practice.

LLMs are computationally intensive as well. Both training and inference require substantial hardware, often in the form of GPUs or other accelerators. Although optimisation techniques such as quantisation, distillation and model pruning reduce costs, scalability remains a key concern, particularly for high-throughput or low-latency applications.

Safety, ethics and governance

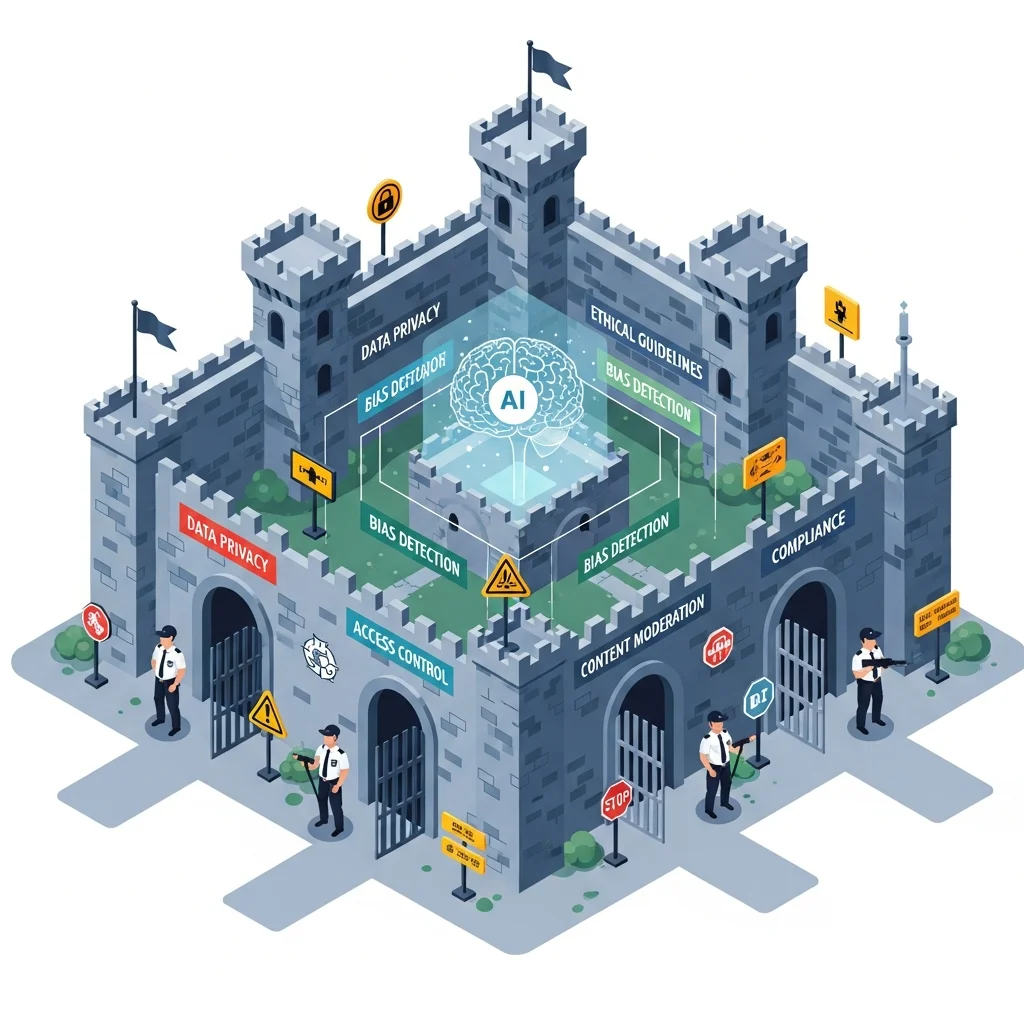

Deploying LLMs raises important questions about safety, ethics and governance. On the one hand, LLMs can be misused to produce misleading information, spam, phishing messages or advanced social-engineering attacks. On the other hand, LLMs may generate inappropriate, harmful or illegal content if not adequately safeguarded.

Organisations using LLMs therefore implement multiple safety layers. Examples include content filters on prompt and output, policies restricting certain topics, monitoring of usage patterns and technical measures to block, for instance, the generation of malware code or instructions for illegal activities. Guidelines for responsible use are also established, especially in high-risk sectors such as healthcare, legal services and critical infrastructure.

At the policy level, regulatory initiatives are increasing, such as legal frameworks for AI that require transparency, risk assessment and documentation. For LLMs this involves explainability, provenance of training data, privacy, copyright and mechanisms for complaints and redress. Governance around LLMs encompasses processes for assessment, approval, monitoring and incident response.

Techniques for improvement and control

Prompt engineering

Prompt engineering is the systematic design and structuring of input prompts to obtain better model output. Instead of a brief question, the prompt is enriched with context, instructions, examples and constraints. This can improve the accuracy, consistency and safety of answers without changing the underlying model. Examples include specifying role and style, explicitly requesting step-by-step reasoning, or imposing format rules—for example, for JSON output.

Retrieval-augmented generation

Retrieval-augmented generation, often abbreviated as RAG, combines an LLM with a search or storage system. First, based on the user's question, relevant documents are retrieved from a database, search engine or vector store. These documents are summarised and added as additional context to the prompt. The LLM then generates an answer that explicitly references these sources. In this way, knowledge remains up-to-date and the risk of hallucinations is reduced, provided the underlying sources are reliable.

Fine-tuning and adapter techniques

In addition to basic instruction tuning, LLMs are often further adapted for specific organisations or domains. This can be done through full fine-tuning, where all parameters are updated, or through parameter-efficient methods such as LoRA or adapters. These methods add extra layers or parameter blocks while keeping the original model largely frozen. This lowers computational costs and makes it possible to maintain multiple customised variants of the same base model.

Evaluation and benchmarks

The quality of LLMs is assessed using benchmarks and evaluation sets. These sets contain tasks such as multiple-choice questions, reasoning problems, translation or coding exercises. Outcomes are measured with metrics such as accuracy, F1 score or BLEU score, depending on the task-specific criterion. There are generic benchmark collections that cover different domains and difficulty levels, as well as domain-specific benchmarks, for example for legal or medical language.

In addition to quantitative benchmarks, human evaluations are conducted. Human reviewers assess the output on criteria such as correctness, coherence, style, usability and safety. Human evaluations are labour-intensive but important for measuring subtle quality aspects that are hard to capture in numerical metrics.

Use in business environments

In business contexts, LLMs are increasingly integrated into software architectures as a central language layer. They then act as an interpretation module between users, internal systems and data sources. Typical scenarios include automating customer service, supporting knowledge workers, generating business documents and optimising marketing and communication.

For production use within organisations, additional requirements come into play. These include data protection, logging and audit trail, management of model versions, monitoring of performance and latency, and integration with existing identity- and access-management systems. A hybrid approach is often chosen, combining generic commercial models with domain-specific open-source models or internal fine-tunes, depending on data sensitivity and the desired level of control.

In summary, large language models constitute a central building block in the current generation of AI applications. They offer broad possibilities for language- and knowledge-related tasks, but their limitations, risks and governance require conscious handling.