Google expands Gemini with new My Stuff hub for generated content


Google introduces central hub for Gemini output

Google is rolling out a new My Stuff hub in the Gemini app, where users can manage all their Gemini-generated images, videos and Canvas creations in one place. The feature addresses a common frustration: tracking down and reusing AI content that is scattered across individual chats, projects or devices.

The update fits into a wider trend: major AI providers are not only making their models more powerful, but also easier to organise and manage within the daily workflow. For organisations experimenting with generative AI, it means that governance and oversight are increasingly supported by the platforms themselves.

Features of the My Stuff hub

In the My Stuff hub, Gemini automatically groups output by content type. Users get separate sections for images, videos and Canvas creations, among others, instead of having everything spread across individual conversations or prompts. From this central view, content can be reused more quickly in new prompts or shared with other applications.

The integration with Gemini Canvas, Google's visual workspace, plays an important role here. In Canvas, users can combine whiteboard-style documents with text, diagrams and AI-generated elements. The new hub makes it easier to pick up earlier Canvas projects or reuse elements from them in other contexts.

Text output also remains accessible, but the update clearly focuses on media that tends to become fragmented in professional workflows, such as visuals for presentations, social posts or product concepts.

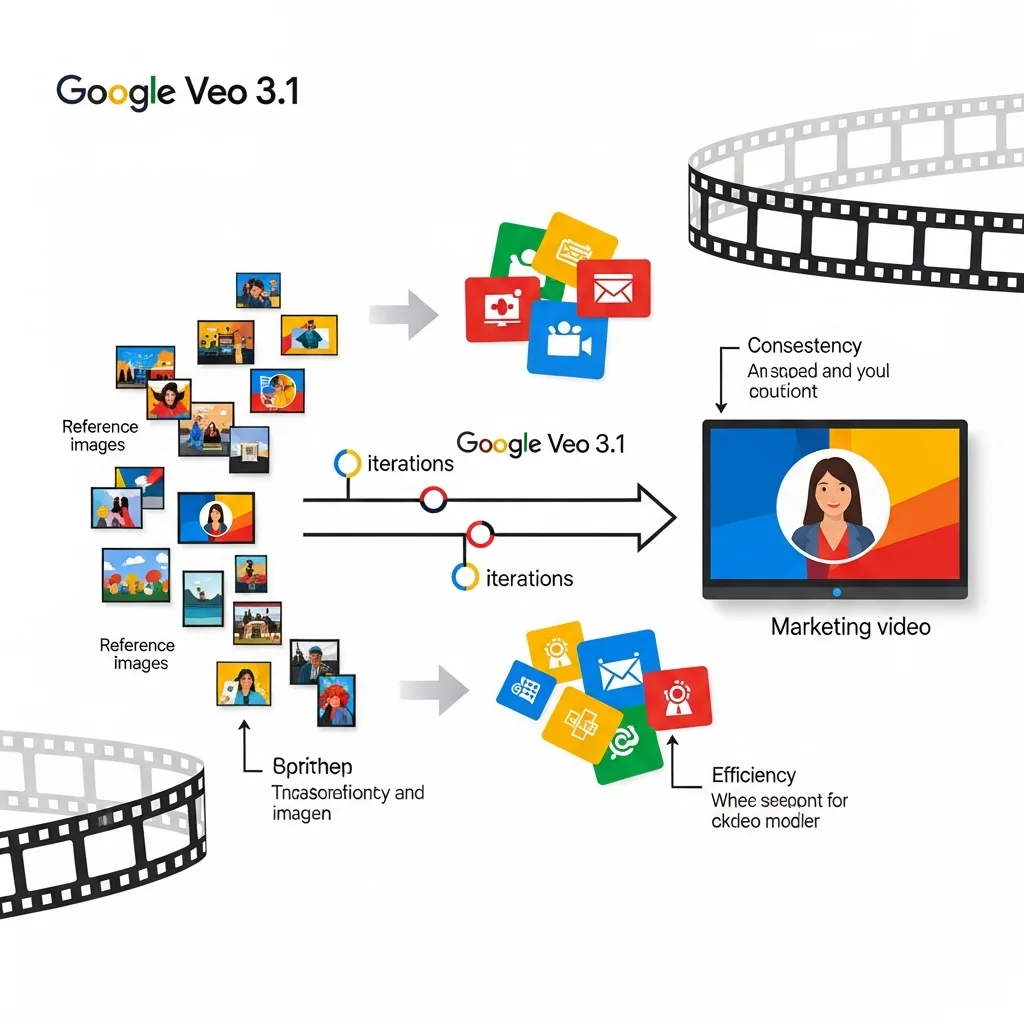

Role of Veo 3.1 in video and reference-guided content

Alongside the introduction of the My Stuff hub, Google is investing in improved video capabilities through Veo 3.1. This video model is designed for reference-guided content, where users supply their own footage or style elements to make the output visually more consistent. Think of marketing teams that use brand assets, product shots or existing campaign imagery as the basis for new variants.

The combination of Veo 3.1 and a central My Stuff hub should speed up the iterative creation process. Instead of isolated experimental videos, a more manageable library emerges in which reference images, earlier versions and final output stay together. That makes it easier to produce A/B variants, retrieve older versions or reuse material in another campaign.

For developers and integrators it is especially interesting that Google is explicitly positioning these video features as part of the broader Gemini stack, where multimodal input and output converge. This underlines the move towards AI platforms that handle text, images and video in an integrated way, with a shared content layer as a foundation.

Focus on reuse, consistency and governance

With the My Stuff hub, Google addresses not only ease of use but also a growing organisational question: how do you keep control over the explosion of AI content within a team or company? Without central storage and clear structure, the origin of assets quickly becomes unclear, as does which version is used where.

A central hub makes it easier for teams to:

- find previous iterations to use as a reference during audits or quality checks
- apply assets consistently across different channels, because the same source files remain accessible
- enforce internal guidelines for branding and tone, because teams can quickly see which content has already been approved or successfully used

The update therefore aligns with a movement emerging in other AI platforms as well, where the focus shifts from model capabilities alone to tooling for managing the lifecycle of AI content. In environments that use AI intensively for marketing, document generation or product development, this is a first step towards more mature content governance.

Relevance for companies working with Gemini

Companies already experimenting with Gemini in workflows for marketing, documentation or product design now have a concrete tool to streamline daily practice. Employees who previously relied on their own folder structures, screenshots or manual exports to store AI output can now find their material directly within the Gemini environment.

For organisations considering scaling up their use of generative AI, the announcement signals that major providers are taking the management of AI content more seriously. This can lower the barrier to deploying generative models structurally, because the tooling itself helps mitigate the risks around fragmentation, version control and findability.

An additional effect is that integration with existing cloud environments and productivity tools becomes easier. Because Gemini output is organised more consistently, IT teams and developers can build targeted integrations with document management systems, DAM systems or internal dashboards, without first having to consolidate loose files from disparate sources.

Video and demonstrations

International coverage of the update mainly features short Google demonstrations that walk through the workflow with My Stuff and Veo 3.1. They show how users generate a series of images, retrieve them in the hub and then use them as the basis for new videos or presentation material.

An explanatory video for this update is available on YouTube, among other places.

The combination of visual examples and a central content hub illustrates how generative AI is evolving from isolated experiments into an integrated part of the everyday digital workplace.