Japanese coastal city apologizes after AI bear alert: a wake-up call for public safety

A Japanese coastal city caused nationwide uproar this week after issuing an official warning about a loose bear, based on a photo entirely created by generative AI. The incident is forcing governments worldwide to take a much sharper look at verification, protocols, and security around AI in control rooms and crisis communication.

The warning was distributed through official channels, telling residents to stay indoors due to a bear allegedly spotted near the coast. It later turned out that the image used by authorities as evidence was not a field photo, but an image entirely constructed by generative AI. The city has since publicly apologized.

What exactly went wrong

According to Japanese media, the AI image had ended up in an internal workflow as an illustrative example, after which it was mistakenly interpreted as actual evidence. The combination of time pressure, limited verification, and a lack of clear AI protocols meant that the warning went live without additional checks.

Notably, no verification based on source metadata or location data was performed. EXIF data, GPS information, and independent source confirmation were not systematically checked, even though such checks are increasingly expected as standard practice in modern control rooms and security chains that handle visual information.
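As an illustration of the kind of metadata check mentioned above, the following minimal Python sketch tests whether a JPEG file carries an Exif segment at all. The function name `has_exif_segment` is hypothetical, and the parser is deliberately simplified. It is not a description of what the city or any control room actually runs. Missing metadata does not prove that an image is AI-generated, and present metadata does not prove authenticity, so the result is only a reason to look more closely.

```python
import struct


def has_exif_segment(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an APP1 segment tagged as Exif.

    Simplified walker over the header segments that precede the image data.
    Hypothetical helper for illustration, not a full JPEG parser.
    """
    if data[:2] != b"\xff\xd8":              # no start-of-image marker: not a JPEG
        return False
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:                # every segment must start with 0xFF
            return False
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):           # start of scan or end of image
            return False
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:                       # malformed length field
            return False
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length                    # jump to the next segment
    return False
```

In practice an operator would read the file with `open(path, "rb").read()` and treat a `False` result as a prompt to ask for the original file or an independent confirmation, not as a verdict.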

The incident shows how vulnerable existing processes are when AI images and deepfakes enter government and emergency service systems without clear marking or checks.

Lessons for European municipalities and safety regions

For European municipalities and safety regions, this incident is a stark reminder that AI introduces not only opportunities but also new risks in the information flow to citizens.

Lack of AI source labeling and traceability. Many generative image systems offer capabilities for watermarks, provenance metadata, or cryptographic signatures, but in practice these are often not mandatory or activated by default. Without clear labeling, an AI image can easily end up as an authentic photo in an operational system.

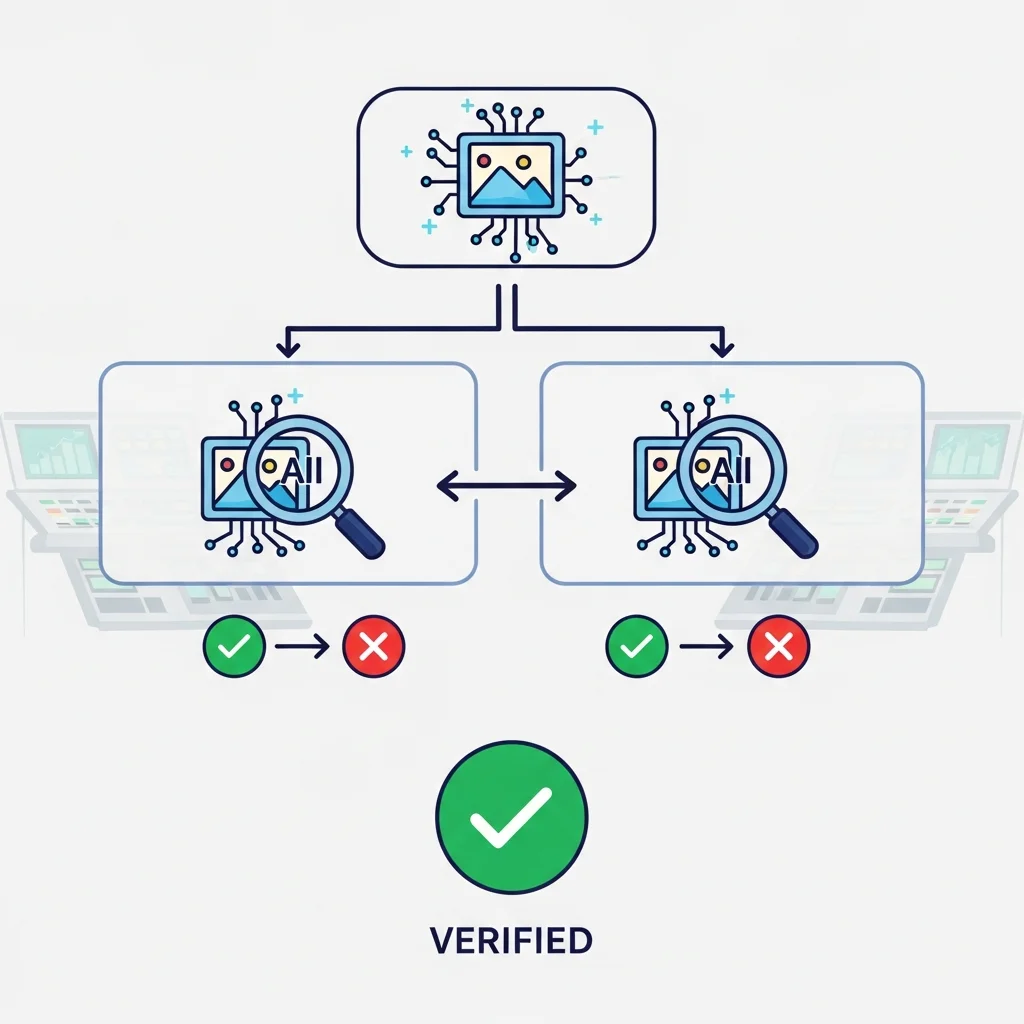

Insufficient two-layer control on visual information. Many organizations have a four-eyes principle for decisions, but not explicitly for evidentiary images. Visuals are then considered neutral or self-evident, while AI can cause the most confusion precisely in this area.

Missing AI awareness among non-technical teams. Local governments, police communications, communications departments, and control rooms increasingly work with digital channels, but AI training and awareness are lagging behind. Staff often do not recognize deepfake patterns, composition artifacts, or inconsistencies in light and perspective.

Combined, these gaps pave the way for errors like the one in Japan, and also for malicious actors who use deepfake images to sow panic, influence policy, or conduct disinformation campaigns.

From incident to policy: which protocols are still missing

The incident in Japan directly touches on current discussions about AI governance, both in Europe and beyond. Within the EU AI Act and related regulations, the emphasis is on transparency, risk classification, and human oversight. Yet many concrete operational protocols at the level of municipalities, safety regions, and implementing organizations have not yet been developed.

Important gaps include:

Formal guidelines for the use of generative images in government communication. In many countries, there are rules for privacy and archiving, but hardly any specific rules for AI-created images, especially not for emergency communication or public warnings.

Standard AI checklists for control rooms and press communications. Think of mandatory questions such as: What is the source of this image? Has technical verification been done? Is it known whether an AI generator was used? Is alternative evidence available?

Technical tooling for automatic detection and labeling. A growing number of tools can recognize AI-generated images based on signatures or typical patterns, but implementation at local governments and emergency services remains limited.
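The checklist questions listed above could be encoded as a simple gate in a publishing workflow. The sketch below is one possible shape under stated assumptions: the names `ImageChecklist`, `open_items`, and `may_publish` are hypothetical, and the rule that every question must be resolved before publication is an illustrative policy choice, not an existing standard.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ImageChecklist:
    """Answers to the four checklist questions for one image (hypothetical model)."""
    source: Optional[str] = None          # who supplied the image; None if unknown
    technically_verified: bool = False    # a metadata or location check was performed
    ai_generated: Optional[bool] = None   # None means "not yet determined"
    alternative_evidence: bool = False    # independent confirmation exists


def open_items(check: ImageChecklist) -> List[str]:
    """List every checklist question that still blocks publication."""
    items = []
    if not check.source:
        items.append("source of the image is unknown")
    if not check.technically_verified:
        items.append("no technical verification has been done")
    if check.ai_generated is None:
        items.append("use of an AI generator has not been determined")
    elif check.ai_generated:
        items.append("image is AI-generated and cannot serve as evidence")
    if not check.alternative_evidence:
        items.append("no alternative evidence is available")
    return items


def may_publish(check: ImageChecklist) -> bool:
    """Assumed policy: publish only when no checklist item remains open."""
    return not open_items(check)
```

A default `ImageChecklist()` leaves four items open, so `may_publish` returns `False` until someone actively answers each question. This is the four-eyes idea applied to images: the default state is "not cleared".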

The Japanese incident is expected to put new pressure on regulators and legislators to develop more concrete frameworks for this, especially now that generative AI models are rapidly improving and producing increasingly realistic images.

Consequences for public safety and trust

A false alarm about a loose bear may seem relatively harmless, but the implications for public safety are significant. If citizens are more frequently confronted with reports that later turn out to be incorrect, trust in official warnings can quickly erode.

In case of real danger, a warning may be taken less seriously because people remember previous errors.

In doubtful cases, confusion can arise, especially if social media spreads alternative images or stories, which further complicates decision-making in crisis situations.

Moreover, the incident shows how easily AI systems can play a role in the safety chain, even if no conscious choice has been made to deploy AI. A single incorrectly stored generative image or a loose illustration in an internal document can ultimately result in a public warning with societal impact.

Relevance for AI developers and providers

For developers of generative models and AI platforms, this incident is yet another signal that technical security measures are not optional, but an integral part of a responsible AI ecosystem. Relevant measures include:

Content provenance with cryptographic signatures that can prove an image was created by an AI model, including traceable information about the model and the tool used.

Visible and invisible watermarks that persist even with limited compression or editing, so AI detection tools can recognize them.

Built-in warnings and disclaimers on export, especially when images may be used in a high-impact context, such as news, politics, or public safety.
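To make the first measure above more concrete, the following Python sketch binds a provenance claim (model, tool, image hash) to an image and signs it. It is a simplification under stated assumptions: it uses a shared-key HMAC from the standard library as a stand-in, whereas real provenance schemes typically rely on public-key signatures and certificates so that verifiers need no secret. The function names and manifest fields are hypothetical and do not follow any particular standard.

```python
import hashlib
import hmac
import json


def make_manifest(image: bytes, model: str, tool: str, key: bytes) -> dict:
    """Build a signed claim stating which model and tool produced the image.

    HMAC-SHA256 is a stand-in for a real public-key signature (assumption).
    """
    claim = {
        "generator_model": model,
        "tool": tool,
        "image_sha256": hashlib.sha256(image).hexdigest(),
    }
    # Serialize with sorted keys so signer and verifier hash identical bytes.
    body = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(image: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claim is untampered and refers to exactly these image bytes."""
    claim = manifest["claim"]
    body = json.dumps(claim, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False                      # claim was altered or signed with another key
    return claim["image_sha256"] == hashlib.sha256(image).hexdigest()
```

Because the hash covers the exact bytes, any re-encoding or edit of the image makes verification fail. That is one reason provenance metadata is usually combined with watermarks that survive limited compression or editing.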

The incident in Japan will likely be cited in discussions between regulators, governments, and suppliers about the extent to which such measures should be mandatory for models available to broad public audiences.

European context: AI, deepfakes, and public sector

In Europe, various countries are working on guidelines for the use of generative AI in the public sector. Some countries have already drawn up internal policy documents that restrict the use of deepfakes and synthetic media in political campaigns or official communication.

Yet a fine-grained approach for local governments is often lacking. The combination of limited resources, high workload, and rapidly changing technology makes them especially vulnerable. The Japanese coastal city provides a concrete example with which to sharpen existing guidelines.

Expected discussions in the coming period include:

Should all images used by governments in emergency communication demonstrably undergo authenticity checks?

What role will national and European CERTs and regulators play in rolling out standard AI verification tooling?

To what extent will AI suppliers be required to offer provenance and detection tools when their models are used in a public context?

Although there is currently no uniform European standard, pressure to establish clear rules is visibly increasing with these types of incidents.

Conclusion

The false bear alert in a Japanese coastal city may seem at first glance like a curious, almost anecdotal error. In reality, it exposes a fundamental vulnerability in the way governments and public organizations currently deal with AI-generated images.

Without clear protocols, technical safeguards, and broad awareness among operational teams, generative AI systems can, even unintentionally, lead to misinformation from official sources. For policymakers, regulators, and AI developers worldwide, this incident is therefore a clear warning: the integration of AI in public safety processes requires more than innovation. It also requires strict governance, verifiable provenance, and robust control mechanisms.