MCP and API Data

Introduction

Model Context Protocol (MCP) is an open protocol for connecting large language model applications to external data and tools through a standardised interface.

MCP's goal is to enable AI assistants and agents to communicate with data sources, APIs, files and other systems in a uniform way. Instead of writing a bespoke integration for every connection, MCP provides a generic layer on top of which AI applications can operate.

API data comes up often in discussions of MCP, because in practice the protocol relies on the existing APIs of data sources. MCP does not prescribe how a system's internal API should look; rather, it describes how an AI application connects to an MCP server, how it discovers which functionality is available, which resources can be queried and how tools can be invoked securely.

This article explains the basic concepts, architecture and data flows of MCP, and shows how API data is exposed to AI models within this architecture.

Definition of MCP

MCP, short for Model Context Protocol, is an open protocol based on JSON-RPC 2.0 that provides a standard way to expose context and functionality from external systems to LLM applications. In essence, MCP:

uses a client–server model to exchange context data and tool functionality

defines JSON-based messages for discovery, lifecycle and tool execution

specifies a set of server features (resources, prompts and tools) and client features (sampling, roots and elicitation)

MCP itself is agnostic about which LLM is used. The protocol does not dictate how the model is controlled, only how host, client and server structure context and actions between them.
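As an illustration of the JSON-RPC 2.0 basis, the following minimal sketch builds the three message kinds the protocol uses: a request, a response and a notification. The helper names are hypothetical and chosen for this example only; the method names `tools/list` and `notifications/initialized` are taken from the MCP specification.

```python
import json
from itertools import count

_ids = count(1)  # simple source of unique request IDs for this sketch


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request; the id lets the reply be matched to it."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method, params=None):
    """Build a JSON-RPC 2.0 notification: it has no id, so no reply is expected."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request, result):
    """Build the success response for a request, echoing the request's id."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


request = make_request("tools/list")
response = make_result(request, {"tools": []})
notification = make_notification("notifications/initialized")

print(json.dumps(request))
print(json.dumps(response))
print(json.dumps(notification))
```

The only structural difference between a request and a notification is the `id` field, which is what allows a client to tell replies apart.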

Architecture and components

Participants in MCP

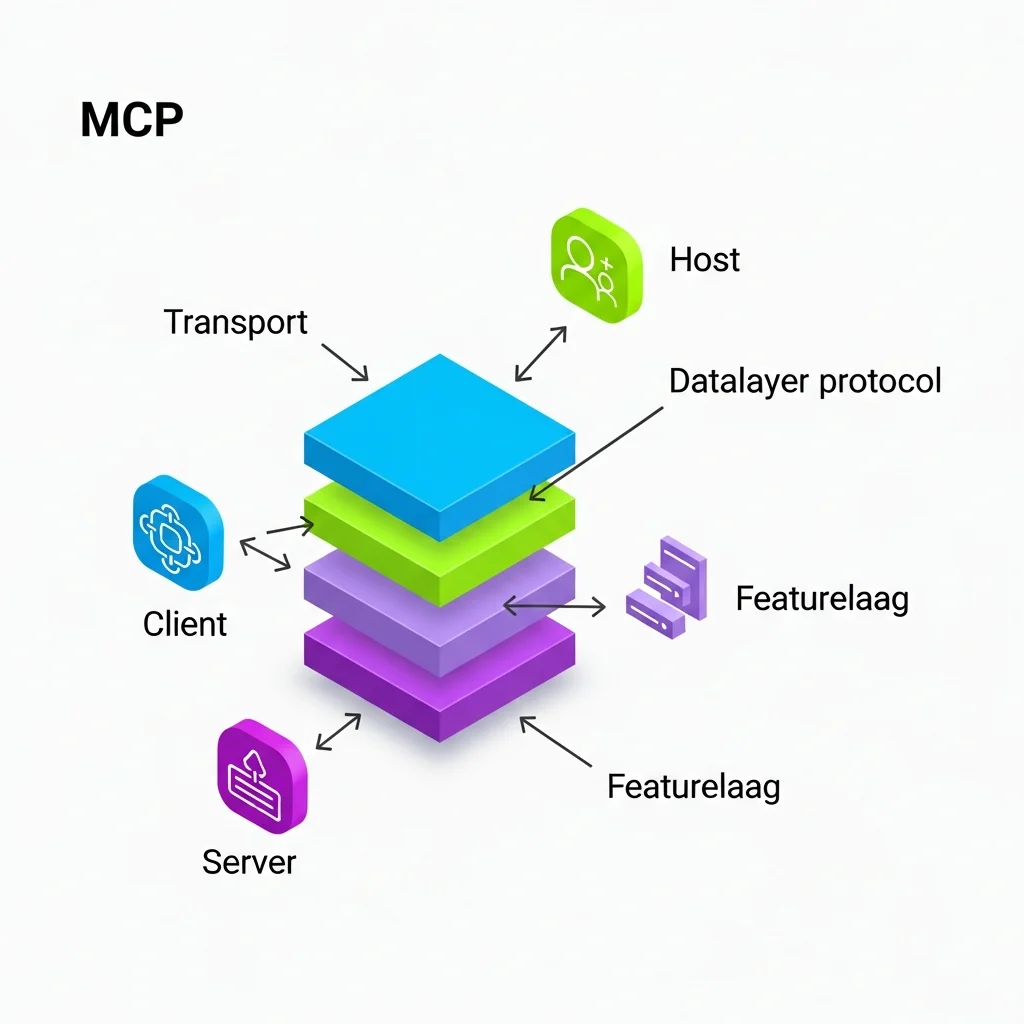

The MCP architecture defines three main roles that together determine the data flow.

MCP host: The host is the AI application that orchestrates operations, for example a desktop application, IDE plug-in or chat interface. The host launches MCP clients, maintains connections and presents the results to the user or to the underlying model.

MCP client: A client is a component within the host that manages a one-to-one connection with a specific MCP server. The client implements the core MCP rules, sends requests, receives responses and handles the translation to the host environment.

MCP server: The server is a program that exposes context data and tools to MCP clients. The server can run locally (for example via STDIO on the same machine) or remotely, such as a web service reachable over an HTTP-based transport like Streamable HTTP.

A host can contain multiple clients, and each client is linked to exactly one server. This allows an AI application to connect to several external systems simultaneously, each through its own MCP server.
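The one-to-one relationship between client and server can be sketched as a small data structure. This is only an illustration: the class names `McpHost` and `McpClient` are hypothetical, and `connect` merely flips a flag where a real client would open a transport.

```python
from dataclasses import dataclass, field


@dataclass
class McpClient:
    """One client owns exactly one server connection."""
    server_name: str
    connected: bool = False

    def connect(self):
        # A real client would open a transport here; this sketch only sets a flag.
        self.connected = True


@dataclass
class McpHost:
    """The host holds one client per configured server."""
    clients: dict = field(default_factory=dict)

    def add_server(self, server_name):
        if server_name in self.clients:
            raise ValueError(f"server already configured: {server_name}")
        client = McpClient(server_name)
        client.connect()
        self.clients[server_name] = client
        return client


host = McpHost()
host.add_server("crm")
host.add_server("files")

try:
    host.add_server("crm")  # a second client for the same server is rejected
    duplicate_rejected = False
except ValueError:
    duplicate_rejected = True
```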

Layers in the architecture

The MCP architecture can be divided into three conceptual layers.

Transport layer: This layer provides the communication channel between client and server. Examples include STDIO for local servers or HTTP-based transports for remote servers. The protocol does not mandate a specific transport technology, but assumes a bidirectional channel.

Data-layer protocol: In the data layer the JSON-RPC 2.0 messages, methods and parameters are defined for discovery, tool execution, resource access and notifications. Here servers announce their capabilities and clients formulate requests.

Feature layer: On top of that lie MCP features such as resources, tools and prompts, plus optional client features like sampling and roots. These features describe at a higher level which kinds of data and actions are available.
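To make the split between transport and data layer concrete, the sketch below frames JSON-RPC messages as newline-delimited JSON, which is how the STDIO transport delimits messages. An in-memory `io.StringIO` stands in for the server's standard input, and the function names are hypothetical.

```python
import io
import json


def write_message(stream, message):
    """Serialise one message as a single line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


channel = io.StringIO()  # stands in for a server's stdin in this sketch
write_message(channel, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(channel, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(channel, {"jsonrpc": "2.0", "id": 2, "result": {"note": "line one\nline two"}})

channel.seek(0)
received = list(read_messages(channel))
```

The data-layer messages are unchanged by the framing, so the same dictionaries could be sent over a different transport without touching the code that builds them.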

Core features: resources, prompts and tools

Resources

Resources are representations of data or context that a server makes available. Examples include product catalogues, log files, documents, CRM records or events in a data platform. Each resource has metadata such as a name, type and a URI or path through which it can be accessed.

Via the MCP data protocol a client can request a list of available resources, fetch details about a specific resource and retrieve the content or part of the content. How this happens under the hood is up to the server, usually by issuing a query to an internal API or database. The host only sees the structured JSON response.
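A server-side sketch of the two resource operations might look as follows. The method names `resources/list` and `resources/read` and the result shapes follow the MCP specification; the in-memory `RESOURCES` store, the `catalog://` URI scheme and the handler names are assumptions made for this example.

```python
# Hypothetical in-memory store standing in for an internal API or database.
RESOURCES = {
    "catalog://products/42": {
        "name": "Product 42",
        "mimeType": "application/json",
        "text": '{"sku": "42", "price": 19.95}',
    },
}


def handle_resources_list(params=None):
    """Answer resources/list with metadata only, never the content itself."""
    return {
        "resources": [
            {"uri": uri, "name": entry["name"], "mimeType": entry["mimeType"]}
            for uri, entry in RESOURCES.items()
        ]
    }


def handle_resources_read(params):
    """Answer resources/read with the content of one resource, looked up by URI."""
    uri = params["uri"]
    entry = RESOURCES.get(uri)
    if entry is None:
        raise KeyError(f"unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": entry["mimeType"], "text": entry["text"]}]}


listing = handle_resources_list()
content = handle_resources_read({"uri": "catalog://products/42"})
```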

Prompts

Prompts in MCP are reusable prompt templates or workflows offered by the server. They are essentially predefined interaction patterns that the host can present to the user or to a model. A prompt can contain variables and may reference specific resources or tools.

By exposing prompts through MCP, server developers can package domain-specific prompt logic. The client can fetch these prompts, fill them with concrete parameters and then use them in interactions with the LLM.
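Filling a prompt template with concrete parameters can be sketched like this. The result shape (a `messages` list with `role` and `content`) follows the `prompts/get` method of the MCP specification; the prompt name `summarise_invoice`, its template text and the handler name are hypothetical.

```python
from string import Template

# Hypothetical prompt catalogue offered by a server.
PROMPTS = {
    "summarise_invoice": {
        "description": "Summarise one invoice for a customer",
        "arguments": [{"name": "invoice_id", "required": True}],
        "template": Template("Summarise invoice $invoice_id in three bullet points."),
    },
}


def handle_prompts_get(params):
    """Answer prompts/get: check required arguments, then fill in the template."""
    prompt = PROMPTS[params["name"]]
    arguments = params.get("arguments", {})
    missing = [
        a["name"] for a in prompt["arguments"]
        if a.get("required") and a["name"] not in arguments
    ]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


filled = handle_prompts_get(
    {"name": "summarise_invoice", "arguments": {"invoice_id": "INV-7"}}
)
```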

Tools

Tools are functions that the server exposes to perform actions, such as creating records, running a query, triggering a workflow or generating a report. Tools are described with a name, parameter schema and a description of their behaviour.

The client can discover tools via tool discovery and then send a tool execution request, including the required parameters. The server performs the action via its internal APIs or systems and returns a result object. The LLM can use these tools as 'actions' in a tool calling scenario.
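A tool description could look like the dictionary below. The fields `name`, `description` and `inputSchema` follow the MCP specification; the `create_customer` tool itself is hypothetical, and `missing_arguments` is a deliberately small check of required parameters, not a full JSON Schema validator.

```python
# Hypothetical tool definition as a server might advertise it.
CREATE_CUSTOMER_TOOL = {
    "name": "create_customer",
    "description": "Create a customer record in the accounting system.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name": {"type": "string"},
            "email": {"type": "string"},
        },
        "required": ["name"],
    },
}


def missing_arguments(tool, arguments):
    """Return the required parameter names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


incomplete = missing_arguments(CREATE_CUSTOMER_TOOL, {"email": "ada@example.com"})
complete = missing_arguments(CREATE_CUSTOMER_TOOL, {"name": "Ada"})
```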

Lifecycle of an MCP connection

Initialisation and discovery

When establishing a connection, the host starts an MCP client with the configuration for a specific server. The client then performs an initialisation exchange during which client and server:

exchange capabilities

announce available features and versions

request or supply optional configuration

After this step, the client knows which feature groups (resources, prompts and tools) and which messages the server supports, and it can then request the concrete lists. The host usually stores this information in internal data structures so the LLM can 'see' these capabilities through the host.
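The initialisation exchange can be sketched as plain dictionaries. The `initialize` method, the `notifications/initialized` notification and the field names follow the MCP specification; the client and server names, the version list and the helper functions are assumptions for illustration.

```python
# Protocol versions this sketch claims to speak (an assumption for the example).
SUPPORTED_VERSIONS = ["2025-06-18", "2025-03-26"]


def build_initialize_request(request_id):
    """First message of a connection: the client states its version and capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": SUPPORTED_VERSIONS[0],
            "capabilities": {"roots": {"listChanged": True}, "sampling": {}},
            "clientInfo": {"name": "example-host", "version": "0.1.0"},
        },
    }


def handle_initialize_result(result):
    """Check the negotiated version and report which feature groups the server offers."""
    if result["protocolVersion"] not in SUPPORTED_VERSIONS:
        raise RuntimeError(f"unsupported protocol version: {result['protocolVersion']}")
    return sorted(result.get("capabilities", {}))


# Sent by the client once it has accepted the server's answer.
INITIALIZED = {"jsonrpc": "2.0", "method": "notifications/initialized"}

init_request = build_initialize_request(1)
server_result = {  # example of what a server might answer
    "protocolVersion": "2025-06-18",
    "capabilities": {"tools": {"listChanged": True}, "resources": {}},
    "serverInfo": {"name": "example-server", "version": "1.0.0"},
}
features = handle_initialize_result(server_result)
```

In this example the server advertises tools and resources but no prompts, so a client would skip prompt discovery for this connection.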

Tool discovery

Tool discovery is the process whereby the server tells the client which tools exist, which parameters they accept and what their semantics are. This happens via JSON-RPC messages that return a structured list of tools.

The host can use this list to:

make the toolset visible in the interface

pass the schema to the LLM so the model knows which tool names and parameters it may use

build tooling that sorts, filters or annotates tools
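What a host does with a discovered tool list can be sketched as follows. The `tools` list mirrors the result shape of `tools/list` in the MCP specification; the two tools, the allow-list and the model-facing `parameters` key are hypothetical and depend on the host and the LLM API in use.

```python
# Example result of a tools/list call (the tools themselves are hypothetical).
tools_list_result = {
    "tools": [
        {
            "name": "delete_customer",
            "description": "Delete a customer record.",
            "inputSchema": {"type": "object", "properties": {"id": {"type": "integer"}}, "required": ["id"]},
        },
        {
            "name": "create_customer",
            "description": "Create a customer record.",
            "inputSchema": {"type": "object", "properties": {"name": {"type": "string"}}, "required": ["name"]},
        },
    ]
}

ALLOWED = {"create_customer"}  # host-side policy: which tools the model may see


def tools_for_model(result, allowed):
    """Sort tools by name, keep the allow-listed ones and pass their schema on."""
    return [
        {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],
        }
        for tool in sorted(result["tools"], key=lambda t: t["name"])
        if tool["name"] in allowed
    ]


visible_names = sorted(t["name"] for t in tools_list_result["tools"])  # for the interface
model_tools = tools_for_model(tools_list_result, ALLOWED)              # for the LLM
```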

Tool execution

When the LLM decides to invoke a tool, the host translates this intent into an MCP tool execution request. This message contains at least:

the name or identifier of the tool

the parameter values, usually as a JSON object

a request ID so that the response can be matched to the request

The server carries out the internal action, typically via an API call to an existing system, and then sends back an execution result. The client passes that result to the host, which then presents it to the model as tool output.
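The request and its matching response can be sketched like this. The `tools/call` method with `name` and `arguments`, and the result fields `content` and `isError`, follow the MCP specification; the `PendingCalls` class and the `get_product` tool are hypothetical.

```python
def build_tool_call(request_id, name, arguments):
    """Build a tools/call request carrying the tool name and its parameter values."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


class PendingCalls:
    """Track in-flight requests so each response can be matched by its id."""

    def __init__(self):
        self._pending = {}

    def send(self, request):
        # A real client would also write the request to the transport here.
        self._pending[request["id"]] = request["params"]["name"]
        return request

    def resolve(self, response):
        """Return (tool name, text output, error flag) for a finished call."""
        tool_name = self._pending.pop(response["id"])
        result = response["result"]
        text = "".join(c["text"] for c in result["content"] if c["type"] == "text")
        return tool_name, text, result.get("isError", False)


pending = PendingCalls()
call = pending.send(build_tool_call(7, "get_product", {"product_id": 42}))
reply = {
    "jsonrpc": "2.0",
    "id": 7,
    "result": {"content": [{"type": "text", "text": "Desk lamp, 7 in stock"}], "isError": False},
}
outcome = pending.resolve(reply)
```

The tuple in `outcome` is what a host would hand to the model as tool output, in whatever format the model API expects.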

Notifications

MCP also supports notifications, allowing servers to signal changes in the list of tools or resources, for example. This enables dynamic environments in which servers can add or remove capabilities at runtime. Clients respond by updating their local representation of the server capabilities.
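A client-side reaction to such a notification can be sketched as a cache that is invalidated on change. The notification method `notifications/tools/list_changed` is defined by the MCP specification; the `ToolCache` class and the fake fetch function are assumptions for this example.

```python
class ToolCache:
    """Keep the last known tool list and drop it when the server signals a change."""

    def __init__(self, fetch_tools):
        self._fetch_tools = fetch_tools  # callable that performs a tools/list round trip
        self._tools = None

    def tools(self):
        if self._tools is None:
            self._tools = self._fetch_tools()
        return self._tools

    def on_notification(self, message):
        if message.get("method") == "notifications/tools/list_changed":
            self._tools = None  # force a fresh tools/list on next access


fetch_log = []


def fake_fetch_tools():
    """Stand-in for a real tools/list call; records how often it runs."""
    fetch_log.append("tools/list")
    return [{"name": "get_product"}]


cache = ToolCache(fake_fetch_tools)
cache.tools()
cache.tools()  # served from the cache, no second fetch
cache.on_notification({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
cache.tools()  # fetched again after the notification
```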

API data in MCP

Role of APIs

MCP doesn't specify how an underlying system implements its API, but assumes the MCP server has access to a structured data layer, usually through a REST, GraphQL or other API. In practice, the MCP server acts as an adapter between an existing API and the MCP world. Typical underlying systems include:

a database or SaaS application with an existing REST API

a data platform such as an event store or KQL database with a query API

internal business services with their own endpoints

The MCP server translates a resource request or tool execution into one or more API calls, processes the response and returns it in MCP format.
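The adapter role can be sketched as a handler that turns a tool call into an API call and wraps the answer in an MCP result. To keep the example self-contained, `fake_products_api` stands in for an HTTP request to an existing REST API; the `get_product` tool, the path layout and the product data are all hypothetical.

```python
import json


def fake_products_api(path):
    """Stand-in for an HTTP GET against an existing REST API."""
    data = {"/products/42": {"id": 42, "title": "Desk lamp", "stock": 7}}
    return data.get(path)


def handle_tools_call(params):
    """Translate a tools/call request into an API call and wrap the answer."""
    if params["name"] != "get_product":
        raise ValueError(f"unknown tool: {params['name']}")
    product_id = params["arguments"]["product_id"]
    product = fake_products_api(f"/products/{product_id}")
    if product is None:
        # Tool-level failures are reported inside the result, flagged with isError.
        return {"content": [{"type": "text", "text": "Product not found"}], "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(product)}], "isError": False}


found = handle_tools_call({"name": "get_product", "arguments": {"product_id": 42}})
not_found = handle_tools_call({"name": "get_product", "arguments": {"product_id": 1}})
```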

Structuring API data

API data is usually represented as JSON within MCP, with the server responsible for:

mapping API fields to MCP resource or tool result fields

optionally anonymising or filtering data for privacy or security

adding metadata such as timestamps, IDs or type information

This abstraction ensures that the host and the model are not dependent on the exact structure of the underlying API. The only fixed element is the MCP contract that the server advertises: its resource, prompt and tool descriptions.
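The three responsibilities listed above (mapping, filtering and adding metadata) can be sketched in one small function. The API field names, the target field names and the `retrievedAt` metadata key are hypothetical; a real server would derive them from its own API and privacy requirements.

```python
from datetime import datetime, timezone

# Hypothetical mapping from API field names to the names exposed via MCP.
FIELD_MAP = {"cust_id": "id", "cust_nm": "name", "cust_eml": "email"}
SENSITIVE = {"email"}  # fields withheld from the model in this sketch


def to_mcp_record(api_record, now=None):
    """Map, filter and annotate one API record before it is returned to a client."""
    now = now or datetime.now(timezone.utc)
    record = {}
    for api_field, mcp_field in FIELD_MAP.items():
        # Unmapped API fields are dropped; sensitive mapped fields are filtered out.
        if api_field in api_record and mcp_field not in SENSITIVE:
            record[mcp_field] = api_record[api_field]
    record["type"] = "customer"
    record["retrievedAt"] = now.isoformat()
    return record


api_record = {"cust_id": 1, "cust_nm": "Ada", "cust_eml": "ada@example.com", "internal_flag": True}
fixed_time = datetime(2024, 1, 1, tzinfo=timezone.utc)
mcp_record = to_mcp_record(api_record, now=fixed_time)
```

Because the host only ever sees the mapped record, the underlying API can rename `cust_nm` without any change on the client side, as long as `FIELD_MAP` is updated.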

Example scenarios with API data

Some common scenarios where MCP and API data go hand in hand:

Accounting software where an MCP server is linked to a SaaS API, enabling a chatbot to create new customers or invoices in natural language

Product databases where an MCP server uses API endpoints to fetch product information and let an AI agent answer natural-language questions about it

Realtime data platforms where an MCP server runs queries on event houses or KQL databases and returns the results in JSON to the AI client

In all these cases MCP acts as the standard layer that governs how the AI application communicates with the API data, not how the data is stored internally.

Security and privacy

User control and consent

Because MCP grants access to potentially sensitive data and powerful tools, security is a core part of the specification. Key principles are:

explicit user consent for accessing data and performing actions

transparency about which servers are connected and which tools are available

clear user interfaces for authorising or rejecting actions

The host must ensure that no tool is executed and no data is shared without the user's knowledge.

Data privacy

MCP host implementations should handle the data offered by servers with care. This includes:

only passing resource data to models or other systems with consent

applying access control and scoping so servers do not see more data than necessary

minimising storage of sensitive data in logs or caches

Servers, in turn, usually implement their own authentication and authorisation towards the underlying APIs, for example via tokens, IP restrictions or identity providers.

Tools and code execution

Tools may involve executing arbitrary code or mutating data. MCP therefore treats tool descriptions as untrusted input unless they come from a trusted server. Hosts are expected to:

never execute tools automatically without explicit user consent

provide clear explanations of what a tool does before it is invoked

use logging and error reporting for auditing and debugging

This reduces the risk of abuse or unwanted actions that could occur through a tool execution.
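A host-side consent gate with an audit trail can be sketched as follows. This is one possible design under stated assumptions, not something MCP prescribes: the function `execute_with_consent`, the `ask_user` callback and the structure of the audit log are all hypothetical.

```python
def execute_with_consent(tool_name, arguments, ask_user, run_tool, audit_log):
    """Run a tool only after the user has approved it, and record the decision."""
    question = f"Allow tool '{tool_name}' with arguments {arguments}?"
    approved = bool(ask_user(question))
    audit_log.append({"tool": tool_name, "approved": approved})
    if not approved:
        raise PermissionError(f"user rejected tool call: {tool_name}")
    return run_tool(tool_name, arguments)


def run_tool(name, arguments):
    """Stand-in for sending a tools/call request to the server."""
    return f"{name} done"


audit_log = []

# The user approves the first call and rejects the second.
result = execute_with_consent("create_invoice", {"amount": 100}, lambda q: True, run_tool, audit_log)
try:
    execute_with_consent("delete_customer", {"id": 1}, lambda q: False, run_tool, audit_log)
    denied = False
except PermissionError:
    denied = True
```

In a real host, `ask_user` would show a dialog that includes the tool's description, so the user can judge the action before approving it.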

Implementation and SDKs

Language-specific SDKs

To simplify implementation, official SDKs exist for multiple programming languages. These SDKs:

hide the details of JSON-RPC communication

offer helper functions for server and client lifecycle

contain types and schemas for the MCP features

With these, developers can quickly spin up a new MCP server that exposes data via existing APIs, or build a client into an application such as an IDE or desktop app.

Reference servers and tooling

Alongside SDKs there are reference implementations of MCP servers for commonly used systems, for example:

popular file-storage services

development tools and repositories

database platforms

There is also tooling such as inspectors that lets developers test whether their server complies with the specification and what the responses look like.

Use in enterprise and realtime environments

MCP is increasingly integrated into enterprise environments and data platforms. Some solutions ship a full MCP server tailored to one platform, such as a realtime intelligence environment or a data explorer service. This server translates MCP tool requests directly into queries against event houses or other data sources and returns results to AI agents within seconds.

This architecture creates a modular and scalable way to give AI models access to operational and historical data, without every AI automation having to develop a full API integration from scratch.

Relationship to other protocols

MCP is often compared with the Language Server Protocol because both define a generic protocol to connect a family of tools to different hosts. Where the Language Server Protocol focuses on programming languages and development tools, MCP focuses on data and actions for AI applications. MCP is sometimes described as a "USB-C for AI"; the idea of a universal connector is shared by both protocols, but the substantive focus differs.

Summary

MCP and API data together form a generic integration framework for AI applications. MCP defines an open standard for:

how AI hosts communicate with servers via clients

how servers expose resources, prompts and tools

how JSON-based data and tool results are exchanged

The actual data usually comes from existing APIs of data sources or applications. The MCP server acts as an adapter that translates this API data into MCP messages. As a result, LLM applications can access a wide variety of systems in a uniform way, while security, privacy and user control are structurally embedded in the design.