Ever wondered which model really moves the needle in your codebase today? In this hands-on review of the latest releases I pit GPT-5 against Claude 4 Sonnet, focusing on real-world coding, agentic workflows and developer experience.

Speed is nothing without control

So what should you choose?

The gist: benchmark power vs team productivity

Baseline performance GPT-5 sets the pace with 74.9% on SWE-bench Verified; Claude 4 Sonnet logs 72.7% (both without extra test-time compute). In practice the gap feels small but noticeable on complex bug-fixes with many dependencies.

Extended thinking Sonnet 4 stretches to 80.2% with parallel test-time compute; GPT-5 claims SOTA on real-world coding and scores 94.6% on AIME 2025 without tools. Short version: Sonnet maximises with compute, GPT-5 excels at first-pass reasoning.

Agentic tooling Claude 4 brings extended thinking with tool use and parallel tool calls; Claude Code integrates tightly with VS Code and JetBrains. GPT-5 moves towards a unified agents-and-tools model in ChatGPT and the API. Both are ready for longer, multi-step tasks.

Developer experience From our own experience: Sonnet 4 is more conservative with edits (sharper, "surgical" patches); GPT-5 undertakes larger refactors with more context. Which one you want depends on your risk appetite, test coverage and CI speed.

My first week with both models

What stands out in day-to-day use

I recently hit an interesting case: the same issue, two styles. GPT-5 went for the strategic fix including a helper restructuring; Sonnet 4 kept it minimalist and touched fewer files. Both succeeded, but the review experience was totally different. The choice is not only about "best benchmark" but about team flow, tooling and your patch-acceptance criteria.

Key takeaways:

GPT-5 leads on real-world coding benchmarks and excels in mathematical reasoning; great for architectural choices and bigger-picture fixes.

Claude 4 Sonnet scores close on first-pass and can top the chart with extended compute; IDE integration and patch precision are strong.

Agentic workflows are maturing: parallel tool use, memory and longer journeys. This changes how we let code "live".

For Laravel/monorepos patch precision is gold; for greenfield or large-scale refactors GPT-5's more aggressive style can be quicker.

Actionable insights:

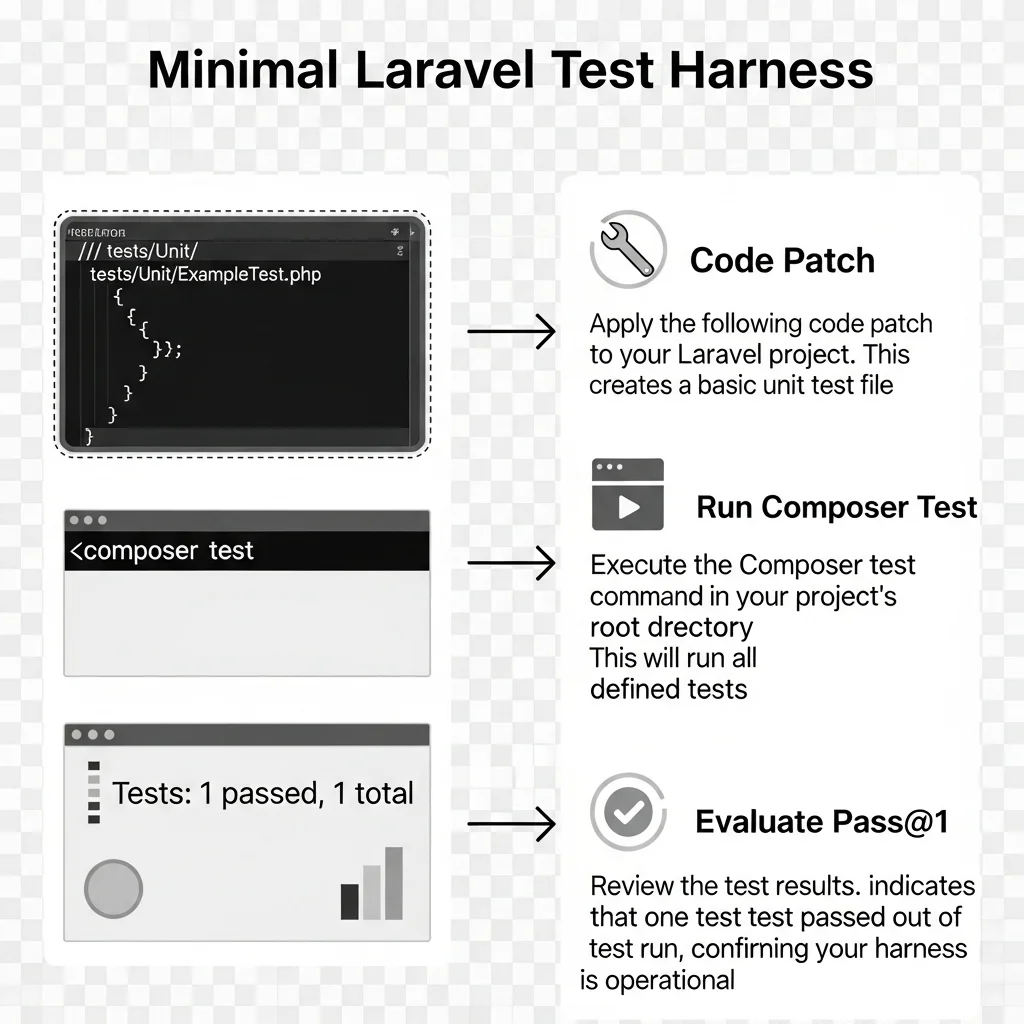

Set up a SWE-bench-style harness for your own codebase.

Measure pass@1 on your regression tests instead of trusting public benchmarks.

Start with Sonnet 4 in stricter CI, GPT-5 in exploratory branches.

Reserve extended compute for tricky issues; set caps to keep an eye on cost and runtime. 🙂

Map your code work

Our approach: label issues as "surgical fix" (one file, one bug), "guided refactor" (multiple modules) and "exploratory design" (architecture).

Tip: link labels to model choice. Sonnet 4 for surgical, GPT-5 for guided/exploratory.

Build a local SWE harness

What we do: each proposal is a patch applied to a clean checkout, then we run `composer test` or `phpunit` (Laravel) and E2E where relevant.

Pitfall: don’t let the model rewrite test files. Set `tests/` to read-only or validate diffs.

Tune prompts per task type

Pro-tip: for Sonnet 4 ask for "minimal diff, no renames, explain each edit"; for GPT-5 ask for "tactical plan, check side-effects in modules X/Y".

Most important: add repo-specific constraints (coding standards, domain invariants, migration policy).

Activate tools deliberately

Use parallel tool calls only where it really pays off (dependency analysis, migrations, performance profiling).

Handy trick: give the model a "readme-context" file with project rules and "do-not-touch" zones.

Review and learn

Log all patches, test results and revert reasons. Maintain a small "memory file" with patterns that frequently break in your codebase.

Hold a model-agnostic retro every week. Which brought fewer regressions? Where was extended thinking necessary?

Curious how this plays out in your stack? Share your context (framework, repo size, test coverage) and let’s brainstorm a smart setup. Keen on a lightning eval with your CI? Drop me a message—always happy to help. 🚀

What do the latest releases really tell us?

From benchmark to daily practice

The short version of the announcements

OpenAI introduced GPT-5 as the new flagship with SOTA on real-world coding: 74.9% on SWE-bench Verified, plus 94.6% on AIME 2025 without tools. The message: strong reasoning, strong coding, agentic-ready.

Anthropic launched Claude 4 with Sonnet 4 and Opus 4; Sonnet 4 clocks 72.7% on SWE-bench Verified (pass@1) and can hit 80.2% with parallel test-time compute. Plus: extended thinking with tool use, parallel tool calls and Claude Code plugins for VS Code and JetBrains.

Fresh off the press: Opus 4.1 lifts SWE-bench Verified to 74.5%—relevant if you chase the absolute top, but the question remains: how often do you need that extra 1-2% in your own CI?

What strikes me: benchmarks are converging. The playing field is less about scoring "one point higher" and more about:

How precise are the edits? Less collateral damage wins in large PHP/Laravel monorepos.

How well does the model stay on track in multi-turn, tool-augmented workflows?

How smooth is the integration with your existing IDE, test harness and deployment pipeline?

Sonnet 4 in a nutshell

Strong at surgical edits: "do one thing and do it well".

Extended thinking + tool use feels mature: parallel calls save human orchestration.

IDE integration lowers friction: diff review inside your editor, less context switching.

GPT-5 in a nutshell

Ambitious refactors with a planned rationale: handy on legacy sections where structure must come first.

Robust reasoning gives better "why" explanations—great for code review and knowledge transfer.

Agentic thinking heads towards uniformity: one reasoning system combining tools and memory. Fewer "which mode am I in?" questions.

Honest note: without good test coverage you’ll shoot yourself in the foot faster with either model. Benchmarks measure "solution", not "impact on your refactor debt".

How we test this in a Laravel context

Small harness, big confidence

Why build your own harness?

Public scores are useful, but your codebase is unique: custom helpers, domain rules, migration policy. That’s why we measure pass@1 on your regression and E2E tests. No magic—just discipline.

Minimal patch runner (sketch)

import subprocess, json, pathlib, difflib

def apply_patch(repo_path, patch):

for file, new_content in patch["files"].items():

p = pathlib.Path(repo_path, file)

old = p.read_text(encoding="utf-8")

if "tests/" in file:

raise RuntimeError("Tests are read-only in this flow")

p.write_text(new_content, encoding="utf-8")

print("Diff:", "\n".join(difflib.unified_diff(old.splitlines(), new_content.splitlines(), lineterm="")))

def run_tests(repo_path):

r = subprocess.run(["composer", "test"], cwd=repo_path, capture_output=True, text=True)

print(r.stdout)

return r.returncode == 0

def evaluate(model, issue):

patch = model.propose_patch(issue) # your own wrapper for GPT-5 or Sonnet 4

apply_patch("/work/repo", patch)

return run_tests("/work/repo")

Pro-tip:

Use "dry-run patches" to view diffs first.

Have the model write an "impact assessment": which modules are affected, why it chose them, risks.

Guard patch size: above a certain diff size always do a manual review.

Prompting that works

Sonnet 4: "change as little as possible", "avoid renames", "no silent design changes".

GPT-5: "create a micro-plan", "state which invariants you enforce", "check usage of helper X in module Y".

Business impact: where do you gain time and quality?

From BPR to developer happiness

Where does the ROI show up in practice?

Fewer regressions through conservative patches (Sonnet 4) in sensitive legacy areas.

Faster product improvement via larger, well-motivated refactors (GPT-5) in strategic spots.

Agentic workflows that finish epics in days instead of weeks—provided your orchestration and observability are solid.

Governance and security

Use sandboxed code execution for experiments; never let secrets leak in prompts or tool logs.

Protect "do-not-touch" zones via architectural rules (e.g. domain layer, security middleware).

Log thought traces concisely (summaries are often enough) and archive diffs and test output for auditability.

Team dynamics

Junior developers benefit from Claude Code's instant IDE feedback; seniors leverage GPT-5 for design discussions and larger restructurings.

Make pairing between human and model explicit: who leads? When do we switch?

Measure developer happiness. Less context switching and less diff noise is an underrated productivity factor. 🙂

Is GPT-5 "better" than Claude 4 Sonnet for coding?

Short answer: it depends on your use case. GPT-5 holds the higher SOTA score on real-world coding (74.9% SWE-bench Verified) and shines in reasoning. Sonnet 4 is close behind and can rank higher with parallel compute (80.2%). From our experience: Sonnet is more precise for small, safe edits; GPT-5 more readily tackles larger refactors.

How do extended thinking and parallel test-time compute relate to cost and time?

Long answer: extended thinking + parallel sampling boosts scores but increases runtime and compute. We use it selectively: only for tickets with high uncertainty or big impact. Set caps, log variants and avoid reruns if the first patch is already green.

What do you observe in Laravel projects?

From our experience: Sonnet 4 cuts "unintended side effects" in service and helper layers because it is less inclined to rename or broad-sweep refactor. GPT-5 is strong when you first need order—think removing duplicate logic and tightening boundaries. Both gain a lot with solid test coverage.

Which setup do you recommend for a monorepo with mixed stacks?

Start with a model router: Sonnet 4 for surgical tickets and hotfixes, GPT-5 for design or refactor stories. Give every patch an identical harness run. Only the best-scoring proposal moves to review. Keep "cross-stack" context handy (architecture rules in a "context.md").

Is Opus 4.1 more relevant than Sonnet 4?

Opus 4.1 gives a small boost on SWE-bench Verified (74.5%). If you’re really on the edge or running heavy agentic tracks that can count. For many teams Sonnet 4’s balance of speed, precision and IDE integration is very attractive. We test Opus 4.1 specifically on long-running tasks.

How do I safely handle tools and memory?

Use sandboxed execution, mask secrets and specify exactly what the model may read/write. Store "memory files" in a controlled folder; clearly separate temporary notes from persistent knowledge. Logging: summaries instead of full thought dumps help performance and privacy. 🔐

Which prompts work best for fewer regressions?

Ask for: "minimal diff", "do not change test files", "impact assessment per change" and "rollback plan if X fails". Add code style and domain invariants. With GPT-5 a brief plan upfront helps; with Sonnet 4 tight edit instructions help.

How do I get started without rebuilding everything?

Set up a small "eval lane" in your CI. Test 10-20 representative issues, measure pass@1 and review time. Choose a preferred model per issue type. Scale slowly, document learnings and enforce governance. Small steps, big wins. 🚀