More and more organisations are embracing generative AI, yet many stumble over a monotonous or impersonal voice. In this blog I take you through the art of prompt optimisation: smart experimentation, measurement and refinement until the output sounds as if it came straight from your content team.

Have you ever got an AI text back that felt like it was written by a robot?

Why the right prompt makes all the difference

Tone of voice goes far beyond friendly emojis; it touches your brand identity

Prompt optimisation is an iterative process of context, role description and review loops

New tools such as OpenAI's GPT-4o and Anthropic's Claude 4 allow for deeper system instructions

Real-world examples from our own dev sprints show how small tweaks yield 30–40 % quality gains

Action points in this blog:

Concrete five-step plan for prompt design

“Tone-fit” checklist with measurable criteria

Sample prompts + code snippet for automation

Pitfalls and pro tips from the field

What makes prompts powerful?

Balancing context, role and feedback

Role definition: specify exactly who the AI is, for example “You are the copy-writer for a sustainable sports brand with a playful tone.”

Style anchor: add short examples (“This is how our micro-copy sounds…”) and ask the AI to describe the style back in its own words.

Iteration loops: use scoring prompts or embeddings to label output automatically for tone fit and clear structure.

Automation: integrate prompts into your CI/CD pipeline with quick tests so every deploy safeguards the right voice.
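To make the role definition and style anchor concrete, here is a minimal sketch, assuming a chat-style API that accepts a list of role/content messages. The function name `build_messages` and the example micro-copy lines are made up for illustration; they are not taken from our production setup.

```python
from typing import Dict, List


def build_messages(role: str, style_examples: List[str], task: str) -> List[Dict[str, str]]:
    """Assemble a chat-style message list from a role, style anchors and a task."""
    # Number the anchors so the model can tell the examples apart.
    anchors = "\n".join(f"{i}. {text}" for i, text in enumerate(style_examples, start=1))
    system = (
        f"{role}\n\n"
        "This is how our micro-copy sounds:\n"
        f"{anchors}\n\n"
        "Before writing, describe this style in one sentence in your own words."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": task},
    ]


messages = build_messages(
    role="You are the copy-writer for a sustainable sports brand with a playful tone.",
    # Hypothetical example lines, for illustration only.
    style_examples=["Lace up. The planet is cheering.", "Sweat now, recycle later."],
    task="Write a two-line teaser for our new running shoe.",
)
print(messages[0]["content"])
```

Keeping the role and anchors in the system message and the task in the user message means the same voice can be reused across many tasks.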

Five-step plan for prompt design

Analyse your current voice: gather blog posts, social posts and product pages. We drop everything into an embeddings model and cluster by sentiment, jargon and sentence length.

Build a system prompt: we start with a persona, goal and constraints. For example:

SYSTEM: You are Sparky, the energetic yet knowledgeable voice of Spartner...

Design example dialogues: add two to three mini conversations that clearly showcase the desired tone.

Measure and refine: have testers (or a scoring LLM) rate the output on a 1–5 scale for personality, clarity and accuracy. Adjust the prompt and repeat.

Automate in your workflow: in our approach, a GitHub Actions workflow runs a pytest suite that sends sample prompts through the API and enforces a score threshold. If it fails → the build breaks 🚧.
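The measuring and gating steps could look roughly like this. It is a sketch under assumptions: the 4.0 pass mark, the function names and the hard-coded ratings are hypothetical, and in a real pipeline the ratings would come from testers or a scoring LLM rather than from the test itself.

```python
from statistics import mean
from typing import Dict, List

CRITERIA = ("personality", "clarity", "accuracy")
THRESHOLD = 4.0  # hypothetical pass mark on the 1-5 scale


def average_scores(ratings: List[Dict[str, int]]) -> Dict[str, float]:
    """Average the 1-5 ratings per criterion across all raters."""
    for rating in ratings:
        for criterion in CRITERIA:
            if not 1 <= rating[criterion] <= 5:
                raise ValueError(f"{criterion} must be between 1 and 5")
    return {c: mean(r[c] for r in ratings) for c in CRITERIA}


def passes_gate(ratings: List[Dict[str, int]], threshold: float = THRESHOLD) -> bool:
    """True only if every criterion's average meets the threshold."""
    return all(score >= threshold for score in average_scores(ratings).values())


def test_sample_prompt_meets_threshold():
    # pytest collects functions named test_*; a failing assert breaks the build.
    ratings = [
        {"personality": 5, "clarity": 4, "accuracy": 4},
        {"personality": 4, "clarity": 4, "accuracy": 5},
    ]
    assert passes_gate(ratings)
```

Requiring every criterion to pass, rather than one overall average, stops a very clear but bland text from slipping through on clarity alone.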

Why tone of voice so often goes wrong

AI understands nuance, but you have to explain it

When a marketer asks “Write a cheerful LinkedIn post about our release”, essential DNA is missing: target audience, emotion, brand values. The model guesses. What do I see in practice? The broader the brief, the flatter the tone. That’s why I always supply three layers of context:

Organisation essence – the core of mission and values

Persona & emotion – how do you want the reader to feel?

Conversation setting – platform, length, goal (inform, inspire, convert)
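One way to stop a brief from going out with a layer missing is to make the three layers explicit fields. The sketch below is illustrative: the `Brief` class, its field names and the example values are my own naming, not an existing library.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class Brief:
    organisation_essence: str  # core of mission and values
    persona_and_emotion: str   # how the reader should feel
    conversation_setting: str  # platform, length, goal

    def missing_layers(self) -> List[str]:
        """Return the names of layers that were left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_context(self) -> str:
        """Render the three layers as prompt context, refusing incomplete briefs."""
        missing = self.missing_layers()
        if missing:
            raise ValueError(f"Brief is missing: {', '.join(missing)}")
        return (
            f"Organisation essence: {self.organisation_essence}\n"
            f"Persona & emotion: {self.persona_and_emotion}\n"
            f"Conversation setting: {self.conversation_setting}"
        )


# Hypothetical example values.
brief = Brief(
    organisation_essence="We build software that makes teams faster and happier.",
    persona_and_emotion="A cheerful expert; the reader should feel curious and confident.",
    conversation_setting="LinkedIn post, max 120 words, goal: inspire.",
)
print(brief.to_context())
```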

"Garbage in, garbage out" is still the number-one law.

Get this right and magic moments happen

Recently I found that a simple addition – “make it playful yet credible, think of Intercom’s product-update style” – lifted click-through rate by 18 % in an A/B test.

Pitfall: over-engineering

Prompts that are too long quickly become diffuse. Our sweet spot? 100–150 clear tokens across the system and user prompts combined.
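A quick budget check keeps prompts inside that sweet spot. The sketch below counts whitespace-separated words as a crude stand-in for tokens, which is an assumption on my part; for accurate numbers you would pass in the counting function of a real tokenizer via the `count` parameter.

```python
from typing import Callable, Tuple


def rough_token_count(text: str) -> int:
    """Crude stand-in: counts whitespace-separated words, not real tokens."""
    return len(text.split())


def within_budget(
    system_prompt: str,
    user_prompt: str,
    low: int = 100,
    high: int = 150,
    count: Callable[[str], int] = rough_token_count,
) -> Tuple[bool, int]:
    """Return (is the combined length inside [low, high], combined length)."""
    total = count(system_prompt) + count(user_prompt)
    return low <= total <= high, total


system_prompt = "You are the copy-writer for a sustainable sports brand with a playful tone."
user_prompt = "Write a cheerful LinkedIn post about our release."
ok, total = within_budget(system_prompt, user_prompt)
print(f"{total} tokens (rough estimate), within sweet spot: {ok}")
```

The example prompts are deliberately bare, so the check reports them as too short: a hint that context layers are still missing.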

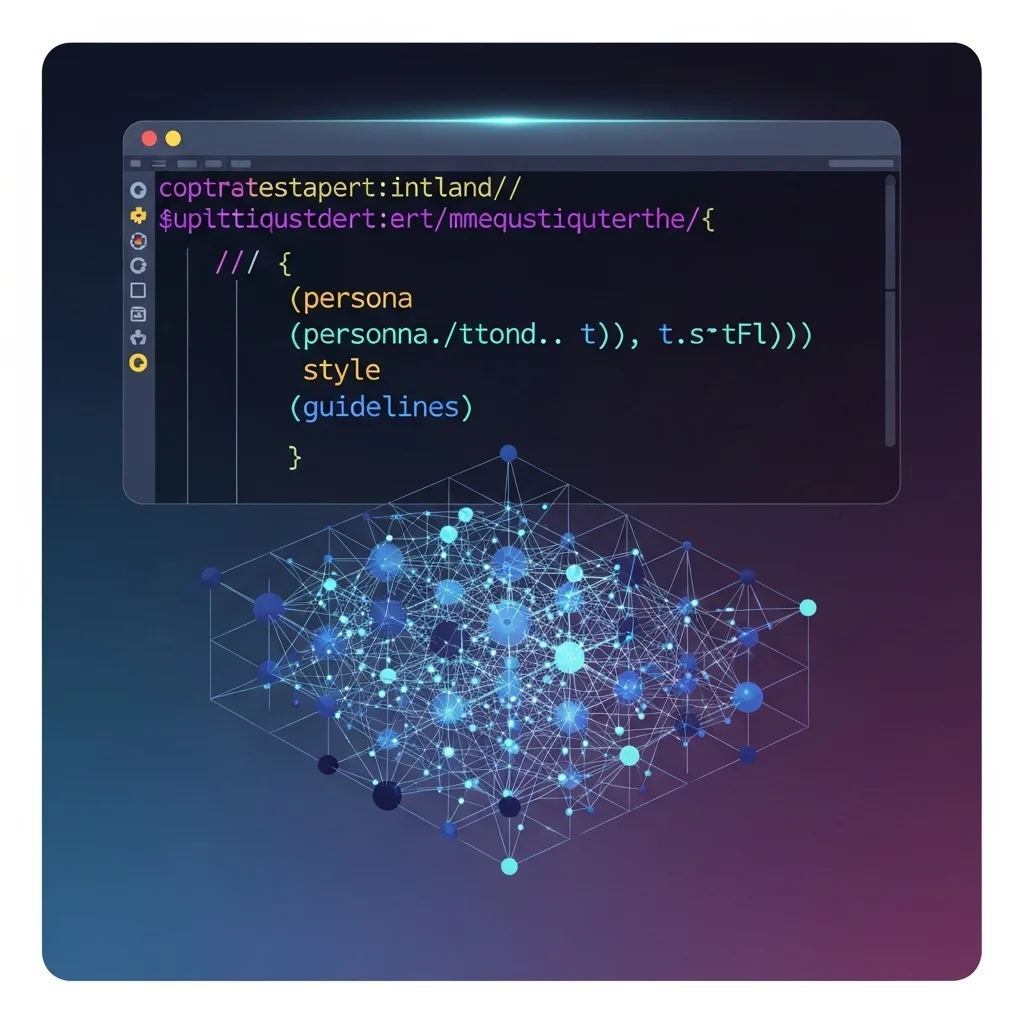

The technical layer: prompt templates & embeddings

How we automate it (without copy-pasting every time)

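from openai import OpenAI  # requires openai>=1.0

client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

# The system prompt carries identity and style; the task goes in a separate user message.
system_template = """\
You are {persona}.
Write in the style: {style}.
Keep in mind: {guidelines}.
"""


def generate(task, persona, style, guidelines):
    """Fill the template, call the API and return the reply as plain text."""
    system_prompt = system_template.format(
        persona=persona,
        style=style,
        guidelines=guidelines,
    )
    resp = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": task},
        ],
    )
    return resp.choices[0].message.content


# Example
print(generate(
    "Write an intro for our quarterly update.",
    "the cheerful yet knowledgeable voice of Spartner",
    "short sentences, Dutch humour, light on jargon",
    "no exaggeration, max 120 words",
))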

What’s interesting here: we inject variables from a CMS so marketing only fills in parameters. The script then checks via our embeddings service whether the output scores > 0.85 cosine similarity with existing brand copy.
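The similarity check itself is only a few lines. This is a minimal sketch in plain Python, assuming the embedding vectors have already been fetched from an embeddings service; the function names are illustrative and the toy vectors are far shorter than real embeddings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equally sized vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def is_on_brand(output_vec: Sequence[float], brand_vec: Sequence[float],
                threshold: float = 0.85) -> bool:
    """True if the output scores above the threshold against brand copy."""
    return cosine_similarity(output_vec, brand_vec) > threshold


# Toy vectors standing in for real embeddings.
print(is_on_brand([1.0, 0.1], [1.0, 0.0]))
print(is_on_brand([1.0, 1.0], [1.0, 0.0]))
```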

New features in GPT-4o & Claude 4

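Steerability: both models follow detailed system instructions on formality and emotion closely. As far as I know, neither API exposes a dedicated tone parameter, so the steering still happens in the prompt 💡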

JSON and structured output: machine-readable responses (valid JSON, or schema-conforming output where the API supports it), allowing you to attach and validate tone labels

Multimodal context: upload screenshots or brand guides for visual cues – handy for design-heavy brands
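Validating tone labels on a JSON response could look like this. The field names `text` and `tone` and the allowed label set are assumptions for the sake of the example; use whatever schema you ask the model to follow.

```python
import json

ALLOWED_TONES = {"playful", "credible", "formal", "neutral"}  # hypothetical label set


def parse_tone_response(raw: str) -> dict:
    """Parse a model reply and check the assumed 'text' and 'tone' fields."""
    data = json.loads(raw)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("text"), str) or not data["text"].strip():
        raise ValueError("'text' must be a non-empty string")
    tones = data.get("tone")
    if not isinstance(tones, list) or not tones:
        raise ValueError("'tone' must be a non-empty list")
    if not all(isinstance(t, str) for t in tones):
        raise ValueError("every tone label must be a string")
    unknown = set(tones) - ALLOWED_TONES
    if unknown:
        raise ValueError(f"unknown tone labels: {sorted(unknown)}")
    return data


raw = '{"text": "Fresh quarter, fresh features.", "tone": ["playful", "credible"]}'
print(parse_tone_response(raw)["tone"])
```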

Business perspective: the ROI of consistent AI content

From isolated experiments to a scalable content factory

I also notice that CMOs mainly care about numbers. So we measure:

Copy-writing time saved: –47 % in sprint 14 compared with manual work

Landing-page conversion uplift: +9 % thanks to sharper brand language

Feedback loops: 3× faster iteration through automatically reported tone deviations

What I see in board meetings: once you prove quality doesn’t suffer under automation, the budget gates open. Prompt optimisation is therefore not a “nice trick” but a serious capability within your content supply chain.

Pro tip

Link the output directly to a review workflow in Notion or Jira. Every paragraph gets a “👍 / 🔄 / 🛑” label from an editor. The status feeds our training dashboard, giving us an objective view of where the prompt still needs fine-tuning.
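Turning those labels into a dashboard number can be as simple as the sketch below. It assumes the labels have already been exported from the review tool as a plain list; the status names and the function name are my own.

```python
from collections import Counter
from typing import Dict, Iterable

# Editor labels as described in the text; the status names are illustrative.
LABELS = {"👍": "approved", "🔄": "revise", "🛑": "blocked"}


def summarise_reviews(labels: Iterable[str]) -> Dict[str, float]:
    """Return the share of paragraphs per status (0.0 to 1.0)."""
    counts = Counter(LABELS[label] for label in labels)  # KeyError on unknown labels
    total = sum(counts.values())
    if total == 0:
        return {status: 0.0 for status in LABELS.values()}
    return {status: counts[status] / total for status in LABELS.values()}


print(summarise_reviews(["👍", "👍", "🔄", "🛑"]))
```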

Curious how your brand voice sounds through an AI megaphone? 😃 Share your experiences below or drop me a DM. Let’s chat about prompts, embeddings and automation – and discover together how to take your content to the next level.

What’s the difference between a system prompt and a user prompt?

A system prompt defines the AI’s identity and ground rules. The user prompt is the specific task. In our experience, separating the two works like a style firewall: the identity stays intact regardless of changing tasks.

How often should you update your prompt?

At least once per sprint. New model releases (like GPT-4o or Claude 4) can add capabilities and may respond differently to the same prompt. Always retest and version your prompts just like code.
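Versioning prompts like code can start very small. The sketch below is one possible shape, with hypothetical names and version numbers: a frozen record per prompt plus a short hash, so a test report can state exactly which prompt and model produced a result.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    model: str
    text: str

    @property
    def fingerprint(self) -> str:
        """Short hash of model + text; changes whenever either one changes."""
        digest = hashlib.sha256(f"{self.model}\n{self.text}".encode("utf-8"))
        return digest.hexdigest()[:12]


# Hypothetical prompt names, versions and texts.
v1 = PromptVersion("intro", "1.0.0", "gpt-4o-mini", "You are the cheerful voice of the brand.")
v2 = PromptVersion("intro", "1.1.0", "gpt-4o-mini", "You are the cheerful yet credible voice of the brand.")
print(v1.fingerprint, v2.fingerprint)
```

Including the model name in the hash means a model upgrade shows up as a new fingerprint even when the prompt text is unchanged, which is the cue to retest.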

Can I capture multiple tones of voice in one model? 🙂

Yes, but be explicit. Use a {voice} variable in your template and add dedicated examples for each voice. That way the AI won’t produce an average, faceless mix.
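As a rough illustration of the {voice} variable with dedicated examples per voice, here is a small sketch. The voice names and example lines are made up.

```python
# Hypothetical voices and example lines, for illustration only.
VOICES = {
    "playful": ["Big news, small words: we shipped it!", "Coffee first, then features."],
    "formal": ["We are pleased to announce the latest release.", "Details follow below."],
}

TEMPLATE = (
    "You are the brand's {voice} voice.\n"
    "Examples of this voice:\n"
    "{examples}\n"
    "Stay in this voice only; do not blend it with other styles."
)


def system_prompt_for(voice: str) -> str:
    """Render the system prompt for one named voice."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice {voice!r}; choose from {sorted(VOICES)}")
    examples = "\n".join(f"- {line}" for line in VOICES[voice])
    return TEMPLATE.format(voice=voice, examples=examples)


print(system_prompt_for("playful"))
```

Because each voice only ever sees its own examples, the risk of an averaged-out mix is lower than with one prompt that lists every style at once.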

How do I measure tone consistency objectively?

We use embeddings: compare the vector of new output with a corpus that scores 100 % brand fit. If cosine similarity drops below 0.8, we flag it as “deviation” and adjust.
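There are several ways to compare one output against a whole corpus. The sketch below takes one possible reading: average the corpus vectors into a centroid and flag every output whose similarity to it falls below the threshold. The function names and toy vectors are illustrative, and the code assumes non-zero vectors of equal length.

```python
import math
from typing import Dict, List, Sequence


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; assumes non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def flag_deviations(outputs: Dict[str, Sequence[float]],
                    corpus: Sequence[Sequence[float]],
                    threshold: float = 0.8) -> List[str]:
    """Return the ids of outputs that score below the threshold against the corpus centroid."""
    reference = centroid(corpus)
    return [oid for oid, vec in outputs.items() if cosine(vec, reference) < threshold]


# Toy vectors standing in for real embeddings.
corpus = [[0.9, 0.1, 0.0], [1.0, 0.0, 0.1]]
outputs = {"intro": [0.95, 0.05, 0.05], "off_brand": [0.0, 1.0, 0.2]}
print(flag_deviations(outputs, corpus))
```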

Will prompt engineering soon become obsolete because of fine-tuning?

Not entirely. Fine-tuning provides domain knowledge, but tone is often situational. Prompt engineering remains the quickest way to give context-specific instructions without training new weights each time.

Are there risks in over-restricting prompts?

Absolutely. Too many rules can strangle creativity or lead to prompt fatigue. Keep a balance: hard no-gos, but leave room for unexpected angles.

Does this also work for non-text content like audio or video scripts?

Definitely! With multimodal models you can use the same technique. Add visual references (“use this colour combination”) or audio snippets for intonation guidance.

How do I start small on a tight budget?

Begin with a spreadsheet and an API key. Collect ten existing brand texts, write a system prompt, and call the API via a simple Python script. Iterate, measure, scale. 🚀