Artificial intelligence (AI) is advancing at breakneck speed. New models such as GPT-5 Turbo, Gemini 2.5 and Claude Sonnet 4.5 appear every week, and the possibilities seem endless. In this article you will discover how the technology works, which opportunities and risks are in play, and where switched-on organisations are already focusing their efforts.

Have you ever wondered why AI suddenly pops up everywhere?

Key takeaways

After years of promise, 2025 is the year AI moves from experimental to mainstream. The latest generation of foundation models, such as GPT-5 Turbo and Gemini 2.5, produces working code, multilingual content and even real-time video. At the same time, open-source initiatives (Llama 4, DeepSeek) are accelerating the pace of innovation outside the big-tech giants. As a result, companies are uncovering new growth paths, and new risks.

Current insights:

- Biggest breakthrough: token price drops 40 % within six months

- Real-time multimodal input (speech, image, video) standard in SaaS tools

- Legislation (AI Act, GDPR) enforces better governance

Actionable insight

- Understand the architecture of modern LLMs

- Start small with a use case in marketing or customer service

- Put model governance and data hygiene in place today

The lightning-fast evolution of AI models

OpenAI presented GPT-5 Turbo last week, a model that maintains a ten-million-token context window with no noticeable lag. Google's Gemini 2.5 immediately countered with native support for live video streams. Meanwhile, Anthropic is rolling out Claude Sonnet 4.5, with latency below 200 ms at 8k context, while xAI is publicly testing Grok 4 on the X platform. It feels like an arms race, but the real win lies in practical improvements: more consistent output, lower cost per 1k tokens and stronger protection against prompt injections.

An interesting development: open source is catching up faster than ever. Meta's Llama 4 was trained on 15 T tokens and scores within 3 % of GPT-5 Turbo on open benchmarks. This lowers the barrier to running models on-premises, which risk-averse sectors in particular appreciate.

Business opportunities and risks for SMEs

Most small and medium-sized businesses start with generative content. Think marketing pages via https://spartner.nl/ai-content-maken or automated service-desk answers, similar to the use case at https://spartner.nl/ai-email-servicedesk-ontwikkelen. The payoff: 30-50 % shorter turnaround times for copy plus greater consistency in tone of voice.

That brings risks as well. Unintentional data leaks creep in quickly when sensitive customer data ends up in prompts. The recent Azure incidents (8 Oct) showed how legacy GPT-4 deployments inadvertently enabled data retention, as reported on the Azure OpenAI status page. Governance is therefore not a luxury. Modern middleware such as the Mind platform adds an auditable layer between user and model and stops PII from slipping out.

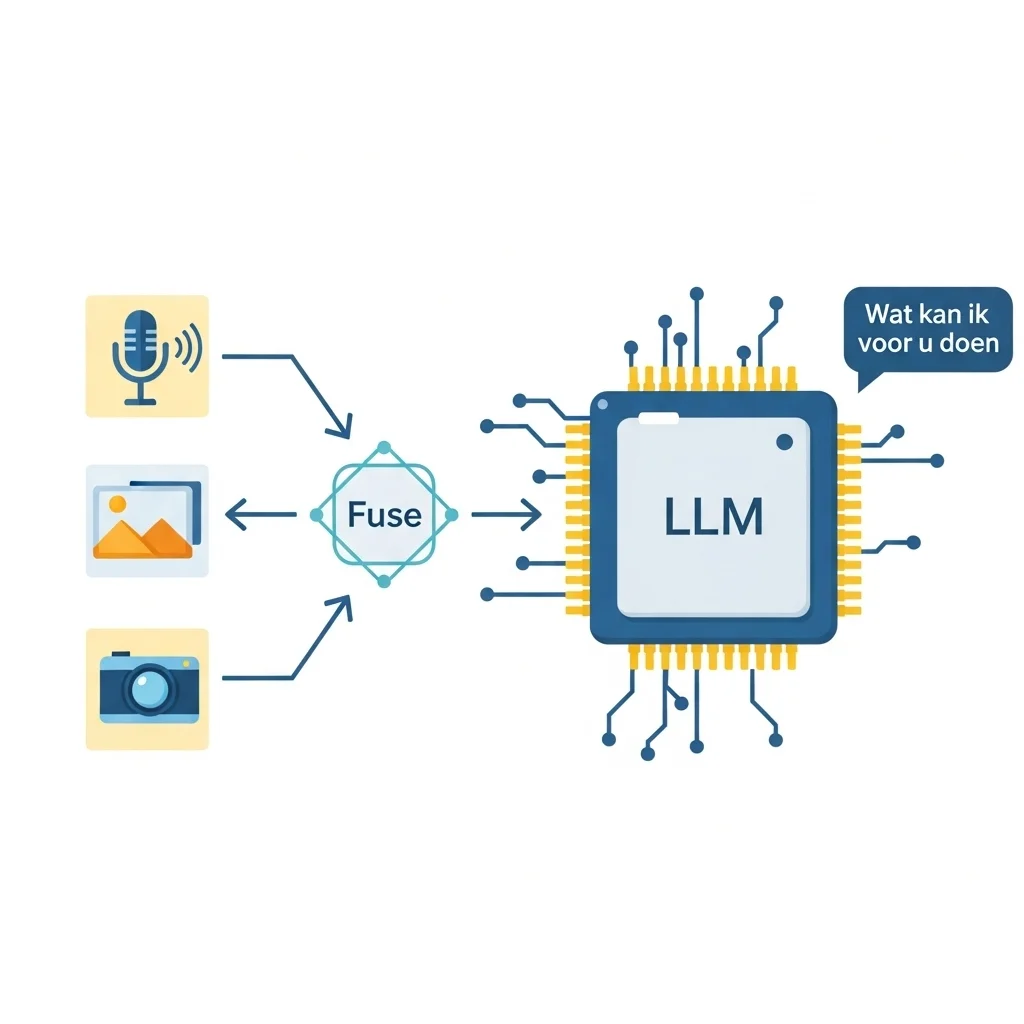

Technical basics: how an LLM works in 2025

To illustrate, here is a simplified pseudo-code flow of a multimodal prompt pipeline:

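```python
# Pseudo-code: WhisperV4, GazeV3 and GPT5Turbo are placeholder classes, not real SDK imports.
def generate_answer(audio, image, text_prompt):
    # Pre-processing: specialised models turn audio and image into text.
    speech_text = WhisperV4().transcribe(audio)
    image_caption = GazeV3().describe(image)

    # Fuse all modalities into a single text prompt for the LLM.
    fused_prompt = f"{speech_text}\n{image_caption}\n{text_prompt}"

    response = GPT5Turbo().chat(
        content=fused_prompt,
        system="Respond in clear Dutch, cite sources.",
    )
    return response
```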

- Pre-processing shifts to specialised models (Whisper V4, Gaze V3).

- The LLM acts as the orchestrator at the higher semantic layer.

- Output is post-filtered through a policy engine (for example OpenAI's Guardrails 2.0).
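The post-filtering step can be illustrated with a minimal sketch. It does not reproduce any vendor's policy engine: the rule names, the `[source: ...]` citation marker and the fallback text are assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# A rule inspects the model output and returns a reason when it objects, else None.
Rule = Callable[[str], Optional[str]]


@dataclass
class PolicyResult:
    allowed: bool
    text: str
    violations: List[str]


def requires_source(text: str) -> Optional[str]:
    # Hypothetical rule: answers must carry a "[source: ...]" marker.
    return None if "[source:" in text.lower() else "no source cited"


def max_length(limit: int) -> Rule:
    # Rule factory: reject answers longer than `limit` characters.
    def rule(text: str) -> Optional[str]:
        return f"longer than {limit} characters" if len(text) > limit else None
    return rule


def post_filter(text: str, rules: List[Rule],
                fallback: str = "No answer available.") -> PolicyResult:
    # Run every rule; replace the answer with a fallback if any rule objects.
    violations = [v for v in (rule(text) for rule in rules) if v is not None]
    if violations:
        return PolicyResult(False, fallback, violations)
    return PolicyResult(True, text, [])


rules = [requires_source, max_length(200)]
print(post_filter("The notice period is three months. [source: contract-7]", rules))
print(post_filter("The notice period is three months.", rules))
```

Keeping rules as small functions makes it easy to log exactly which rule blocked an answer, which helps during audits.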

Governance and ethics, the next challenge

Law-makers are keeping pace. The European AI Act entered into force on 1 August 2024 and applies in phases: its bans on practices such as social scoring and emotion recognition in the workplace and in education have applied since 2 February 2025. Organisations increasingly have to track risk levels and document model updates. A practical approach is to log model versions in a Model Register alongside internal DPIAs. Platforms such as https://spartner.nl/grip-op-ai-governance show how companies can embed compliance measures without slowing down innovation.
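A Model Register does not need to be complicated. The sketch below is one possible shape, not a prescribed format: the field names, risk labels and DPIA identifiers are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class ModelRecord:
    application: str   # internal name of the application using the model
    model: str         # model name as deployed
    version: str       # provider version label or release date
    risk_level: str    # internal classification, e.g. "limited" or "high"
    dpia_ref: str      # identifier of the related internal DPIA (hypothetical)
    deployed_on: str   # ISO date of the rollout


class ModelRegister:
    """Append-only log of which model version ran where, and when."""

    def __init__(self) -> None:
        self._records: List[ModelRecord] = []

    def log(self, record: ModelRecord) -> None:
        self._records.append(record)

    def history(self, application: str) -> List[ModelRecord]:
        # All recorded versions for one application, oldest first.
        return [r for r in self._records if r.application == application]

    def to_jsonl(self) -> str:
        # One JSON object per line, convenient for handing to an auditor.
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._records)


register = ModelRegister()
register.log(ModelRecord("service-desk", "model-a", "v1", "limited", "DPIA-001", "2025-09-01"))
register.log(ModelRecord("service-desk", "model-a", "v2", "limited", "DPIA-001", "2025-10-15"))
print(register.to_jsonl())
```

An append-only structure is a deliberate choice here: earlier entries are never overwritten, so the history of model updates stays reconstructable.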

Interestingly, technology itself provides the key. By combining Retrieval Augmented Generation (RAG) with rule-based filters, you can trace which source underpins an answer. That gives auditors a trail they can verify and reduces the risk of hallucinated statements in tasks such as legal contract analysis.
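To make the combination of RAG and rule-based filters concrete, here is a toy sketch. It assumes a simple keyword-overlap retriever instead of a real vector search, and the source ids and contract sentences are invented for the example.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Passage:
    source_id: str
    text: str


def tokenize(text: str) -> Set[str]:
    # Lower-case words with surrounding punctuation stripped.
    return {w.strip(".,?!").lower() for w in text.split() if w.strip(".,?!")}


def retrieve(question: str, corpus: List[Passage], top_k: int = 2) -> List[Passage]:
    # Rank passages by the number of words they share with the question.
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in corpus]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_prompt(question: str, passages: List[Passage]) -> str:
    # Each passage is prefixed with its id so the model can cite it.
    context = "\n".join(f"[{p.source_id}] {p.text}" for p in passages)
    return f"Answer using only these sources and cite their ids.\n{context}\n\nQuestion: {question}"


def citations_are_valid(answer: str, passages: List[Passage]) -> bool:
    # Rule-based filter: at least one citation, and every cited id was retrieved.
    cited = set(re.findall(r"\[([^\]]+)\]", answer))
    allowed = {p.source_id for p in passages}
    return bool(cited) and cited <= allowed


corpus = [
    Passage("contract-7", "The notice period is three months."),
    Passage("contract-9", "Payment is due within 30 days."),
]
hits = retrieve("What is the notice period?", corpus)
print(build_prompt("What is the notice period?", hits))
print(citations_are_valid("Three months [contract-7].", hits))
```

The filter only checks that cited ids exist among the retrieved passages. Whether the passage truly supports the claim still needs a human or a second check.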

What is the difference between GPT-5 Turbo and Gemini 2.5?

GPT-5 Turbo focuses on ultra-low latency and a ten-million-token context window. Gemini 2.5 puts the emphasis on native video and speech input.

Why are more and more companies opting for open-source models?

In our experience, open-source LLMs (Llama 4, DeepSeek) offer predictable costs and full data control. That is attractive for sectors where IP protection is critical.

How do you stop sensitive information leaking into the prompt?

A common practice is prompt pre-sanitising, combined with a middleware layer that masks sensitive entities. Tools such as the Mind platform apply standard PII filters 🙂
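As a rough illustration of prompt pre-sanitising, the sketch below masks e-mail addresses and phone numbers before a prompt leaves your environment. The two regular expressions are deliberately simple assumptions and do not represent how the Mind platform or any other product detects PII.

```python
import re
from typing import Dict, Tuple

# Simplified patterns for illustration; production filters need far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def mask_pii(prompt: str) -> Tuple[str, Dict[str, str]]:
    """Replace PII with numbered placeholders and return the mapping."""
    mapping: Dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def replace(match: "re.Match[str]", label: str = label) -> str:
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = match.group(0)
            return placeholder
        prompt = pattern.sub(replace, prompt)
    return prompt, mapping


def unmask(text: str, mapping: Dict[str, str]) -> str:
    """Restore the original values in a model response, inside your own environment."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


original = "Mail jan@example.com or call +31 6 1234 5678."
masked, mapping = mask_pii(original)
print(masked)
print(unmask(masked, mapping))
```

Because the mapping never leaves your own infrastructure, the model only ever sees placeholders, while the final answer can still be personalised afterwards.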

Is an AI project always expensive?

Not necessarily. By starting small with a single use case – for example automatic blog-post generation via https://spartner.nl/blog-ai-automatisering – initial budget and risk stay manageable.

How quickly do models become outdated?

Models are refreshed on average every three to six months. Thanks to a model-agnostic architecture the same application can run on GPT-5 Turbo today and on Claude Opus 4.1 tomorrow.
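What a model-agnostic architecture can look like in code is sketched below. This is a minimal example under stated assumptions: the `ChatModel` interface, the `ModelRouter` and the `EchoModel` stand-in are hypothetical names, and a real adapter would wrap the vendor SDK of your choice.

```python
from typing import Dict, Optional, Protocol


class ChatModel(Protocol):
    """Minimal interface the application codes against (hypothetical)."""

    def chat(self, system: str, content: str) -> str: ...


class EchoModel:
    """Stand-in backend for local tests; a real adapter would call a vendor SDK here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def chat(self, system: str, content: str) -> str:
        return f"[{self.name}] {content}"


class ModelRouter:
    """Keeps named backends and forwards calls to whichever one is active."""

    def __init__(self) -> None:
        self._backends: Dict[str, ChatModel] = {}
        self._active: Optional[str] = None

    def register(self, key: str, backend: ChatModel) -> None:
        self._backends[key] = backend

    def use(self, key: str) -> None:
        if key not in self._backends:
            raise KeyError(f"unknown backend: {key}")
        self._active = key

    def chat(self, system: str, content: str) -> str:
        if self._active is None:
            raise RuntimeError("no backend selected")
        return self._backends[self._active].chat(system, content)


router = ModelRouter()
router.register("vendor-a", EchoModel("vendor-a"))
router.register("vendor-b", EchoModel("vendor-b"))
router.use("vendor-a")
print(router.chat("Be brief.", "Hello"))
router.use("vendor-b")  # switching models is a one-line change for the application
print(router.chat("Be brief.", "Hello"))
```

The application only ever talks to the router, so replacing a model means writing one new adapter instead of touching every call site.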

Which legislation is most relevant?

The European AI Act for high-risk applications and, of course, GDPR for all processing of personal data. Both require transparency about model choices and data flows.

Can AI integrate with my existing systems?

Yes. Via API connections (REST, GraphQL) or direct database connectors an agent can add context. Spartner often pairs those integrations with Laravel-based back-ends so that custom code keeps running smoothly. 🚀
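A database connector that adds context can be as small as the sketch below. It uses Python with an in-memory SQLite database purely for illustration; the `orders` table, its columns and the prompt wording are assumptions, and a Laravel back-end would implement the same idea in PHP.

```python
import sqlite3


def fetch_order_context(conn: sqlite3.Connection, customer_id: int) -> str:
    """Turn a customer's orders into a short text block the model can read."""
    rows = conn.execute(
        "SELECT product, status FROM orders WHERE customer_id = ? ORDER BY id",
        (customer_id,),
    ).fetchall()
    if not rows:
        return "No orders on file."
    return "\n".join(f"- {product}: {status}" for product, status in rows)


def build_prompt(question: str, context: str) -> str:
    # The retrieved records are placed in front of the customer's question.
    return f"Context from the order system:\n{context}\n\nCustomer question: {question}"


# Demo with an in-memory database standing in for the real back-end.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, product TEXT, status TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, product, status) VALUES (?, ?, ?)",
    [(1, "Laptop", "shipped"), (1, "Mouse", "processing"), (2, "Desk", "delivered")],
)
prompt = build_prompt("Where is my order?", fetch_order_context(conn, 1))
print(prompt)
```

The parameterised query (`?`) matters: customer input never ends up in the SQL string itself.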

How do I get started with AI without trying to do everything at once?

Pick a pain point with a quick ROI, set a measurable KPI and build a minimum viable prototype. Then optimise iteratively so that internal support and budget grow with the results.