Everyone is talking about them, yet few understand them: modern AI agents are not chatty chatbots but digital colleagues who plan, decide and act. In this blog I clear up the misconception, showcase the latest building blocks and explain how we at Spartner use Spartner Mind to help organisations create their own agent ecosystem.

Have you wondered why so many 'agents' still feel like simple prompt-bots?

At a glance

AI agents combine brain, senses and action – just like a real employee.

Workflows are scripts; agents write the script on the fly.

10 fresh tools (open source & no-code) currently dominating the market.

Concrete step-by-step guide to launch your first agent tomorrow.

Actionable insight:

Agent ≠ Workflow

Thinking software in action

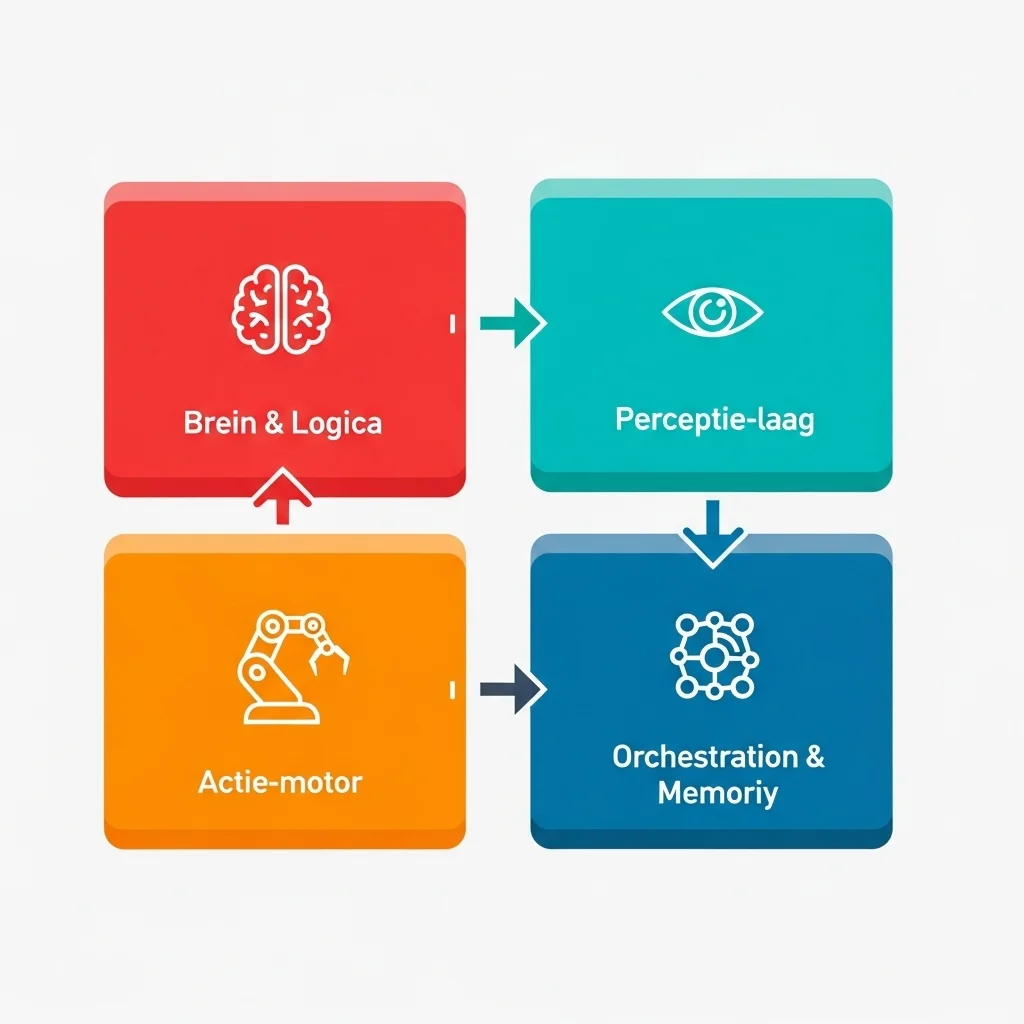

Brain & Logic: An LLM plus rules forms the decision-maker. Through fine-tuning and system prompts you can steer behaviour just as you would onboard a new colleague.

Perception layer: Tools, APIs and company data feed the model. RAG patterns, vector stores and real-time API calls make the difference between empty chatter and useful output.

Action engine: From code execution to ticket creation: an agent without write-back remains half the job. This is often where the biggest integration pain sits.

Orchestration & Memory: Conversation and task history prevent tunnel vision and costly re-computations. Think: LangGraph state or AutoGen conversation records.
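To make the four components above tangible, here is a minimal sketch in plain Python. It is an illustration under assumptions, not LangGraph or AutoGen code: the `Agent` class, `toy_brain` and both tool names are hypothetical, and the "brain" is a hard-coded function standing in for an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    # Brain: maps (goal, memory) to the next (tool_name, argument).
    # Assumption: in a real agent this would wrap an LLM call.
    brain: Callable[[str, list], tuple]
    # Perception and action: named tools the brain may call.
    tools: dict
    # Memory: a running record of what was observed and done.
    memory: list = field(default_factory=list)

    def run(self, goal: str, max_steps: int = 5) -> list:
        for _ in range(max_steps):
            tool_name, argument = self.brain(goal, self.memory)
            if tool_name == "finish":
                break
            result = self.tools[tool_name](argument)
            self.memory.append(f"{tool_name}({argument}) -> {result}")
        return self.memory


def toy_brain(goal, memory):
    # Hypothetical stand-in for an LLM: read first, then write, then stop.
    if not memory:
        return "lookup_customer", goal
    if len(memory) == 1:
        return "create_ticket", goal
    return "finish", ""


agent = Agent(
    brain=toy_brain,
    tools={
        "lookup_customer": lambda q: "customer found",  # perception
        "create_ticket": lambda q: "ticket created",    # action (write-back)
    },
)
history = agent.run("refund order 1234")
```

The memory list is what keeps the brain from repeating a step it has already taken; swapping `toy_brain` for a real model call leaves the loop unchanged.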

Build a production-ready agent in 4 steps

Define one sharp goal: What should the agent deliver within 10 minutes of runtime? We always start with a SMART user story – nothing fancy, just agile common sense.

Choose the right builder: Our rule of thumb is that the more critical the process, the less no-code you want.

Integrate business context: Index your policies, price lists or code base for retrieval (RAG) so the brain knows the relevant facts. Pro tip: use as many structured sources (YAML/JSON) as possible to reduce hallucinations.
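As a rough illustration of feeding structured facts to the model, the sketch below loads a JSON price list and puts only the matching records into the prompt. Everything in it is assumed for the example: the price list, the field names and the helper functions are hypothetical, and the naive word match stands in for a real vector search.

```python
import json

# Hypothetical price list; in practice this would be loaded from a file.
PRICE_LIST_JSON = """
[
  {"sku": "A-100", "name": "Starter", "price_eur": 49},
  {"sku": "B-200", "name": "Pro", "price_eur": 99}
]
"""


def retrieve_facts(question: str, records: list) -> list:
    """Return records whose name shares at least one word with the question."""
    words = set(question.lower().split())
    return [r for r in records if words & set(r["name"].lower().split())]


def build_prompt(question: str, records: list) -> str:
    """Put only the retrieved records into the prompt as compact JSON lines."""
    facts = retrieve_facts(question, records)
    context = "\n".join(json.dumps(f, sort_keys=True) for f in facts)
    return f"Answer using only these facts:\n{context}\n\nQuestion: {question}"


records = json.loads(PRICE_LIST_JSON)
prompt = build_prompt("What does Pro cost?", records)
```

Because each fact arrives as a small, explicit record, the model has less room to invent a price than it would with a paragraph of free text.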

Monitor & iterate: Log every conversation, measure latency and error rate, and revise your prompts weekly. At Spartner we run this in Spartner Mind as "continuous prompt integration".
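A minimal sketch of what measuring latency and error rate can look like, using only the standard library. The `RunLog` class and `flaky_agent` function are hypothetical names for this example and say nothing about how Spartner Mind does it.

```python
import time
from dataclasses import dataclass, field


@dataclass
class RunLog:
    latencies: list = field(default_factory=list)
    errors: int = 0

    def record(self, func, *args):
        """Call func, store its latency, and count any exception as an error."""
        start = time.perf_counter()
        try:
            return func(*args)
        except Exception:
            self.errors += 1
            return None
        finally:
            self.latencies.append(time.perf_counter() - start)

    @property
    def error_rate(self) -> float:
        return self.errors / len(self.latencies) if self.latencies else 0.0


def flaky_agent(message: str) -> str:
    # Hypothetical agent call that fails on empty input.
    if not message:
        raise ValueError("empty message")
    return f"handled: {message}"


log = RunLog()
log.record(flaky_agent, "reset my password")
log.record(flaky_agent, "")
```

Comparing `log.error_rate` before and after a prompt change gives you a simple signal for whether the weekly iteration actually helped.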

Curious how an agent can give your team back 10 hours a week? Drop me a message – I'm happy to spar 🤝.

Decoding the hype

Chatbot or digital colleague?

"You are not building a chatbot, but a colleague," Alex Young aptly wrote recently.

A workflow tool such as Zapier follows a predefined chain: step A ➜ step B ➜ step C. An agent, however, decides for itself whether step C should actually be step Z and fetches new data in the meantime. In our projects I often see a long Zapier chain replaced by one agent prompt plus three tool wrappers – maintenance effort drops by 70 %.
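The difference is easy to see in a toy example. In this sketch the workflow always returns the same chain, while the agent picks each next step from the current state. The order fields and step names are invented for illustration, and `choose_next` is a hard-coded stand-in for a model decision.

```python
def workflow(order: dict) -> list:
    # Fixed chain: every order goes through the same three steps.
    return ["validate", "charge", "ship"]


def choose_next(order: dict, done: list):
    # Hypothetical stand-in for an LLM deciding the next step.
    if "validate" not in done:
        return "validate"
    if not order["in_stock"]:
        return "notify_customer" if "notify_customer" not in done else None
    for step in ("charge", "ship"):
        if step not in done:
            return step
    return None


def agent_plan(order: dict) -> list:
    # The plan is built step by step, based on what has happened so far.
    done = []
    while (step := choose_next(order, done)) is not None:
        done.append(step)
    return done


normal = agent_plan({"in_stock": True})
sold_out = agent_plan({"in_stock": False})
```

For an in-stock order both approaches produce the same chain; for a sold-out order only the agent deviates and notifies the customer instead of charging them.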

Component 1 – The brain

Modern brains often run on Claude Sonnet 4, GPT-4o or the brand-new Grok 4. Each model has subtle differences in tool-use reasoning. Our tip: benchmark not only on token cost but also on action accuracy – how often does the model choose the correct next step?
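Action accuracy can be measured with a handful of labelled situations. The sketch below assumes a small hand-made test set and a `toy_model` function in place of a real model client; both are hypothetical and only show the shape of the benchmark.

```python
from typing import Callable

# Hypothetical labelled cases: a situation and the next step a human expects.
CASES = [
    ("customer asks for invoice copy", "fetch_invoice"),
    ("payment failed twice", "escalate_to_human"),
    ("customer asks for opening hours", "answer_from_faq"),
]


def action_accuracy(choose_step: Callable[[str], str], cases: list) -> float:
    """Fraction of cases where the chosen step equals the expected step."""
    correct = sum(1 for situation, expected in cases
                  if choose_step(situation) == expected)
    return correct / len(cases)


def toy_model(situation: str) -> str:
    # Stand-in for a model call; replace with a real LLM client.
    if "invoice" in situation:
        return "fetch_invoice"
    return "answer_from_faq"


score = action_accuracy(toy_model, CASES)
```

Running the same cases against each candidate model gives you a number to put next to token cost when you compare them.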

Component 2 – Perception

An agent is only as smart as its sensors. By linking LangGraph nodes to your CRM API, Kubernetes cluster or news feed the model gets a world view. Without that feed it keeps hallucinating.

Component 3 – Action

This is where the business value lies: sending emails, pushing code, booking invoices. Spartner Mind uses a Guarded Action Layer in which every external call first passes a rule engine. That prevents a hallucinating query from accidentally deleting all customer data.
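The idea of passing every external call through a rule engine first can be sketched in a few lines. This is an illustrative example and not Spartner Mind's actual Guarded Action Layer: the `Action` type, the two rules and `guarded_execute` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Action:
    tool: str
    argument: str


class BlockedAction(Exception):
    """Raised when a rule refuses an action."""


# Hypothetical rules: each returns a reason when the action is not allowed.
def no_mass_delete(action: Action):
    text = action.argument.upper()
    if action.tool == "sql" and "DELETE" in text and "WHERE" not in text:
        return "DELETE without WHERE"
    return None


def no_drop(action: Action):
    if action.tool == "sql" and "DROP " in action.argument.upper():
        return "DROP statement"
    return None


RULES = [no_mass_delete, no_drop]


def guarded_execute(action: Action, execute):
    """Run every rule first; only call execute when no rule objects."""
    for rule in RULES:
        reason = rule(action)
        if reason:
            raise BlockedAction(reason)
    return execute(action)
```

The important design choice is that the check sits outside the model: even if the LLM produces a destructive query, the call never reaches the database.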

Tooling overview 2025

The 10 hottest builders (week 34 release)

Open source

LangGraph 0.5: New streaming state graphs, ideal for multi-turn RAG flows.

AutoGen 2.1: Fully async, role chats and evaluation loops in Python.

CrewAI: Role templates + GUI; version 0.3.4 gained a built-in vector store.

OpenAI Swarm: Lightweight; agents are plain Python objects, so a single file can hold an entire agent. Perfect for hackathons.

Camel: Simulates multi-agent conversations for synthetic training data.

No-/Low-code

Vertex AI Builder: Direct integration with BigQuery & Looker.

Beam AI: Ready-made billing, compliance and extraction agents.

Copilot Studio Agent Builder: 1,200+ connectors – especially strong for internal operations.

Lyzr Agent Studio: Drag-and-drop memory modules; release 1.8 now lets you write custom RAG.

Glide: Focus on field operations; offline-first mobile UIs.

Key takeaway: open source gives you control, no-code gives you speed. Start where the most value lies – not where it merely sounds "cool".

Spartner Mind under the bonnet

How we simplify the agent landscape

Our clients do not want piles of YAML; they want results. That is why we built Spartner Mind:

Unified Tool Registry From SQL queries to Jira tickets – one standardised interface for the LLM brain.

Context Cache Real-time embeddings with automatic TTL so outdated info disappears.

Observability Dash Token spend, reasoning trees and tool calls on a single graph, letting you spot within minutes where an agent gets stuck.

Guardrails-as-Code YAML policies that block actions or require a human-in-the-loop.
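To illustrate the Context Cache item in the list above, here is a minimal sketch of a cache whose entries expire after a time-to-live. It is an assumption-laden example, not the Spartner Mind implementation: the `ContextCache` class and its key format are hypothetical, and the clock is injectable so the expiry can be tested without waiting.

```python
import time


class ContextCache:
    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._items = {}

    def put(self, key, value):
        # Store the value together with the moment it stops being valid.
        self._items[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Outdated entry: drop it so stale context never reaches the model.
            del self._items[key]
            return None
        return value


# Usage with a fake clock so expiry is visible immediately.
now = [0.0]
cache = ContextCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("price:A-100", [0.1, 0.2])
fresh = cache.get("price:A-100")
now[0] = 61.0
stale = cache.get("price:A-100")
```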

By linking these layers we can have an MVP agent safely touching production data live in under two days.

Business cases that pay off now

From support to strategy

Automatic compliance check

An insurer had us scan policy texts with Vertex agents against the latest EU regulations. Time saved: six hours per file.

Code-review bot

We connected an agent to Jira and Bitbucket. It checks pull requests for OWASP issues, tags reviewers and can suggest refactors. Error reduction: 30 %.

Market analysis on steroids

A large retailer uses a Grok 4-powered agent in Spartner Mind to calculate price changes from 50 competitors in real time. Decision-making shortened from days to minutes.

Eager to see how a custom AI agent in Spartner Mind can turbo-boost your processes? Send me a DM or book an informal coffee chat. I'd love to hear your ideas! ☕️

How does an agent differ from a traditional RPA bot?

In our experience: RPA executes hard rules, agents reason. An agent can invent new steps when faced with unexpected input instead of crashing. 😊

Which LLM is best for agents?

That depends on your tool-use requirements. GPT-4o excels at code generation, Claude 4 handles long context brilliantly, Grok 4 is currently the cheapest for large batch tasks. Always test for yourself!

Is open source risky from a security standpoint?

No, provided you self-host and scan the supply chain. We run LangGraph containers in an isolated VPC and implement rigorous code scans.

How do I prevent hallucinations?

1) Give your agent enough context (RAG). 2) Add tool-feedback loops. 3) Deploy guardrails in Spartner Mind that validate output.
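Points 2 and 3 can be combined in a small loop: validate the output and, if it fails, feed the reason back into the next attempt. The sketch below assumes a refund use case with an allowed range of 0 to 500 euros; the field name, the limits and `toy_model` are hypothetical.

```python
import json
from typing import Optional


def validate(raw: str) -> Optional[str]:
    """Return an error message, or None if the output is acceptable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return "output is not valid JSON"
    if not isinstance(data, dict):
        return "output is not a JSON object"
    amount = data.get("refund_eur")
    if not isinstance(amount, (int, float)):
        return "missing numeric field refund_eur"
    if not 0 <= amount <= 500:
        return "refund_eur outside allowed range 0-500"
    return None


def run_with_feedback(model, task: str, max_attempts: int = 3) -> str:
    prompt = task
    for _ in range(max_attempts):
        raw = model(prompt)
        error = validate(raw)
        if error is None:
            return raw
        # Feed the validation error back so the next attempt can correct it.
        prompt = f"{task}\nPrevious answer was rejected: {error}"
    raise ValueError("no valid output after retries")


def toy_model(prompt: str) -> str:
    # Stand-in for an LLM: overshoots first, corrects after feedback.
    if "rejected" in prompt:
        return '{"refund_eur": 40}'
    return '{"refund_eur": 4000}'


result = run_with_feedback(toy_model, "Propose a refund for order 1234")
```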

What does it cost to get started?

We focus on value, not price tags here. Start with a small pilot and scale once ROI is proven.

Does my team need to learn prompt engineering?

A basic understanding helps, but good agent builders abstract away about 80 % of it. We provide workshops so devs grasp what happens under the bonnet. 🚀

Can I have multiple agents work together?

Absolutely! Multi-agent patterns (think AutoGen) split complex projects into roles like Researcher, Planner and Executor.
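Stripped of any framework, the role split looks like the sketch below. It does not use the AutoGen API; the three functions are hypothetical placeholders where each role would normally be its own LLM-backed agent.

```python
def researcher(task: str) -> list:
    # Gathers raw material for the task (placeholder for a search agent).
    return [f"fact about {task}"]


def planner(task: str, facts: list) -> list:
    # Turns facts into an ordered plan (placeholder for a planning agent).
    return [f"step 1: use '{fact}'" for fact in facts] + ["step 2: report"]


def executor(plan: list) -> list:
    # Carries out each step (placeholder for an agent with write access).
    return [f"done: {step}" for step in plan]


def run_crew(task: str) -> list:
    facts = researcher(task)
    plan = planner(task, facts)
    return executor(plan)


outcome = run_crew("competitor prices")
```

Keeping the hand-offs as plain data (a list of facts, a list of steps) makes it easy to log and inspect what each role contributed.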

How do I measure success?

Define KPIs per use case: error rate, lead time, cost per ticket. What gets measured gets managed; we log everything in the observability dash.
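As a small worked example, the three KPIs named above can be computed from a ticket log like this. The log entries and field names are made up for illustration.

```python
from statistics import mean

# Hypothetical ticket log: one dict per handled ticket.
tickets = [
    {"lead_time_min": 12, "cost_eur": 0.40, "failed": False},
    {"lead_time_min": 30, "cost_eur": 0.90, "failed": True},
    {"lead_time_min": 18, "cost_eur": 0.50, "failed": False},
    {"lead_time_min": 20, "cost_eur": 0.60, "failed": False},
]


def kpis(rows: list) -> dict:
    """Aggregate error rate, average lead time and cost per ticket."""
    return {
        "error_rate": sum(r["failed"] for r in rows) / len(rows),
        "avg_lead_time_min": mean(r["lead_time_min"] for r in rows),
        "cost_per_ticket_eur": round(sum(r["cost_eur"] for r in rows) / len(rows), 2),
    }


report = kpis(tickets)
```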