Speed versus depth in AI coding

Grok-code-fast-1 or Claude Sonnet?

Feeling swamped by the ever-expanding army of AI coders? I dove into the brand-new grok-code-fast-1 release and immediately pitted it against the well-known Claude 4 Sonnet. The verdict: both giants boast unique super-powers – and blind spots – that can radically reshape our daily dev workflow.

Does speed come at a price?

Two models, one mission: ship bug-free code faster

Grok-code-fast-1: ultra-fast response times, searches internally for a fix first and then spits out ready-to-run code.

Claude 4 Sonnet: slower, but walks you through its reasoning, making debugging and knowledge transfer easier.

Early community benchmarks (HumanEval, MBPP) show a neck-and-neck race: Grok scores slightly higher on Python tasks, while Claude keeps the lead on multi-language challenges.

Big difference: vision. Claude reads screenshots & wireframes; for now Grok is blind.

Actionable insights:

Use Grok for rapid prototyping or CLI scripts.

Call in Claude when understanding, refactoring or cross-language help is required.

Combine both for a “best of both worlds” pipeline.

Who shines where?

Practical pros and cons compared

Pure speed

In identical API tests (100 prompts, 500 tokens) Grok delivers answers on average 35-45 % faster than Claude – perfect for pair-programming in the terminal.
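For anyone wanting to reproduce a comparison like this, here is a minimal timing sketch. The `call_model` callable is a hypothetical stand-in for whatever API client you use, and reading "faster" as "less wall-clock time per prompt" is an assumption, since the figure above doesn't say how it was computed.

```python
import statistics
import time
from typing import Callable, Iterable


def mean_latency(call_model: Callable[[str], str], prompts: Iterable[str]) -> float:
    """Return the mean wall-clock seconds per prompt for one model client."""
    samples = []
    for prompt in prompts:
        start = time.perf_counter()
        call_model(prompt)  # hypothetical client call: prompt in, text out
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples)


def percent_faster(fast_seconds: float, slow_seconds: float) -> float:
    """Time saved by the fast model, as a percentage of the slow model's time."""
    return (slow_seconds - fast_seconds) / slow_seconds * 100.0
```

Run `mean_latency` once per model over the same prompt set, then feed both means into `percent_faster`.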

Analytical depth

Claude thinks aloud through the problem, context and edge cases: brilliant for complex refactors, but occasionally painfully verbose.

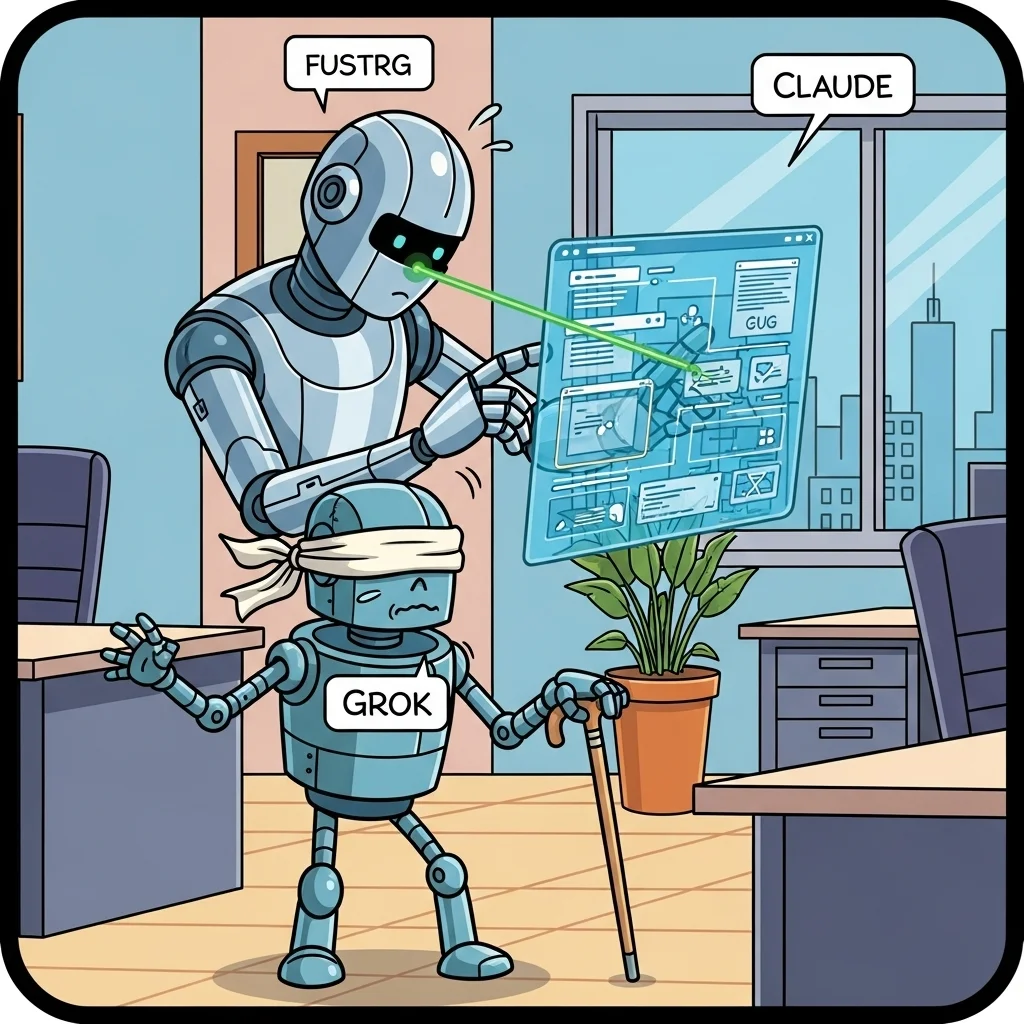

Vision support

Claude accepts image input (diagrams, stack traces, UI mock-ups). Grok doesn’t, so UX-related issues stay out of sight.

Stability

Grok rarely times out, but occasionally hallucinates library names. Claude can stumble in very long conversations, yet cites documentation more accurately.

How we squeeze the most out of them

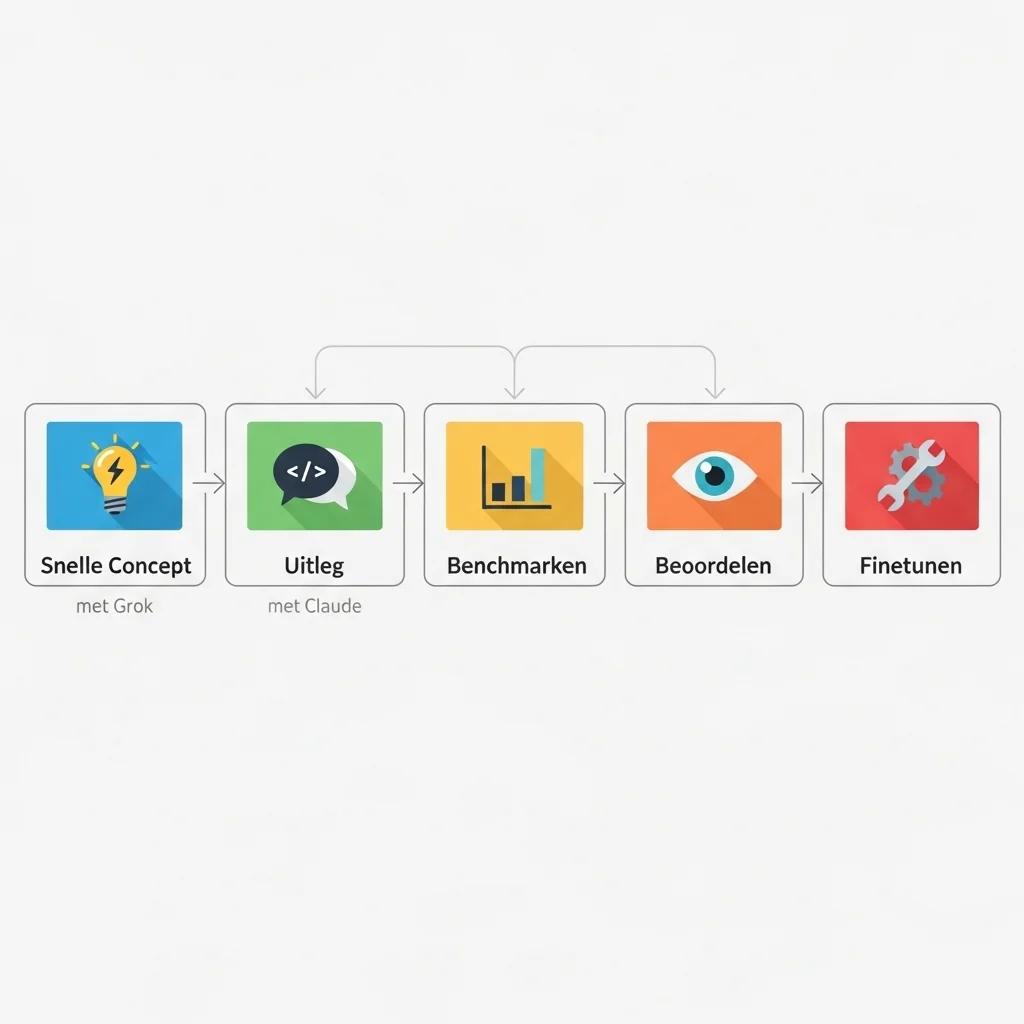

Prompt forking

Our workflow: show the same task to Grok first for a rough solution, then to Claude for explanation & optimisation.

Pro tip: mark code blocks as ``readonly`` in your prompt to stop both models rewriting identical mistakes.
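The fork itself could look roughly like the sketch below. Both model functions are hypothetical callables (prompt in, text out) and the prompt wording is made up for illustration, so treat it as a shape rather than a working integration.

```python
from dataclasses import dataclass
from typing import Callable

ModelFn = Callable[[str], str]  # hypothetical client: prompt in, text out


@dataclass
class ForkResult:
    draft: str   # rough solution from the fast model
    review: str  # explanation and optimisation from the deep model


def fork_prompt(task: str, fast_model: ModelFn, deep_model: ModelFn) -> ForkResult:
    """Ask the fast model for a draft, then hand task plus draft to the deep model."""
    draft = fast_model(f"Write code for this task. Return code only.\n\nTask: {task}")
    review_prompt = (
        "Explain and optimise the draft below.\n\n"
        f"Task: {task}\n\nDraft:\n{draft}"
    )
    return ForkResult(draft=draft, review=deep_model(review_prompt))
```

Passing the models in as plain callables keeps the pipeline testable with stubs and lets you swap providers without touching the flow.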

Parallel benchmarking

We automate HumanEval-style tests in GitHub Actions. Every PR triggers both models.

Gotcha: keep timeouts and rate limits separate; Grok is faster but needs shorter context windows.
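A minimal sketch of the "separate timeouts" idea, assuming each model is wrapped in a hypothetical blocking callable. Rate limiting is left out here, and the names `run_both` and `ModelFn` are illustrative only.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout
from typing import Callable, Dict, Optional

ModelFn = Callable[[str], str]  # hypothetical client: prompt in, text out


def run_both(
    prompt: str,
    models: Dict[str, ModelFn],
    timeouts: Dict[str, float],
) -> Dict[str, Optional[str]]:
    """Send one prompt to every model in parallel, each with its own timeout."""
    results: Dict[str, Optional[str]] = {}
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        for name, future in futures.items():
            try:
                # The clock starts when we begin waiting on this future,
                # not when the call was submitted.
                results[name] = future.result(timeout=timeouts[name])
            except FutureTimeout:
                results[name] = None  # recorded as a timeout
    return results
```

One caveat: a timed-out call is not cancelled. The worker thread keeps running and the pool waits for it on exit, so the underlying client should carry its own network timeout as well.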

Review & merge

Claude’s detailed reasoning doubles as an “explain-commit”. We paste its analysis straight into the PR description.

Grok’s output stays pure code in diff view – nice and clean.

Vision fallback

For UI bugs we automatically switch over to Claude with a screenshot attached.

Handy trick: crop the image to the error message only – that speeds up Claude’s parsing time.
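The switch can be as small as the routing sketch below. The `Task` shape and the model labels are assumptions for illustration; the cropping step is not shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    prompt: str
    screenshot_path: Optional[str] = None  # set only when an image is attached


def pick_model(task: Task) -> str:
    """Route tasks with a screenshot to the vision-capable model."""
    return "claude" if task.screenshot_path else "grok"
```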

Continual tuning

Our experience: every fortnight we revise prompt templates based on fail cases, especially around Grok’s hallucinated imports.

Where did Grok-code-fast-1 come from all of a sudden?

A peek under the bonnet

Recently I was working on a CLI tool for log analysis. Processing time was killing me and I wanted to test a parallelisation strategy fast. Within seconds Grok blasted a working ``asyncio`` implementation onto my screen. No small talk, just code.
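To give a feel for that kind of parallelisation, here is a small sketch. It is not the code Grok produced. It assumes the job is counting lines containing `ERROR` across several log files, and it uses `asyncio.to_thread` (Python 3.9+) to run the blocking file reads concurrently.

```python
import asyncio
from pathlib import Path
from typing import Dict, Iterable


def count_errors(path: Path) -> int:
    """Count lines containing 'ERROR' in one log file (blocking)."""
    with path.open(encoding="utf-8", errors="replace") as handle:
        return sum(1 for line in handle if "ERROR" in line)


async def count_errors_in_all(paths: Iterable[Path]) -> Dict[str, int]:
    """Scan all files concurrently by pushing each blocking read to a thread."""
    files = list(paths)
    counts = await asyncio.gather(
        *(asyncio.to_thread(count_errors, p) for p in files)
    )
    return {str(p): c for p, c in zip(files, counts)}
```

Call it with `asyncio.run(count_errors_in_all(paths))`. Threads help most when the work is I/O-bound; CPU-heavy parsing would need processes instead.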

Under the hood, grok-code-fast-1 builds on the same Mixture-of-Experts architecture as Grok-1.5, but trimmed for latency:

```
# simplified pseudo-config
experts: 16
tokens-per-second: 300+
context-window: 32k
```

That impressive throughput is partly thanks to GPTQ-style quantisation kernels plus an aggressive speculative decoding pipeline. Result: lag feels virtually gone.

What’s interesting is that this focus on speed is a deliberate trade-off. Internal leaks (Aug ’25) show Grok scoring 85.2 % on HumanEval-Python, a slender 1.9 % above Claude Sonnet. But on multi-language benchmarks (MOSS, MBJP) Grok drops back to 77 %, while Claude hits 80 % thanks to its richer cross-lingual training mix.

Business impact

Wondering what this means for sprint planning? Faster ideation lets teams test more hypotheses in the same time slot. But if code reviews slow down because of opaque black-box output, you still gain nothing. Claude compensates by producing documentation-grade reasoning, boosting knowledge transfer among juniors. In short: pick your model based on team maturity.

Vision: the killer feature Grok lacks

Why screenshots matter

Imagine a client posting a blurry Slack screenshot of an error in staging. With Claude I simply paste the image into the prompt and boom – it recognises the stack trace, points to a mis-chosen Tailwind utility and proposes a patch. Grok, meanwhile, is literally in the dark.

At Spartner we built a workaround: OCR the screenshot, add the plain-text stack trace and feed that to Grok. Accuracy takes a clear hit though. Until xAI adds vision, this remains a serious bottleneck for teams doing heavy UI debugging.

Security & compliance

Interesting observation: Grok logs less conversation metadata according to xAI’s latest policy update (26 Aug ’25). That can be a plus for privacy-sensitive sectors. Claude, on the other hand, offers granular redaction tools, letting you mask sensitive strings inline. Don’t decide purely on performance – evaluate governance fit.

Live coding duel: a real-world example

Scenario

We ask both models: “Write a Laravel Artisan command that cleans up stale user sessions and sends a Slack notification.”

Result

Grok produces a compact class in 6 s, uses ``Illuminate\Support\Facades\Http`` for the Slack call but forgets retry logic.

Claude takes 11 s, explains why ``Guzzle`` is handier, immediately adds exponential back-off and even suggests a unit test.

My takeaway in practice: Grok is fantastic when you already have a sharp grasp of the context and a senior will review the code later. Claude is that senior while you type.
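For readers unfamiliar with the retry logic Claude added in the duel, exponential back-off boils down to something like this sketch. It is in Python rather than PHP, it is not Claude's actual output, and the `with_backoff` name and default values are assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(
    action: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `action`, doubling the wait after every failed attempt."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise ValueError("attempts must be at least 1")
```

Wrap the Slack call in `with_backoff(lambda: send_notification(...))`, where `send_notification` is whatever function posts the message. In production you would usually catch only network errors and add some jitter.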

Why use Grok if Claude is already “good enough”?

In our experience, speed is genuinely game-changing in pair-programming. You stay in the flow state because you’re not waiting minutes for output. Plus, Grok currently edges ahead on Python-specific benchmarks. 🚀

Is the lack of vision a deal-breaker?

Depends on your stack. If you’re mostly backend-oriented you’ll seldom miss vision. Without it, building front-ends or mobile apps feels like typing with one hand.

I’ve heard Grok sometimes invents libraries. Is that true?

Yes. We’ve seen fictitious NPM packages pop up more often with Grok than with Claude. Keep a strict CI check (``composer require --dry-run`` or ``npm info``) in your pipeline. Claude hallucinates less, but it’s not flawless.
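The same idea for generated Python code can be sketched with the standard library alone. This is an illustrative check, not our pipeline: it only tests whether each imported top-level module resolves in the current environment, so a real but uninstalled package is flagged too.

```python
import ast
import importlib.util
from typing import List


def unresolved_imports(source: str) -> List[str]:
    """Return imported top-level module names that cannot be found locally."""
    roots = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            roots.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports such as "from . import x"
            roots.add(node.module.split(".")[0])
    return sorted(name for name in roots if importlib.util.find_spec(name) is None)
```

Fail the CI job whenever the returned list is non-empty, then decide by hand whether the name is invented or just missing from the environment.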

Which model offers the larger context window?

Both sit around 32k tokens, but Claude maintains performance better towards the tail of the context. Grok becomes a bit repetitive above 25k.

Can I run both models in parallel without prompt conflicts?

Absolutely. Separate prompts logically: “fast-draft” for Grok, “explain-and-refine” for Claude. Diff-checking the results lets you spot hallucinations in a flash.
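A diff check like that needs nothing beyond Python's `difflib`. In this minimal sketch the function name and the `grok`/`claude` labels are just illustrative.

```python
import difflib


def diff_outputs(grok_code: str, claude_code: str) -> str:
    """Return a unified diff between the two models' answers ('' if identical)."""
    lines = difflib.unified_diff(
        grok_code.splitlines(),
        claude_code.splitlines(),
        fromfile="grok",
        tofile="claude",
        lineterm="",
    )
    return "\n".join(lines)
```

Lines that appear in only one answer, such as an import the other model never needed, stand out immediately in the diff.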

What about open-source alternatives?

Code-Llama 70B-Instruct and Phi-3-mini are strong free options, but score roughly 15-25 % lower on HumanEval. The gap is still noticeable for mission-critical production code.

Are the benchmarks you mention public?

We run HumanEval & MBPP weekly in-house. LMSYS Arena shows similar trends, though the percentages shift with each release.

Will I get the same result via the chat UI as through the API?

Not always. Grok’s API endpoints include inline system prompts that the chat UI hides. Test both variants before drawing conclusions. 😉

Have you already experimented with Grok or Claude? I’d love to hear your experiences! Share your wins (or frustrations) below, or reach out to me directly. Let’s discover together how we can make these AI mates even smarter. 💬