Overview

Event-driven architecture is a software architecture style in which systems respond to events rather than direct requests. This knowledge-base page explains the concept in a neutral way, covering definitions, building blocks, patterns and practical implications.

Key points in this article include the role of events as a first-class concept, the use of message brokers, the difference from traditional request-response architectures and the impact on domain modelling and data consistency.

What an event is and how event-driven architecture is structured

Core patterns such as publish-subscribe, event streaming and event sourcing

Typical advantages such as scalability and loosely coupled systems

Major challenges such as observability, error handling and schema evolution

The relationship with microservices, cloud platforms and integration with existing systems

The article concludes with a concise overview of the most important design trade-offs when applying event-driven architecture.

What is event-driven architecture

Event-driven architecture is an architectural style in which events form the basis of communication between components or services. An event represents a fact that has already happened: for example, an order has been placed, a payment has been processed or a user has updated their profile. These events are captured and distributed to other parts of the system that want to react to them.

Unlike classic request-response architectures, such as a REST API that answers a direct request, event-driven architecture generally works asynchronously. A producer publishes an event to an infrastructure component—usually a message broker or event-streaming platform—after which one or more consumers receive and process it. The producer does not need to know who is listening, resulting in a low degree of coupling between systems.

Event-driven architecture is widespread in domains where near-real-time processing matters, such as financial transactions, IoT telemetry, monitoring, supply-chain logistics and web applications with rich user interaction. Modern cloud providers offer dedicated services for event processing, such as event buses, managed Kafka clusters and serverless event handlers.

Key concepts

Important terms in event-driven architecture include event, producer, consumer, topic or queue, and broker. An event describes the change in state of an entity, usually in the form of a message containing a payload, metadata and a schema or contract. The producer is the component that generates and emits the event, for example a microservice that places an order. The consumer is a component that subscribes to a particular stream of events and runs its own logic in response to each one.
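As an illustration of the message structure described above, the following minimal sketch models an event as an immutable record with a payload, metadata and a schema version. The class name `Event`, its field names and the `OrderPlaced` example are assumptions chosen for this sketch, not a standard format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any
import uuid


@dataclass(frozen=True)
class Event:
    """An immutable record of something that has already happened."""

    event_type: str              # hypothetical name, e.g. "OrderPlaced"
    payload: dict[str, Any]      # business data describing the change
    schema_version: int = 1      # ties the payload to a contract version
    # Metadata: a unique identifier and the time the fact occurred (UTC).
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


order_placed = Event(
    event_type="OrderPlaced",
    payload={"order_id": "o-1", "total_cents": 4200},
)
```

Making the record frozen reflects the idea that an event is a fact about the past: consumers may interpret it, but nobody should modify it after it has been emitted.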

A broker is the infrastructure layer that receives, stores and distributes events. Well-known types of brokers are message queues and log-based streaming platforms. A topic is a logical channel name on which events are published and consumed. By using topics and consumer groups, multiple services can work independently with the same event stream.

Beyond the basics, practice often distinguishes between notification events, which simply announce that something has happened, and state-transfer events, which include the relevant data of the changed entity as well. The choice between the two affects the level of dependency between services and the load on backing APIs and databases.
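The difference between the two event styles can be sketched as follows. The event shapes, the `CustomerUpdated` type and the `fetch_customer` helper are hypothetical; the dictionary `CUSTOMER_API` merely stands in for the producer's API or database.

```python
# Notification event: announces the fact and carries only an identifier.
notification = {"type": "CustomerUpdated", "customer_id": "c-7"}

# State-transfer event: also carries the relevant data of the changed entity.
state_transfer = {
    "type": "CustomerUpdated",
    "customer_id": "c-7",
    "data": {"name": "Ada", "email": "ada@example.com"},
}

# Hypothetical stand-in for the API or database of the owning service.
CUSTOMER_API = {"c-7": {"name": "Ada", "email": "ada@example.com"}}
api_calls = 0


def fetch_customer(customer_id):
    """Simulate a call back to the service that owns the customer data."""
    global api_calls
    api_calls += 1
    return CUSTOMER_API[customer_id]


def customer_from_event(event):
    """Return customer data, calling back only when the event lacks it."""
    if "data" in event:
        return event["data"]
    return fetch_customer(event["customer_id"])


from_notification = customer_from_event(notification)
from_state_transfer = customer_from_event(state_transfer)
```

Both consumers end up with the same data, but only the notification event triggers a call back to the owning service. That callback is the extra dependency and load the paragraph above refers to; the state-transfer event avoids it at the cost of a larger, more tightly specified payload.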

Architectural patterns within event-driven systems

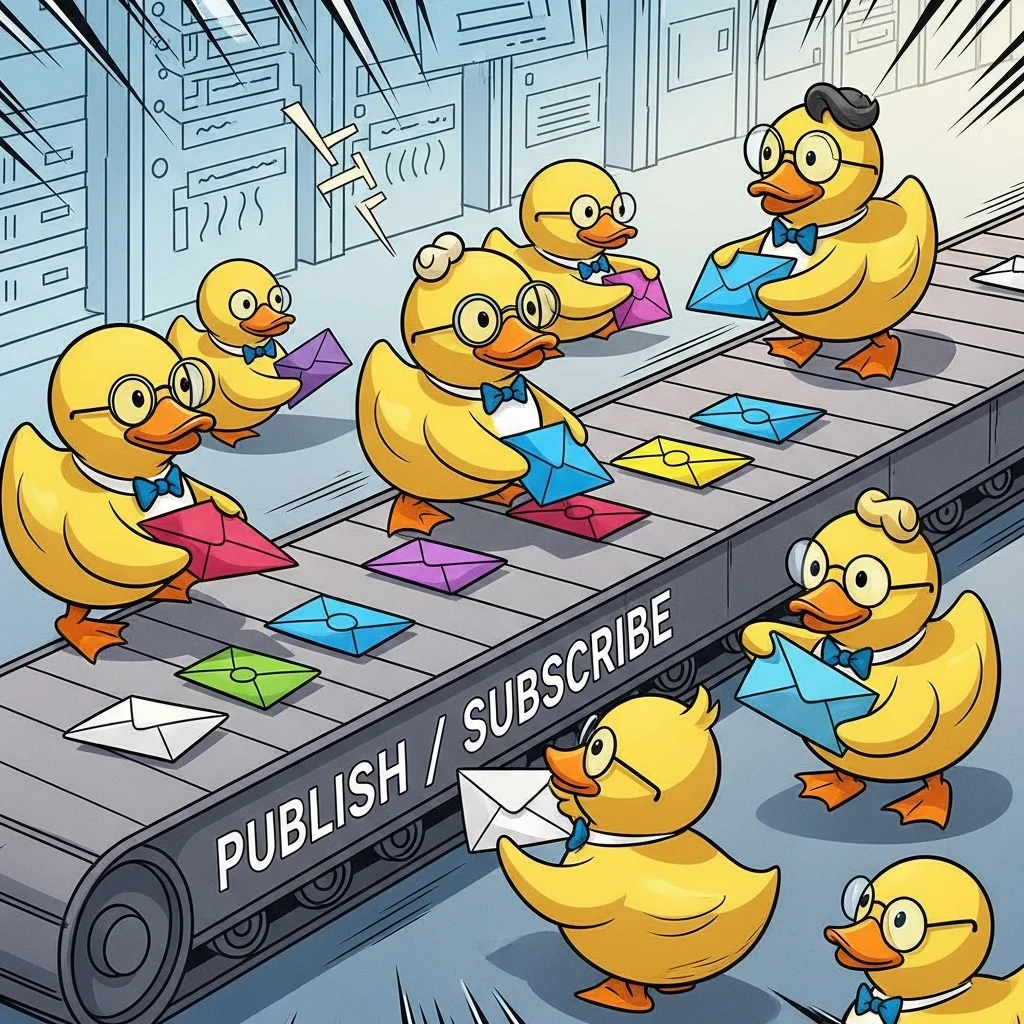

Event-driven architecture features several commonly used patterns that are often combined. One of the most familiar is the publish-subscribe pattern. In this pattern a producer publishes events to a topic, after which multiple consumers can subscribe without the producer being aware of them. This makes it easy to add new functionality later that listens to existing events, such as a notification service or an analytics component.
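A minimal in-memory sketch of publish-subscribe is shown below. The `InMemoryBroker` class, the `orders` topic and the two handlers are assumptions made for illustration; a real broker would deliver events asynchronously and across process boundaries, whereas this sketch calls the handlers directly.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy broker: routes each published event to all topic subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of handlers

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # The producer only names the topic; it never sees the handlers.
        for handler in self._subscribers[topic]:
            handler(event)


broker = InMemoryBroker()
sent_notifications = []
analytics_counts = defaultdict(int)


def notify_customer(event):
    sent_notifications.append(event["order_id"])


def count_event(event):
    analytics_counts[event["type"]] += 1


# Two independent consumers subscribe to the same topic.
broker.subscribe("orders", notify_customer)
broker.subscribe("orders", count_event)

broker.publish("orders", {"type": "OrderPlaced", "order_id": "o-1"})
```

Adding the analytics handler required no change to the publishing code, which is the property that makes it easy to attach new functionality to existing events.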

A second central pattern is event streaming. Here, events are not just delivered once and then discarded but are retained for a longer period in an ordered log. Consumers read this log at their own pace and can rewind or reread, for example when rebuilding a cache or introducing a new type of processing. Event streaming supports scenarios such as real-time analytics, out-of-order processing and replaying historical data.
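The idea of a retained, ordered log with independent read positions can be sketched as follows. The `EventLog` class and its `poll` and `rewind` methods are hypothetical names for this illustration and do not mirror the API of any particular streaming platform.

```python
class EventLog:
    """Append-only, ordered log; each consumer tracks its own position."""

    def __init__(self):
        self._events = []
        self._offsets = {}  # consumer name -> index of next event to read

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the stored event

    def poll(self, consumer, max_events=10):
        """Return the next batch for this consumer and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch

    def rewind(self, consumer, offset=0):
        """Move a consumer back so that it rereads retained events."""
        self._offsets[consumer] = offset


log = EventLog()
for reading in (21.5, 22.0, 22.4):
    log.append({"type": "TemperatureMeasured", "celsius": reading})

first_read = log.poll("dashboard")      # reads all three events
nothing_new = log.poll("dashboard")     # already caught up
log.rewind("dashboard")
replayed = log.poll("dashboard")        # same events again, e.g. to rebuild a cache
late_joiner = log.poll("new-report", max_events=2)  # new consumer starts at 0
```

Because reading does not remove events, a consumer introduced later can still process the full history at its own pace.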

A third related pattern is event sourcing. Instead of storing the state of a domain object as a current snapshot in a table, it is saved as a sequence of events that describe its entire lifecycle. The current state can be derived by applying all events in order. Event sourcing enables auditability, time-travel and the derivation of alternative views, but it requires careful modelling of domain events.
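Deriving state by applying events in order can be sketched with a simple fold. The bank-account domain, the event type names and the `apply_event` and `replay` functions are assumptions for this example.

```python
from functools import reduce


def apply_event(state, event):
    """Return the next account state after one event (no mutation)."""
    kind = event["type"]
    if kind == "AccountOpened":
        return {"balance": 0, "open": True}
    if kind == "MoneyDeposited":
        return {**state, "balance": state["balance"] + event["amount"]}
    if kind == "MoneyWithdrawn":
        return {**state, "balance": state["balance"] - event["amount"]}
    if kind == "AccountClosed":
        return {**state, "open": False}
    return state  # unknown event types leave the state unchanged


def replay(events):
    """Rebuild state by applying every event in order, starting from empty."""
    return reduce(apply_event, events, {})


history = [
    {"type": "AccountOpened"},
    {"type": "MoneyDeposited", "amount": 100},
    {"type": "MoneyWithdrawn", "amount": 30},
]

current_state = replay(history)            # state after all events
state_after_deposit = replay(history[:2])  # "time travel" to an earlier point
```

Replaying a prefix of the history yields the state at that earlier moment, which is what makes auditing and time-travel possible without storing separate snapshots.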

Within enterprise environments, event-driven patterns are often combined with command query responsibility segregation, or CQRS. In CQRS, writing data (commands) is separated from reading data (queries). Events act as the link between the write side and one or more read models. This can improve scalability and performance, especially when read traffic and write load differ greatly.
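The separation of the write side from a read model, linked by events, might look like the sketch below. The stock-keeping domain and the names `handle_command`, `project` and `query_stock` are hypothetical. For brevity the projection runs synchronously inside the command handler, whereas in practice it is typically updated asynchronously by a consumer.

```python
event_store = []        # write side: append-only list of accepted events
stock_read_model = {}   # read side: product_id -> quantity on hand


def project(event):
    """Apply one event to the read model."""
    product_id = event["product_id"]
    current = stock_read_model.get(product_id, 0)
    if event["type"] == "StockAdded":
        stock_read_model[product_id] = current + event["quantity"]
    elif event["type"] == "StockRemoved":
        stock_read_model[product_id] = current - event["quantity"]


def handle_command(command_type, product_id, quantity):
    """Command side: validate, record an event, then update the projection."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    event_types = {"add_stock": "StockAdded", "remove_stock": "StockRemoved"}
    if command_type not in event_types:
        raise ValueError(f"unknown command: {command_type}")
    event = {
        "type": event_types[command_type],
        "product_id": product_id,
        "quantity": quantity,
    }
    event_store.append(event)
    project(event)  # synchronous here only to keep the sketch short


def query_stock(product_id):
    """Query side: reads the precomputed view and never touches the events."""
    return stock_read_model.get(product_id, 0)


handle_command("add_stock", "p-1", 10)
handle_command("remove_stock", "p-1", 4)
```

Since queries only touch the read model, that model can be shaped, indexed and scaled for read traffic independently of how commands are validated and stored.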

Integration with microservices and existing systems

Event-driven architecture aligns closely with microservices architecture. Instead of services calling each other directly via synchronous HTTP calls, part of the interaction is handled through events. This reduces mutual dependencies and lowers the risk of cascading failures. A service can complete its internal transaction and then publish a domain event, after which other services perform their own transactions based on that event.

Existing systems that were not originally event-oriented can still participate in an event-driven landscape. This is often done through change-data capture, where changes in a relational database are translated into events, or via adapters that generate events for specific actions, such as placing an order in a monolithic application. In this way, event-driven architecture can be introduced gradually without a full rebuild.
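To give a feel for how database changes become events, the sketch below compares two snapshots of a table and emits one change event per difference. This is a deliberate simplification: production change-data capture tools usually read the database's transaction log rather than diffing snapshots, and the `diff_to_events` function and event shape are assumptions for this example.

```python
def diff_to_events(table, before, after):
    """Compare two snapshots keyed by primary key and emit change events."""
    events = []
    for key in sorted(after.keys() - before.keys()):
        events.append(
            {"op": "insert", "table": table, "key": key, "after": after[key]}
        )
    for key in sorted(before.keys() & after.keys()):
        if before[key] != after[key]:
            events.append(
                {
                    "op": "update",
                    "table": table,
                    "key": key,
                    "before": before[key],
                    "after": after[key],
                }
            )
    for key in sorted(before.keys() - after.keys()):
        events.append(
            {"op": "delete", "table": table, "key": key, "before": before[key]}
        )
    return events


# Hypothetical "orders" table before and after some legacy-application writes.
snapshot_before = {1: {"status": "new"}, 2: {"status": "new"}}
snapshot_after = {1: {"status": "paid"}, 3: {"status": "new"}}

change_events = diff_to_events("orders", snapshot_before, snapshot_after)
```

The legacy application itself is untouched: the events are derived from the data it already writes, which is what allows a gradual introduction.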

Cloud platforms usually offer managed services that support event-driven patterns, such as event buses, serverless functions that react to events, and storage for event logs. These make it easier to achieve scalable and resilient event flows without managing your own infrastructure. They often add extra features such as retry mechanisms, dead-letter queues and integration with monitoring.
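The retry and dead-letter behaviour that managed services provide can be approximated in a few lines. The function `process_with_retries`, the attempt limit and the two handlers are assumptions for this sketch; it retries immediately, whereas real platforms usually wait between attempts.

```python
def process_with_retries(event, handler, dead_letters, max_attempts=3):
    """Try the handler up to max_attempts times; park the event on failure."""
    last_error = None
    for _ in range(max_attempts):
        try:
            handler(event)
            return True
        except Exception as error:  # broad on purpose: any failure is retried
            last_error = error
    # All attempts failed: keep the event and the reason for later inspection.
    dead_letters.append(
        {"event": event, "error": str(last_error), "attempts": max_attempts}
    )
    return False


attempts_seen = []


def flaky_handler(event):
    """Fails twice, then succeeds (simulates a temporary outage)."""
    attempts_seen.append(event["id"])
    if len(attempts_seen) < 3:
        raise ConnectionError("downstream unavailable")


def broken_handler(event):
    """Always fails (simulates an event that can never be processed)."""
    raise ValueError("cannot parse payload")


dead_letter_queue = []
recovered = process_with_retries({"id": "e-1"}, flaky_handler, dead_letter_queue)
parked = process_with_retries({"id": "e-2"}, broken_handler, dead_letter_queue)
```

Transient failures are absorbed by the retries, while an event that keeps failing is moved aside so that it does not block the events behind it.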

A concise introduction to event-driven architecture can be found in various technical talks and conference videos. One example is the general introduction video on https://www.youtube.com/watch?v=STKCRSUsyP0, which illustrates the basic principles and use cases.

Advantages, disadvantages and design trade-offs

Event-driven architecture offers several benefits that carry weight in modern systems. A major advantage is the loosely coupled interaction between services. Because producers and consumers do not need to know each other directly, new functionality can be added without changing existing services. This supports agility and makes it easier to develop and deploy parts of the system independently.

A second benefit is scalability. Event-driven systems are essentially asynchronous and can buffer events. As a result, peak loads can be absorbed by scaling the processing capacity of consumers—for example, through auto-scaling containers or serverless functions. Moreover, event-streaming platforms are optimised for high throughput and large volumes of data, which is advantageous in data-intensive environments.

A third advantage is improved auditability and observability of system behaviour. Because events record what has happened, they provide a detailed trail of changes. This can be used for error analysis, compliance, reporting and machine-learning use cases. With event sourcing this is even stronger, as every state change of a domain object is explicitly stored.

These benefits do, however, come with clear disadvantages and design challenges. A frequently cited challenge is the complexity of debugging and error handling in an asynchronous landscape. Problems often manifest further along an event chain, making it harder to link cause and effect directly. In addition, maintaining end-to-end consistency and keeping latency within acceptable bounds requires extra attention.

Data consistency, contracts and governance

Event-driven architecture has a major impact on the model for data consistency. Instead of strict, synchronous transactions across multiple services, systems usually work with eventual consistency. This means that not all components hold exactly the same state at every moment, but they converge over time. Designers must take this into account when modelling user interfaces, reports and integrations.

A crucial aspect is the management of event contracts and schemas. Producers and consumers must agree on the meaning of an event, its fields and their types. In practice this is often handled via schema registries with versioning, so that events can evolve backwards-compatibly without breaking existing consumers. Schema evolution requires strict agreements and automated validation in the CI/CD pipeline.
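An automated compatibility check of the kind that could run in a pipeline is sketched below. It uses a deliberately simplified rule, which is an assumption of this example: existing fields must keep their name and type, and any added field must be optional. Schema registries apply richer rules, and the schema representation and the `is_compatible` function here are hypothetical.

```python
def is_compatible(old_schema, new_schema):
    """Simplified check: keep existing fields, allow only optional additions."""
    for name, spec in old_schema.items():
        if name not in new_schema:
            return False  # removing a field breaks existing consumers
        if new_schema[name]["type"] != spec["type"]:
            return False  # changing a type breaks existing consumers
    for name in new_schema.keys() - old_schema.keys():
        if new_schema[name]["required"]:
            return False  # older events would lack a newly required field
    return True


order_v1 = {
    "order_id": {"type": "string", "required": True},
    "total_cents": {"type": "int", "required": True},
}

# v2 adds an optional field: allowed under the simplified rule.
order_v2 = {**order_v1, "coupon_code": {"type": "string", "required": False}}

# v3 changes a type and adds a required field: rejected.
order_v3 = {
    "order_id": {"type": "string", "required": True},
    "total_cents": {"type": "string", "required": True},
    "currency": {"type": "string", "required": True},
}

v2_ok = is_compatible(order_v1, order_v2)
v3_ok = is_compatible(order_v1, order_v3)
```

Running such a check against the previously published version before a producer is deployed turns the agreement between teams into something a build can enforce.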

Governance in event-driven landscapes also covers topics such as idempotency, avoiding duplicate event processing, topic security and access control. Idempotent consumers can process an event multiple times without unintended duplicate actions, which is important for retries and fail-over. Events often need to be classified by sensitivity so that only authorised services gain access.
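One common way to make a consumer idempotent is to remember which event identifiers have already been handled. The sketch below assumes every event carries a unique `event_id`; the class name is hypothetical, and the in-memory set stands in for the durable storage a real consumer would need.

```python
class IdempotentConsumer:
    """Wraps a handler so that each event_id takes effect at most once."""

    def __init__(self, handler):
        self._handler = handler
        self._processed_ids = set()  # a durable store in a real system

    def handle(self, event):
        """Return True if the event was processed, False if it was a duplicate."""
        if event["event_id"] in self._processed_ids:
            return False
        self._handler(event)
        # Record the id only after the handler succeeded, so that a failed
        # attempt can still be retried.
        self._processed_ids.add(event["event_id"])
        return True


charges = []
consumer = IdempotentConsumer(lambda event: charges.append(event["amount"]))

payment = {"event_id": "evt-42", "amount": 1999}
first_delivery = consumer.handle(payment)
redelivery = consumer.handle(payment)  # e.g. after a broker retry or fail-over
```

The customer is charged once even though the event arrived twice, which is the behaviour that makes at-least-once delivery safe to use.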

In hybrid environments where request-response and event-driven communication coexist, a clear integration architecture is needed. A common approach is to identify domain events that are sufficiently important to publish, while less critical interactions remain synchronous. This helps to reap the benefits of event-driven architecture without introducing unnecessary complexity.

Frequently asked questions about event-driven architecture

What is the difference between event-driven architecture and a classic layered architecture?

In a classic layered architecture, components usually communicate synchronously through direct calls, often in a strict sequence of presentation, business logic and data. In event-driven architecture, events are the central communication mechanism and components respond asynchronously to occurrences. This reduces direct coupling between layers and services, which can increase flexibility and scalability.

Does event-driven architecture mean everything has to be asynchronous?

No. In practice, event-driven communication is often combined with synchronous communication such as REST or gRPC, depending on the interaction type. Critical flows that require immediate feedback to a user often remain partly synchronous, while derived actions or integrations are handled via events. Designing a deliberate mix of both, suited to the functional and non-functional requirements, is common.

Is event sourcing the same as event-driven architecture?

Event sourcing is a specific pattern in which the state of entities is stored as a sequence of events rather than as a snapshot. Event-driven architecture is a broader architectural style that uses events as a communication mechanism between components. Event sourcing can be applied within an event-driven architecture, but it is not a prerequisite. Many systems use events without having their internal storage event-sourced.

How does event-driven architecture relate to microservices?

Microservices often benefit from event-driven communication because it reduces mutual dependence and supports independent deployability. Instead of complex chains of synchronous service calls, a service publishes a domain event after a successful transaction. Other services then react with their own logic. This helps limit chain reactions during failures and makes per-service scaling easier.

Which tools and platforms are commonly used for event-driven architecture?

Frequently used infrastructure components include message brokers and streaming platforms that support topics, consumer groups and event storage. Cloud providers also offer specialised services for event routing, serverless consumers and monitoring. Tools for schema registration, observability and tracing are applied to maintain visibility into event streams, error handling and performance across multiple systems.

Is event-driven architecture suitable for every type of application?

Not every application benefits equally from an event-driven approach. Applications with a great deal of real-time interaction, integrations with multiple systems and a need for scalable processing are often good candidates. For small, relatively isolated systems, the extra complexity of event infrastructure may be less worthwhile. Many organisations end up with a mixed landscape, in which only the parts with clear advantages are set up in an event-driven way.

How do you handle debugging in an event-driven system?

Debugging in an event-driven environment usually requires greater attention to logging, correlation identifiers and distributed tracing. By adding metadata to each event—such as a correlation ID and causation ID—you can follow the route of an occurrence through the system. Central observability solutions that combine logs, metrics and traces from producers and consumers further help to provide insight into the chain.
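The propagation of correlation and causation identifiers can be sketched as follows. The helper names `new_event` and `trace`, the field names and the order flow are assumptions for this illustration.

```python
import uuid


def new_event(event_type, payload, caused_by=None):
    """Create an event, inheriting the correlation ID from its cause."""
    event_id = str(uuid.uuid4())
    return {
        "event_id": event_id,
        "type": event_type,
        "payload": payload,
        # The first event in a chain starts a new correlation ID.
        "correlation_id": caused_by["correlation_id"] if caused_by else event_id,
        # The causation ID points at the direct predecessor, if any.
        "causation_id": caused_by["event_id"] if caused_by else None,
    }


def trace(events, correlation_id):
    """Return the event types that belong to one flow, in recorded order."""
    return [e["type"] for e in events if e["correlation_id"] == correlation_id]


order_placed = new_event("OrderPlaced", {"order_id": "o-1"})
payment_processed = new_event(
    "PaymentProcessed", {"order_id": "o-1"}, caused_by=order_placed
)
shipment_scheduled = new_event(
    "ShipmentScheduled", {"order_id": "o-1"}, caused_by=payment_processed
)
unrelated = new_event("ProfileUpdated", {"user_id": "u-9"})

recorded = [order_placed, unrelated, payment_processed, shipment_scheduled]
order_flow = trace(recorded, order_placed["correlation_id"])
```

The correlation ID groups everything that belongs to one business flow, while the causation ID links each event to its direct trigger, so the chain can be reconstructed step by step.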