Sora 2 marks a leap forward in AI-generated video, letting creators and businesses look beyond traditional production. In this article I explain what Sora 2 is and share three recent video examples, including the YouTube links that demonstrate its possibilities in practice.

Have you marvelled at Sora 2 yet?

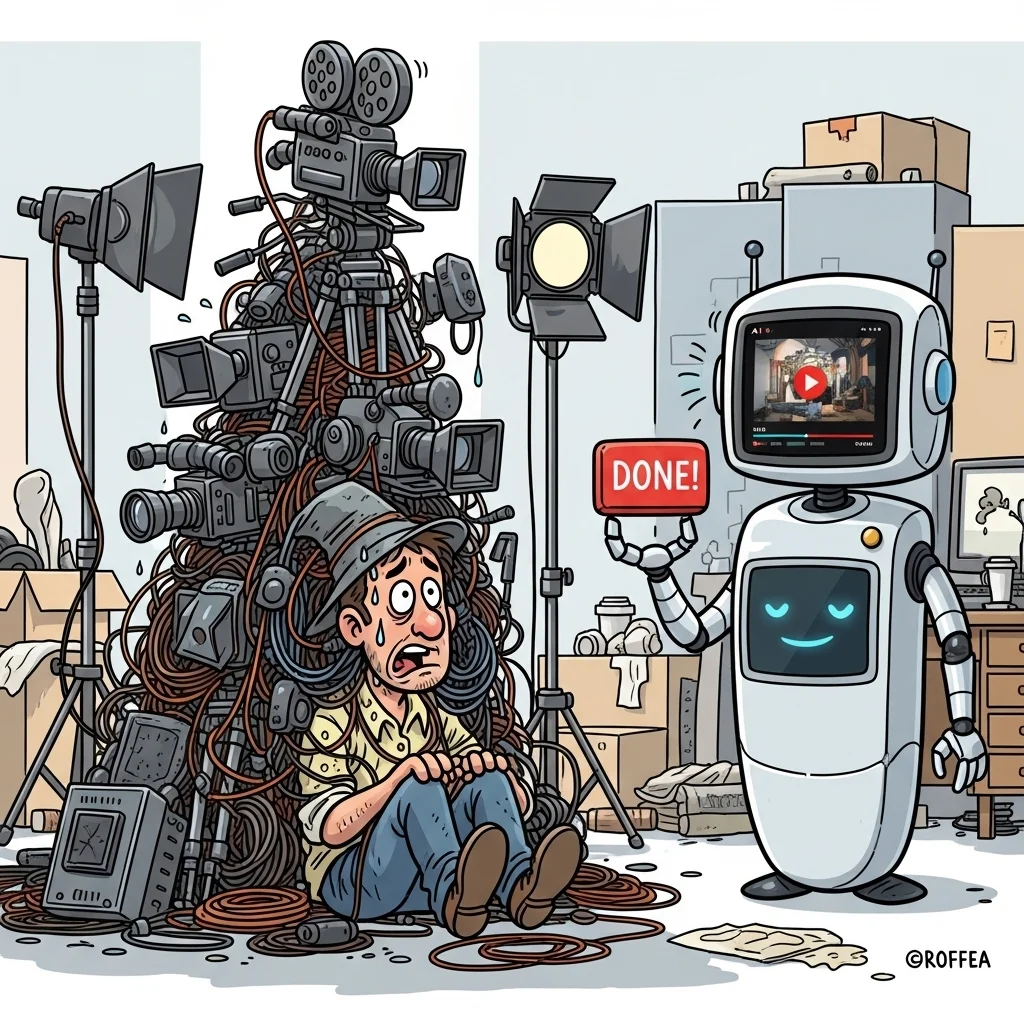

What struck me immediately at the launch of Sora 2 is how quickly video production is shifting from a specialist process to something a single person can do, with impressive results in very little time. Recent demos and walkthroughs showcase both stunning creativity and very real risks around misuse and copyright.

Key takeaways

Sora 2 is a text-to-video model from OpenAI that can generate realistic short clips, complete with sound and complex motion.

The latest demos show a greatly improved grasp of physics, expression and style transfer, yet also reveal 'slop' and unwanted deepfakes.

Creators combine Sora 2 with other AI tools to build workflows that pump out viral content at speed.

What is Sora 2?

Sora 2 is OpenAI's latest text-to-video model, capable of producing short, coherent videos from text prompts and reference images. Compared with earlier video models, Sora 2 shows far better understanding of motion, facial expression and basic physics, making scenes look and sound more realistic.

Its importance becomes clear fast: tasks that once demanded hours of shooting and VFX can now be tested in minutes. That doesn’t mean everything is perfect, but the journey from concept to proof-of-concept is radically shorter. For creatives that spells huge freedom; for businesses it unlocks new marketing and content strategies.

A great place to start is OpenAI's official demo, which shows what Sora 2 can do and how its makers present it. Watch the intro video below to get a feel for the possibilities and limitations:

Sora 2 is designed to handle a range of styles, from photorealistic to cartoon, and it uses reference images for style transfer. That makes it useful for product demos, short narrative clips and social content, although it still requires human steering and quality control.

Example: official demo and what it reveals

The official Sora 2 presentation is compelling because OpenAI showcases various examples that immediately highlight the technical gains: better lip-sync, more realistic object movement and audio integration. The demo includes short narrative scenes as well as technical showcases where Sora 2 simulates more complex actions and camera moves.

What stands out is the level of control a prompt designer can achieve: you specify not only the scene but also the style, perspective and timing of sound effects. The demo therefore offers a practical starting point for grasping which prompts work and how to iterate towards better results.
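To make that level of control more concrete, here is a minimal sketch of how a prompt designer might keep scene, style, perspective and sound timing as separate fields and then flatten them into one text prompt. The `ShotPrompt` and `SoundCue` names and all field names are my own illustrative assumptions; they are not Sora 2 parameters or part of any OpenAI API.

```python
from dataclasses import dataclass, field


@dataclass
class SoundCue:
    # Hypothetical: a sound effect and the second at which it should occur.
    at_seconds: float
    description: str


@dataclass
class ShotPrompt:
    # Field names are illustrative assumptions, not Sora 2 parameters.
    scene: str
    style: str
    perspective: str
    sound_cues: list[SoundCue] = field(default_factory=list)

    def to_text(self) -> str:
        """Flatten the structured fields into one plain-text prompt."""
        parts = [
            f"Scene: {self.scene}.",
            f"Style: {self.style}.",
            f"Camera: {self.perspective}.",
        ]
        # List sound cues in chronological order, whatever order they were added in.
        for cue in sorted(self.sound_cues, key=lambda c: c.at_seconds):
            parts.append(f"At {cue.at_seconds:g}s, sound of {cue.description}.")
        return " ".join(parts)


prompt = ShotPrompt(
    scene="a barista pours latte art in a sunlit cafe",
    style="photorealistic",
    perspective="close-up, slow push-in",
    sound_cues=[
        SoundCue(2.5, "a cup set on a saucer"),
        SoundCue(0.0, "milk steaming"),
    ],
)
print(prompt.to_text())
```

Keeping the fields separate makes iteration easier: you can change only the style or only one sound cue between attempts and compare the results.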

Example: creators pushing boundaries and viral clips

Soon after release, many creator videos appeared putting Sora 2 through its paces. In one in-depth review an experienced creator compares dozens of short tests, including style transfer, anime-style fight scenes and reference uploads for cameo clips. Such examples reveal what’s technically possible, as well as which glitches and odd artefacts still pop up.

These videos are instructive because they provide practical test cases: how does Sora 2 respond to physics instructions, how coherent do faces remain during fast motion, and how well does audio match lip-sync in different languages? For developers and content makers these breakdowns are invaluable when deciding how to deploy Sora 2 reliably in repeatable workflows.

Example: productive workflows and viral strategies

Some creators show not only what Sora 2 can do but also how to build a productive workflow around it. One example video outlines a three-step approach: researching formats with search agents, generating concepts with a large language model, and finally producing in Sora 2. This is a classic content-first method that marketers and start-up operators are keen to replicate.

What’s interesting is that this workflow shows how Sora 2 becomes part of a chain of tools: each piece fills a need in the production process and lets you test multiple concepts before polishing one. The video includes concrete prompt examples and showcase tests that demonstrate how short iterations can lead to viral clips.
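The chain of tools described above can be sketched as three pluggable steps. This is only an outline under my own assumptions: `run_pipeline`, `Concept` and the three stand-in functions are hypothetical, and in a real workflow each stand-in would be replaced by whatever search agent, language model and video tool you actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Concept:
    format_name: str  # the content format the idea is based on
    prompt: str       # the text prompt to hand to the video tool


def run_pipeline(
    topic: str,
    research: Callable[[str], list[str]],
    ideate: Callable[[str], list[str]],
    render: Callable[[str], str],
    max_clips: int = 3,
) -> list[tuple[Concept, str]]:
    """Chain research -> ideation -> rendering, capping how many clips are rendered."""
    concepts = [
        Concept(format_name, prompt)
        for format_name in research(topic)
        for prompt in ideate(format_name)
    ]
    # Render only the first few concepts so each test round stays cheap.
    return [(concept, render(concept.prompt)) for concept in concepts[:max_clips]]


# Stand-in steps (assumptions for illustration only).
def fake_research(topic: str) -> list[str]:
    return [f"{topic} before/after", f"{topic} point-of-view"]


def fake_ideate(format_name: str) -> list[str]:
    return [f"A 10-second clip in the '{format_name}' format"]


def fake_render(prompt: str) -> str:
    return f"[rendered] {prompt}"


for concept, clip in run_pipeline("coffee", fake_research, fake_ideate, fake_render):
    print(concept.format_name, "->", clip)
```

Passing the steps in as functions keeps each tool replaceable, which matches the idea of testing several concepts cheaply before polishing one.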

What exactly is Sora 2, in one sentence?

Sora 2 is a text-to-video model that generates short, coherent video clips from text prompts and reference images, complete with sound and improved motion, which in our experience makes it a huge accelerator for rapid prototyping and concept work.

Can Sora 2 recreate realistic people or celebrities?

Technically yes: Sora 2 can produce realistic images and voices, but that raises legal and ethical issues. In our experience it’s essential to secure permission and rights before using recognisable individuals or protected content.

Are the results instantly publishable on social media?

Some clips definitely are, particularly short formats. However, you should scrutinise artefacts, audio sync and rights to reference images. Always test on multiple devices and platforms before scaling up.

How can you use Sora 2 in a content workflow?

Combine Sora 2 with research and prompt tools: explore trending formats, generate concepts with an LLM, refine prompts and produce iteratively in Sora 2. In our experience this chain works best when you start small and validate quickly, then scale using A/B testing and metrics.
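For the A/B testing step, a standard two-proportion z-test is one way to check whether the difference between two clip variants is more than noise. The sketch below assumes you measure "completed views out of total views" for each variant; that metric, the function name and the example numbers are my own illustrative choices, not something prescribed by Sora 2 or any platform.

```python
import math


def compare_variants(success_a: int, total_a: int, success_b: int, total_b: int):
    """Two-proportion z-test; returns (rate_a, rate_b, z, two_sided_p)."""
    if total_a <= 0 or total_b <= 0:
        raise ValueError("each variant needs at least one view")
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Both variants have identical 0% or 100% rates: no detectable difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Made-up numbers: variant A had 120 completed views out of 1000, variant B 150 out of 1000.
rate_a, rate_b, z, p = compare_variants(120, 1000, 150, 1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.3f}")
```

A small p-value suggests the gap between variants is unlikely to be chance alone; with only a few hundred views per variant, treat the result as a hint and keep testing before you scale.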

What limitations do you still see with Sora 2?

Physics and complex interactions are better but not perfect. Hands and certain movements can still look unnatural, and long-form narratives need plenty of editing. Content moderation and abuse prevention also remain important.

What about copyright and moderation?

OpenAI and platforms have recently made adjustments to improve rights handling and moderation, but responsibility rests with the creators and businesses using Sora 2. Document your sources and licences carefully to avoid trouble later.

Is Sora 2 suited to business videos, such as product demos?

Yes. Sora 2 is very useful for short product demos and concept videos. In our experience it delivers fast, low-cost prototypes that you can then refine with traditional production if needed.

Where can I find more practical tutorials and inspiration?