Overview of AI agents and tool calling

AI agents are increasingly promoted as the next step after chatbots. But what does that mean at a technical level, and how does tool calling actually work? This article strips the hype from the terminology and breaks the topic down into concrete, understandable building blocks.

Key takeaways in this article:

Definition of AI agents and the difference from traditional chatbots

Explanation of tool calling, function calling, and how LLMs carry out actions

Architectures such as a single agent with multiple tools and multi-agent systems

Typical use cases in software development and business processes

Important considerations around security, error handling, and observability

By the end you will be able to:

Correctly position the key concepts surrounding AI agents

Explain how tool calling works under the hood

Better assess which architecture suits a particular use case

Ask targeted questions of developers or vendors about agent-based solutions

What exactly are AI agents?

The term AI agent is used in many ways, but at its core it refers to a software component that is given a goal, makes decisions independently, and performs actions through tools or systems. A modern AI agent usually combines a large language model with logic for memory, planning, and tool usage.

An AI agent differs from a traditional chatbot because it does not merely generate text; it can actually take steps within a process. Think of creating a ticket, performing a search in an external database, or scheduling an appointment. The agent interprets the user’s intent, chooses which tool it needs, and then calls that tool via a defined interface.

Technical literature often distinguishes several variants. A reactive agent mainly responds to input without extensive planning. A reflective or planning agent can think several steps ahead, formulate sub-goals, and evaluate its own intermediate results. In many modern solutions these concepts are combined, leading to agent frameworks where reasoning, memory, and tool calling are tightly integrated.

AI agents are typically driven by an LLM that serves as the decision engine. The model receives system instructions, context, and a description of available tools. On that basis the model generates the next step, for example a tool call or a direct textual answer. This pattern appears in various platforms for agent orchestration and in the function-calling interfaces offered by the major LLM providers.

Tool calling and function calling explained

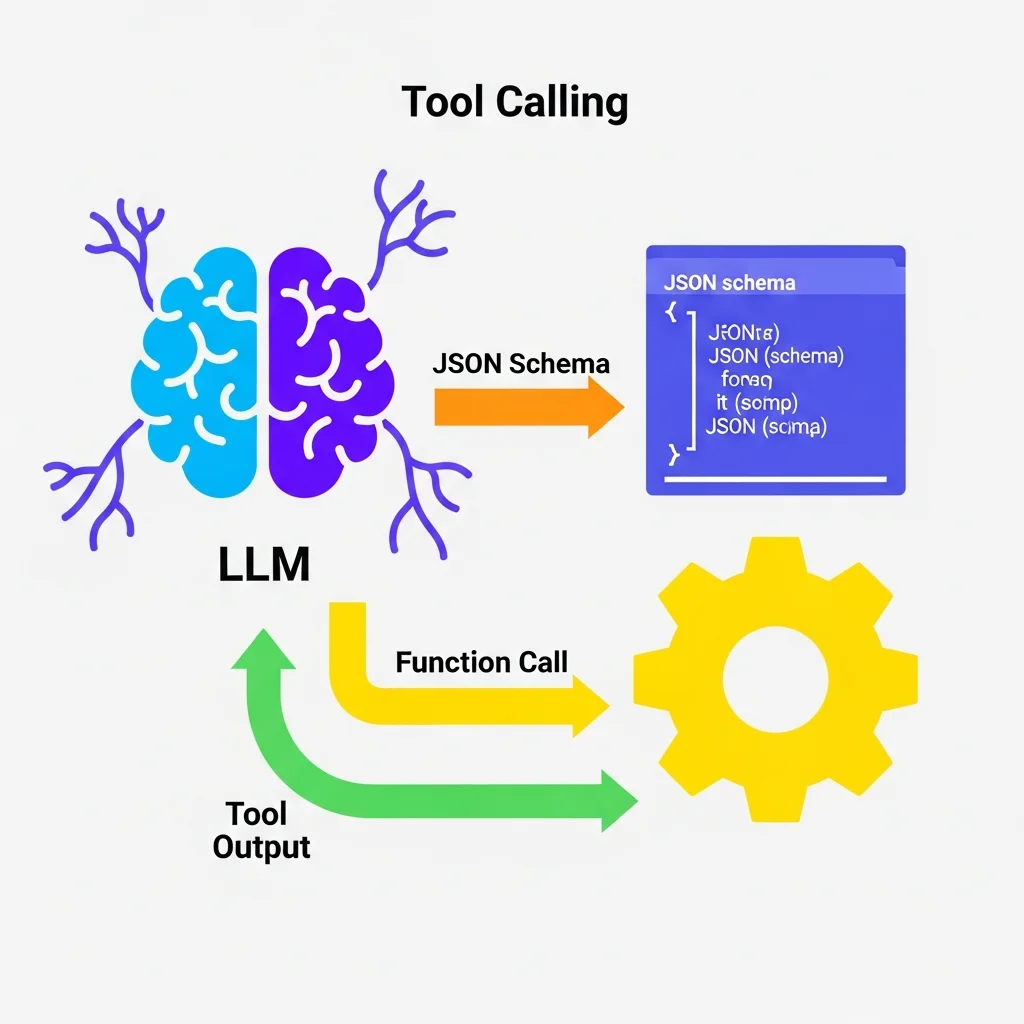

Tool calling, also known as function calling, is a mechanism in which an LLM produces not only text but also structured instructions that an application can execute. The model itself never runs code: it might select a function `search_documents` and supply specific parameters, and the application then executes that function in real code.

In practice, tools are defined as functions with a name, a description, and a parameter schema. The schema is usually JSON-based and states which fields are required and which types are expected. The LLM receives these tool definitions as part of the prompt. If the agent decides that a tool is needed, it generates a structured object instead of ordinary text. The application recognises this as a tool call, executes the function, and returns the result to the model in the next iteration.
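The round trip described above can be sketched in a few lines of Python. This is a minimal illustration, not any provider's API: the wire format (`{"tool": ..., "arguments": ...}`), the `TOOLS` registry, the `handle_model_output` helper, and the stub body of `search_documents` are all assumptions made for the example.

```python
import json

# Hypothetical tool implementation; a real system would query a search index.
def search_documents(query: str, limit: int = 3) -> list[str]:
    corpus = ["refund policy", "shipping policy", "privacy statement"]
    return [doc for doc in corpus if query.lower() in doc][:limit]

# Registry mapping tool names (as advertised to the model) to real functions.
TOOLS = {"search_documents": search_documents}

def handle_model_output(raw: str) -> dict:
    """Execute a tool call if the model emitted one, else pass the text through."""
    try:
        message = json.loads(raw)
    except json.JSONDecodeError:
        return {"type": "text", "content": raw}
    if not isinstance(message, dict) or "tool" not in message:
        return {"type": "text", "content": raw}
    func = TOOLS.get(message["tool"])
    if func is None:
        # Reported back to the model instead of crashing the application.
        return {"type": "tool_error", "content": f"unknown tool: {message['tool']}"}
    result = func(**message.get("arguments", {}))
    return {"type": "tool_result", "tool": message["tool"], "content": result}

# Assumed shape of a structured call produced by the model.
model_output = '{"tool": "search_documents", "arguments": {"query": "policy", "limit": 2}}'
print(handle_model_output(model_output))
```

The returned dictionary is what the application would append to the conversation before asking the model for its next step.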

A simplified example of a tool definition looks like this:

    {
      "name": "create_support_ticket",
      "description": "Create a new support ticket in the system",
      "parameters": {
        "type": "object",
        "properties": {
          "subject": { "type": "string" },
          "priority": { "type": "string", "enum": ["low", "normal", "high"] },
          "customer_id": { "type": "string" }
        },
        "required": ["subject", "customer_id"]
      }
    }

When the agent decides to use this tool, the LLM will generate a structured call containing the correct fields. The host application then executes the underlying logic, for instance an API call to a ticketing system. The result, such as the new ticket number, is returned to the model as an observation. The agent can then include this in the conversation with the user or use it as input for subsequent steps.
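Before the host application executes such a call, it usually makes sense to check the generated arguments against the schema. The sketch below does this by hand for the `create_support_ticket` definition shown earlier. The helper names `validate_arguments` and `execute_ticket_call`, the stub ticket ID, the default priority of `normal`, and the decision to reject unknown fields are assumptions for illustration; a production system would more likely use a full JSON Schema validator.

```python
# Parameter schema from the tool definition above, as a Python dict.
TICKET_TOOL = {
    "name": "create_support_ticket",
    "parameters": {
        "type": "object",
        "properties": {
            "subject": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "normal", "high"]},
            "customer_id": {"type": "string"},
        },
        "required": ["subject", "customer_id"],
    },
}

def validate_arguments(schema: dict, args: dict) -> list[str]:
    """Return a list of problems; an empty list means the call is acceptable.

    Covers only what this schema uses: required fields, string types, enums.
    """
    errors = []
    props = schema["properties"]
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required field: {name}")
    for name, value in args.items():
        if name not in props:
            # Assumption: unknown fields are rejected (stricter than the schema).
            errors.append(f"unexpected field: {name}")
            continue
        spec = props[name]
        if spec.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{name} must be a string")
        elif "enum" in spec and value not in spec["enum"]:
            errors.append(f"{name} must be one of {spec['enum']}")
    return errors

def create_support_ticket(subject: str, customer_id: str, priority: str = "normal") -> dict:
    # Stub: a real implementation would call the ticketing system's API.
    return {"ticket_id": "T-0001", "subject": subject, "priority": priority}

def execute_ticket_call(args: dict) -> dict:
    errors = validate_arguments(TICKET_TOOL["parameters"], args)
    if errors:
        # Returned to the model as an observation so it can correct the call.
        return {"ok": False, "errors": errors}
    return {"ok": True, "result": create_support_ticket(**args)}

print(execute_ticket_call({"subject": "Login fails", "customer_id": "C-42", "priority": "high"}))
print(execute_ticket_call({"subject": "Login fails", "priority": "urgent"}))
```

Returning validation errors as an observation, rather than raising, gives the model a chance to repair its own call in the next round.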

Tool calling often spans multiple rounds. Depending on the complexity of the task, the agent can call a series of tools one after another, with each result feeding into the next decision. This forms the basis for agentic workflows in which an agent first retrieves data, then performs a calculation, and finally compiles a report. Many LLM platforms now combine tool usage with planning and limited memory, which makes such scenarios practically feasible.
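A multi-round workflow of this kind boils down to a loop that alternates between asking the model for the next step and executing it. The sketch below replaces the LLM with a scripted stand-in so that it runs without any external service. The tool names, the sales figures, and the `run_agent` helper are invented for the example.

```python
from typing import Callable

def fetch_sales(region: str) -> list[int]:
    # Stub data source; the figures are invented for the example.
    return {"north": [120, 80, 100]}.get(region, [])

def total(values: list[int]) -> int:
    return sum(values)

def compile_report(region: str, total_sales: int) -> str:
    return f"Sales report for {region}: total {total_sales}"

TOOLS: dict[str, Callable] = {
    "fetch_sales": fetch_sales,
    "total": total,
    "compile_report": compile_report,
}

def scripted_model(observations: list) -> dict:
    """Stand-in for an LLM: picks the next step from what it has seen so far."""
    if len(observations) == 0:
        return {"tool": "fetch_sales", "arguments": {"region": "north"}}
    if len(observations) == 1:
        return {"tool": "total", "arguments": {"values": observations[0]}}
    if len(observations) == 2:
        return {"tool": "compile_report",
                "arguments": {"region": "north", "total_sales": observations[1]}}
    return {"final": observations[2]}

def run_agent(model: Callable[[list], dict], max_rounds: int = 5) -> str:
    observations: list = []
    for _ in range(max_rounds):
        step = model(observations)
        if "final" in step:
            return step["final"]
        result = TOOLS[step["tool"]](**step["arguments"])
        observations.append(result)  # fed back to the model in the next round
    raise RuntimeError("agent did not finish within max_rounds")

print(run_agent(scripted_model))  # prints "Sales report for north: total 300"
```

The `max_rounds` cap is a deliberate design choice: without it, a model that keeps requesting tools could loop indefinitely.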

Architectures with AI agents and tools

In practice, several basic architectures are distinguished for AI agents and tool calling. A common pattern is the single agent with multiple tools. In this model one agent gains access to a set of tools, for example a search function, an email system, and a CRM API. The agent selects the relevant tool at each step and remains responsible for the entire task from start to finish.

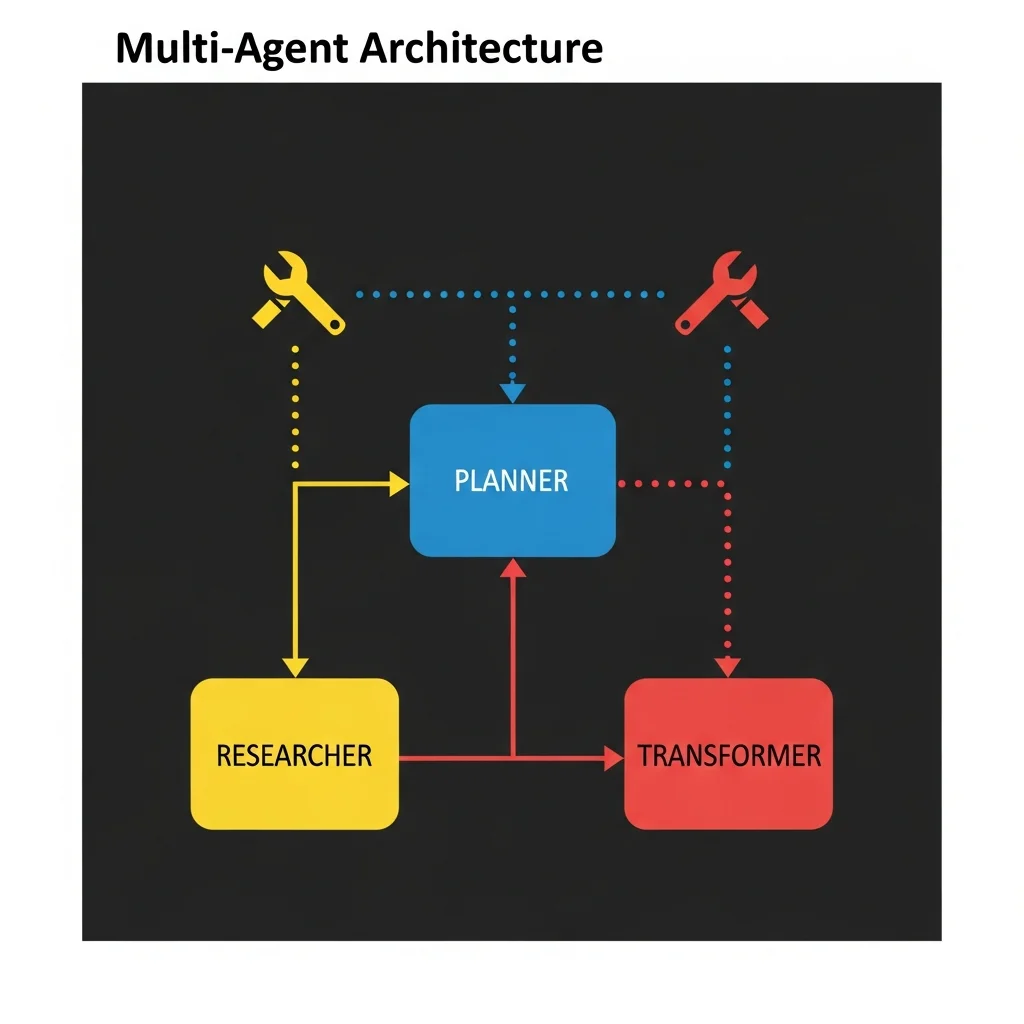

Another architecture is the multi-agent system, where multiple specialised agents work together. A planning agent might break the task into sub-tasks and pass them on to specialist agents, such as a research agent, a data transformation agent, and a reporting agent. These agents communicate via messages, with an orchestrator or router deciding which agent acts when. This pattern is often used for complex workflows across several domains or when scalability and fault isolation are important.
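The following sketch shows the routing idea in its simplest form, assuming a fixed plan and agents that are plain functions rather than LLM-backed components. The `Message` type, the agent names, and the `orchestrate` function are hypothetical and exist only to make the message flow visible.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Message:
    recipient: str  # name of the agent that should handle this message
    content: str

# Specialist agents, reduced to simple functions for the sketch.
def research_agent(content: str) -> str:
    return f"findings on '{content}'"

def transform_agent(content: str) -> str:
    return content.upper()

def reporting_agent(content: str) -> str:
    return f"REPORT: {content}"

AGENTS: dict[str, Callable[[str], str]] = {
    "research": research_agent,
    "transform": transform_agent,
    "reporting": reporting_agent,
}

def planning_agent(task: str) -> list[str]:
    """Break the task into an ordered list of specialists (a fixed plan here)."""
    return ["research", "transform", "reporting"]

def orchestrate(task: str) -> tuple[str, list[str]]:
    """Route the task through the plan; each agent's output feeds the next."""
    trace = []
    content = task
    for agent_name in planning_agent(task):
        message = Message(recipient=agent_name, content=content)
        content = AGENTS[message.recipient](message.content)
        trace.append(agent_name)  # record which agent acted, for monitoring
    return content, trace

result, trace = orchestrate("churn")
print(result)
print(trace)
```

Because every hand-off passes through `orchestrate`, this is also the natural place to add logging or to isolate a failing agent.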

The choice between single agent and multi-agent is usually determined by complexity and maintainability. For relatively straightforward business processes, a well-defined single agent architecture is often sufficient and easier to test. For larger scenarios, such as end-to-end customer processes across multiple systems, a multi-agent setup with clear responsibilities per agent can be more transparent. It also becomes easier to monitor, log, and improve agents individually.

Observability and control are key concerns in agent architectures. Every tool call crosses a boundary between the LLM and the real world. Production environments therefore often include a middleware layer that logs, validates, and if necessary enriches all tool calls. Guardrails are also applied, for example by defining permissions per tool and by validating input and output. This makes it visible which actions an agent performs and allows unwanted actions to be blocked or rolled back.
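A middleware layer of this kind can be as simple as a wrapper around each tool function. The sketch below logs every call with its arguments and duration and rejects calls that fail a validation rule. The `with_middleware` helper, the `send_email` stub, and the rule that mail may only go to `@example.com` addresses are assumptions chosen for illustration.

```python
import json
import logging
import time
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("tool_calls")

class ToolCallRejected(Exception):
    """Raised when a tool call fails validation and must not be executed."""

def with_middleware(name: str, func: Callable[..., Any],
                    validate: Callable[[dict], bool]) -> Callable[[dict], Any]:
    def wrapped(arguments: dict) -> Any:
        if not validate(arguments):
            log.warning("rejected tool=%s args=%s", name, json.dumps(arguments))
            raise ToolCallRejected(f"invalid arguments for {name}")
        started = time.perf_counter()
        result = func(**arguments)
        elapsed_ms = (time.perf_counter() - started) * 1000
        log.info("tool=%s args=%s duration_ms=%.1f",
                 name, json.dumps(arguments), elapsed_ms)
        return result
    return wrapped

def send_email(to: str, body: str) -> str:
    # Stub: a real implementation would hand the message to a mail service.
    return f"queued mail to {to}"

# Guardrail (assumed policy): the agent may only mail internal addresses.
safe_send_email = with_middleware(
    "send_email", send_email,
    validate=lambda a: str(a.get("to", "")).endswith("@example.com"),
)

print(safe_send_email({"to": "support@example.com", "body": "Ticket created"}))
```

Validation happens before execution, so a rejected call never reaches the underlying system and nothing needs to be rolled back.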

Applications, quality assurance, and security aspects

AI agents with tool calling are used in a wide range of domains, from customer service to document processing and software development. In customer service scenarios, for example, an agent can retrieve customer data, amend orders, or prepare a refund. In document-driven processes an agent can analyse files, extract relevant parts, and generate draft documents, with tools providing access to document storage, OCR, and workflow systems.

Quality assurance for agent-based systems requires a combination of classical software testing methods and new evaluation techniques for LLM behaviour. Unit and integration tests are supplemented with scenario-based evaluations, where the agent is confronted with realistic dialogues and edge cases. Evaluation looks not only at the content of answers but also at tool usage, the order of steps, and adherence to predefined policies.
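A scenario-based evaluation can be sketched as a small harness that compares the tools an agent actually called with the tools it was expected to call. Everything here is hypothetical: the `Scenario` fields, the tool names, and `fake_agent`, which stands in for a real agent and returns only the ordered list of tool names it used.

```python
from dataclasses import dataclass, field

@dataclass
class Scenario:
    name: str
    user_message: str
    expected_tools: list[str]  # tools in the order they should be called
    forbidden_tools: set[str] = field(default_factory=set)

def fake_agent(user_message: str) -> list[str]:
    """Stand-in agent that returns the names of the tools it called, in order."""
    if "refund" in user_message.lower():
        return ["get_customer", "get_order", "prepare_refund"]
    return ["get_customer"]

def evaluate(scenario: Scenario, trace: list[str]) -> dict:
    return {
        "scenario": scenario.name,
        # Checks both which tools were used and the order of the steps.
        "order_ok": trace == scenario.expected_tools,
        # Policy check: none of the forbidden tools may appear in the trace.
        "policy_ok": not (set(trace) & scenario.forbidden_tools),
    }

scenarios = [
    Scenario("refund request", "I want a refund for my order",
             ["get_customer", "get_order", "prepare_refund"],
             forbidden_tools={"issue_refund"}),
    Scenario("parcel status", "Where is my parcel?",
             ["get_customer", "get_order"]),
]

results = [evaluate(s, fake_agent(s.user_message)) for s in scenarios]
for r in results:
    print(r)
```

The second scenario fails the order check on purpose, since the stand-in agent never looks up the order; this is the kind of regression such a harness is meant to surface.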

Security is central to tool calling because the agent gains access to systems that can make changes or read sensitive data. Production systems therefore use explicit authorisation models per tool. A tool might have read-only rights or be limited to a particular customer segment. Input sanitisation, rate limiting, and auditing of every tool call are standard measures to reduce misuse or errors.
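These measures can be combined in a single per-tool policy check. The sketch below assumes a simple role model, a sliding one-minute window for rate limiting, and an in-memory audit log. The `ToolPolicy` class, the `authorise` function, and the role and tool names are illustrative only.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ToolPolicy:
    allowed_roles: set[str]
    read_only: bool
    max_calls_per_minute: int
    _calls: list[float] = field(default_factory=list)

    def check(self, role: str, writes: bool,
              now: Optional[float] = None) -> tuple[bool, str]:
        now = time.monotonic() if now is None else now
        if role not in self.allowed_roles:
            return False, "role not authorised"
        if writes and self.read_only:
            return False, "tool is read-only"
        # Sliding window: keep only calls from the last 60 seconds.
        self._calls = [t for t in self._calls if now - t < 60]
        if len(self._calls) >= self.max_calls_per_minute:
            return False, "rate limit exceeded"
        self._calls.append(now)
        return True, "ok"

AUDIT_LOG: list[dict] = []

def authorise(policy: ToolPolicy, tool: str, role: str, writes: bool,
              now: Optional[float] = None) -> bool:
    allowed, reason = policy.check(role, writes, now)
    # Every decision is recorded, including refusals.
    AUDIT_LOG.append({"tool": tool, "role": role, "allowed": allowed, "reason": reason})
    return allowed

policy = ToolPolicy(allowed_roles={"support"}, read_only=True, max_calls_per_minute=2)
print(authorise(policy, "get_customer", "support", writes=False))
print(authorise(policy, "get_customer", "support", writes=True))
print(AUDIT_LOG[-1]["reason"])
```

The optional `now` argument exists only to make the time-dependent behaviour testable; in normal use the monotonic clock is read automatically.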

Finally, privacy and data minimisation need attention. Agents often work with context windows that load information into the model temporarily. By pseudonymising data, masking fields, and sharing only strictly necessary information, legal and organisational requirements can be met. Logs and conversation history should therefore be designed carefully, with clear retention policies and the ability to delete or anonymise specific interactions.
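As a rough illustration of these three techniques, the sketch below filters a record before it is placed in the model's context. The field names, the allow-list, the email pattern, and the placeholder secret key are assumptions; real deployments would load the key from a secret store and would need broader detection of personal data than a single regular expression.

```python
import hashlib
import hmac
import re

# Assumption: in practice this key comes from a managed secret store.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymise(value: str) -> str:
    """Replace an identifier with a stable keyed hash so records stay linkable."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"pseud_{digest[:12]}"

# Deliberately simple pattern; it will not catch every valid address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def mask_emails(text: str) -> str:
    return EMAIL_PATTERN.sub("[email]", text)

# Only these fields are ever shared with the model.
ALLOWED_FIELDS = {"customer_id", "order_status", "note"}

def minimise(record: dict) -> dict:
    """Keep only the fields the agent needs, pseudonymise IDs, mask free text."""
    out = {}
    for key, value in record.items():
        if key not in ALLOWED_FIELDS:
            continue  # data minimisation: drop everything not explicitly needed
        if key == "customer_id":
            out[key] = pseudonymise(value)
        elif isinstance(value, str):
            out[key] = mask_emails(value)
        else:
            out[key] = value
    return out

record = {
    "customer_id": "C-42",
    "name": "Jane Doe",
    "order_status": "shipped",
    "note": "Contact jane.doe@example.com please",
}
print(minimise(record))
```

Using a keyed hash rather than a plain hash means the pseudonyms cannot be recomputed by someone who knows the original identifiers but not the key.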